Wan 2.1 Fun | ControlNet Video Generation

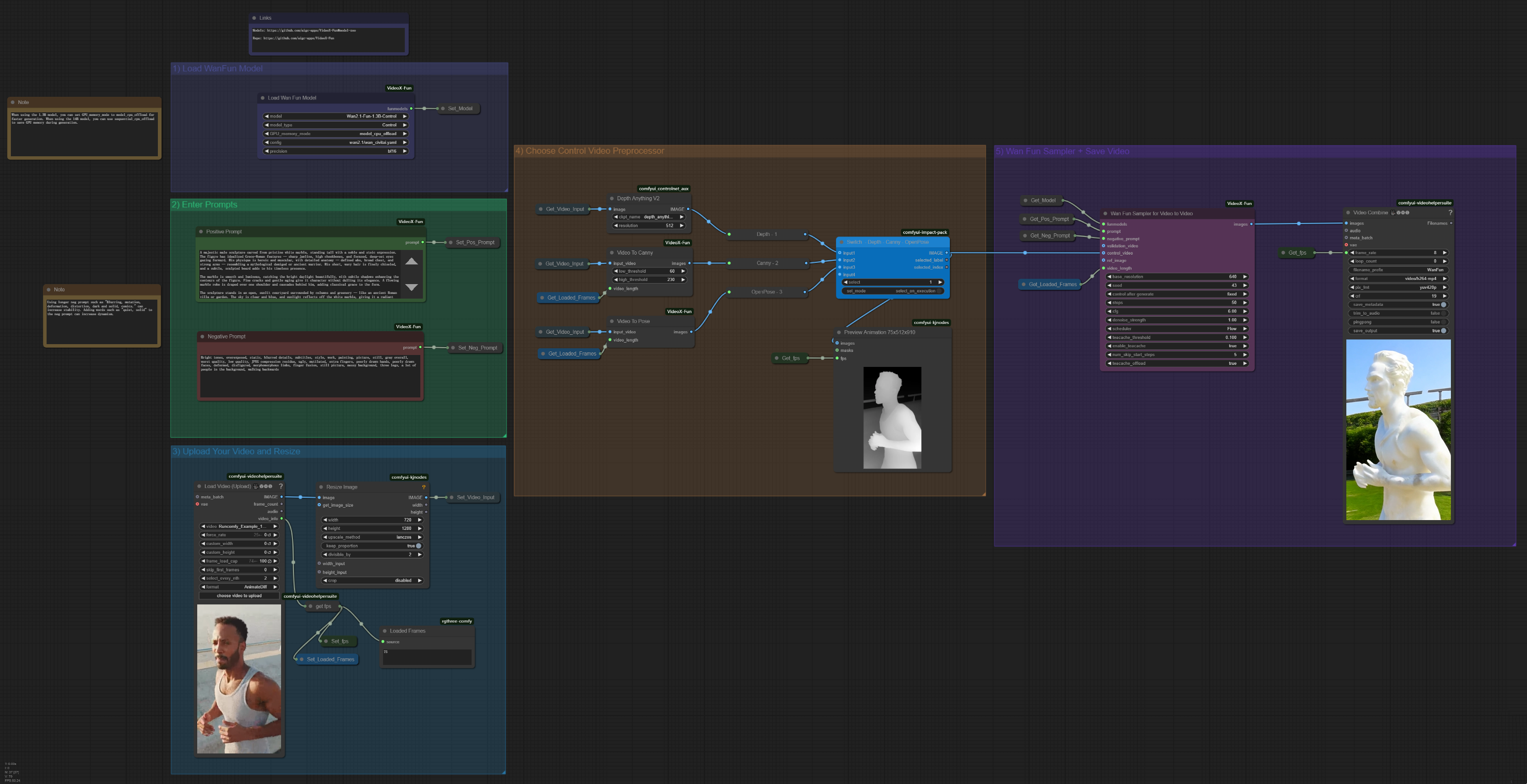

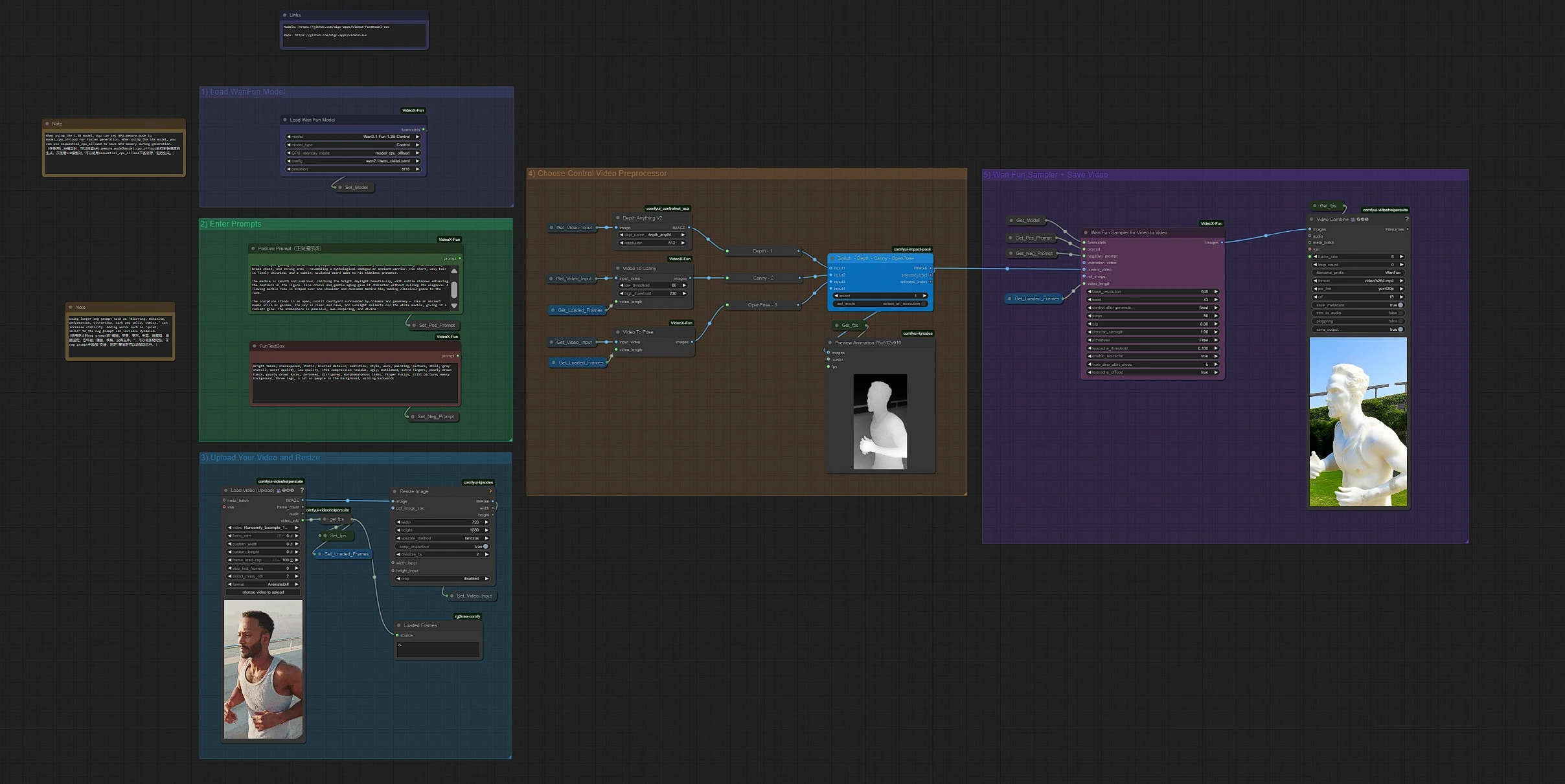

Wan 2.1 Fun is a flexible ControlNet-style AI video generation workflow built around the Wan 2.1 model family. It enables controlled video creation by extracting Depth, Canny, or OpenPose passes from an input video and applying them to Wan 2.1 Fun Control models. With 1.3B and 14B parameter variants, Wan 2.1 Fun scales from quick creative exploration to high-quality, precision-guided animation.

Wan 2.1 Fun offers an intuitive way to control AI video generation with the Wan 2.1 Fun models. Because it is built on a ControlNet-inspired framework, it works with standard ControlNet preprocessor modules. By extracting Depth, Canny, or OpenPose passes from input footage, this ComfyUI workflow lets you guide the structure, motion, and style of the output precisely, rather than relying on text prompts alone.

Wan 2.1 Fun brings structured visual data into the generation process, preserving motion accuracy, improving stylization, and enabling more deliberate transformations. Whether you're building dynamic animations, pose-driven performances, or abstract motion art, Wan 2.1 Fun gives you direct artistic control while drawing on the expressive power of the Wan 2.1 Fun models and ControlNet-style conditioning.

Why Use Wan 2.1 Fun?

The Wan 2.1 Fun workflow offers a flexible way to guide AI motion and structure using ControlNet-style visual conditioning:

- Use Depth, Canny, or OpenPose for structured control

- Built on a ControlNet-inspired system, enabling guided transformations

- Compatible with multiple ControlNet preprocessors, including third-party extensions

- Achieve clearer spatial consistency, form, and motion flow through ControlNet-style conditioning

- No need for complex prompt engineering or training

- Lightweight and responsive with high visual fidelity

- Excellent for action design, stylized choreography, or performance-driven motion synthesis

How to Use the Wan 2.1 Fun ControlNet Workflow

Wan 2.1 Fun Overview

- Load WanFun Model (purple): model loader

- Enter Prompts (green): positive and negative prompts

- Upload Your Video and Resize (cyan blue): user inputs, reference footage and resizing

- Choose Control Video Preprocessor (orange): ControlNet node for Depth, Canny, or OpenPose

- Wan Fun Sampler + Save Video (pink): sampling and output

Quick Start Steps:

- Select your Wan 2.1 Fun model (Wan2.1-Fun-Control, 1.3B or 14B)

- Input your positive and negative prompts

- Upload reference footage

- Run the workflow via Queue Prompt

- Retrieve results from the last node or the Outputs folder
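If you prefer to queue runs from a script instead of clicking Queue Prompt, ComfyUI's local HTTP server accepts a workflow exported in its API format at the `/prompt` endpoint. The sketch below assumes a default local server at `127.0.0.1:8188`; it only builds and sends the request, and the server's JSON reply includes a `prompt_id` for the queued job.

```python
import json
import urllib.request


def build_queue_request(workflow: dict, server: str = "http://127.0.0.1:8188") -> urllib.request.Request:
    """Wrap an API-format workflow in the JSON body expected by ComfyUI's /prompt endpoint."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_prompt(workflow: dict, server: str = "http://127.0.0.1:8188") -> dict:
    """Send the workflow to a running ComfyUI server and return its JSON reply."""
    with urllib.request.urlopen(build_queue_request(workflow, server)) as resp:
        return json.loads(resp.read())
```

To use it, load the exported workflow JSON (for example, a hypothetical `wan_fun_control_api.json`) with `json.load` and pass the resulting dict to `queue_prompt`. Results still land in ComfyUI's output folder as usual.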

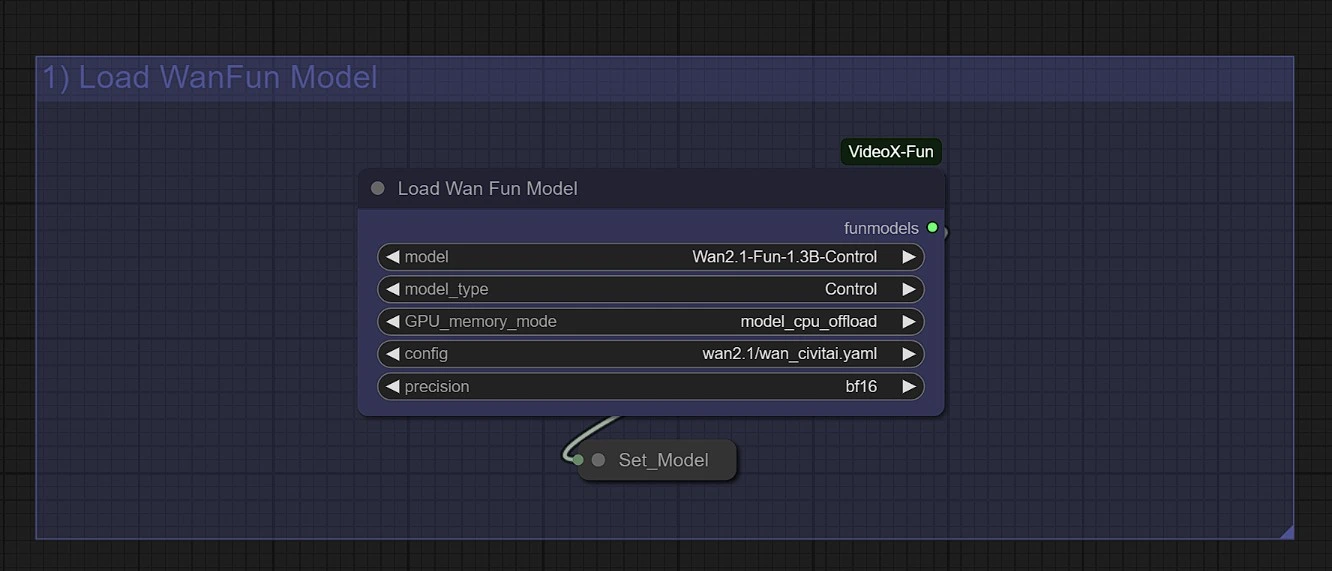

1 - Load WanFun Model

Choose the appropriate Wan2.1-Fun-Control variant for ControlNet-style conditioning:

- Use model_cpu_offload for smoother runs with 1.3B

- Use sequential_cpu_offload for lower GPU load on 14B
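The two mode names are assumed here to correspond to the CPU-offloading strategies in Hugging Face diffusers. Model-level offload moves whole sub-models onto the GPU only while they run. Sequential offload streams individual layers, which saves more VRAM at the cost of speed. The sketch assumes a diffusers-style pipeline object exposing `enable_model_cpu_offload()` and `enable_sequential_cpu_offload()`. The size-to-mode table simply encodes the recommendation above, and `choose_offload_mode` / `apply_offload` are hypothetical helper names.

```python
# Recommended offload mode per Wan2.1-Fun-Control variant, as described above.
OFFLOAD_BY_SIZE = {
    "1.3B": "model_cpu_offload",
    "14B": "sequential_cpu_offload",
}


def choose_offload_mode(model_size: str) -> str:
    """Return the suggested offload mode for a size label such as '1.3B' or '14B'."""
    try:
        return OFFLOAD_BY_SIZE[model_size]
    except KeyError:
        raise ValueError(f"unknown model size: {model_size!r}") from None


def apply_offload(pipe, mode: str) -> None:
    """Enable the chosen strategy on a diffusers-style pipeline (assumed interface)."""
    if mode == "model_cpu_offload":
        # Whole sub-models move to the GPU only while they are used.
        pipe.enable_model_cpu_offload()
    elif mode == "sequential_cpu_offload":
        # Layers stream in one at a time: lowest VRAM, slowest.
        pipe.enable_sequential_cpu_offload()
    else:
        raise ValueError(f"unsupported offload mode: {mode!r}")
```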

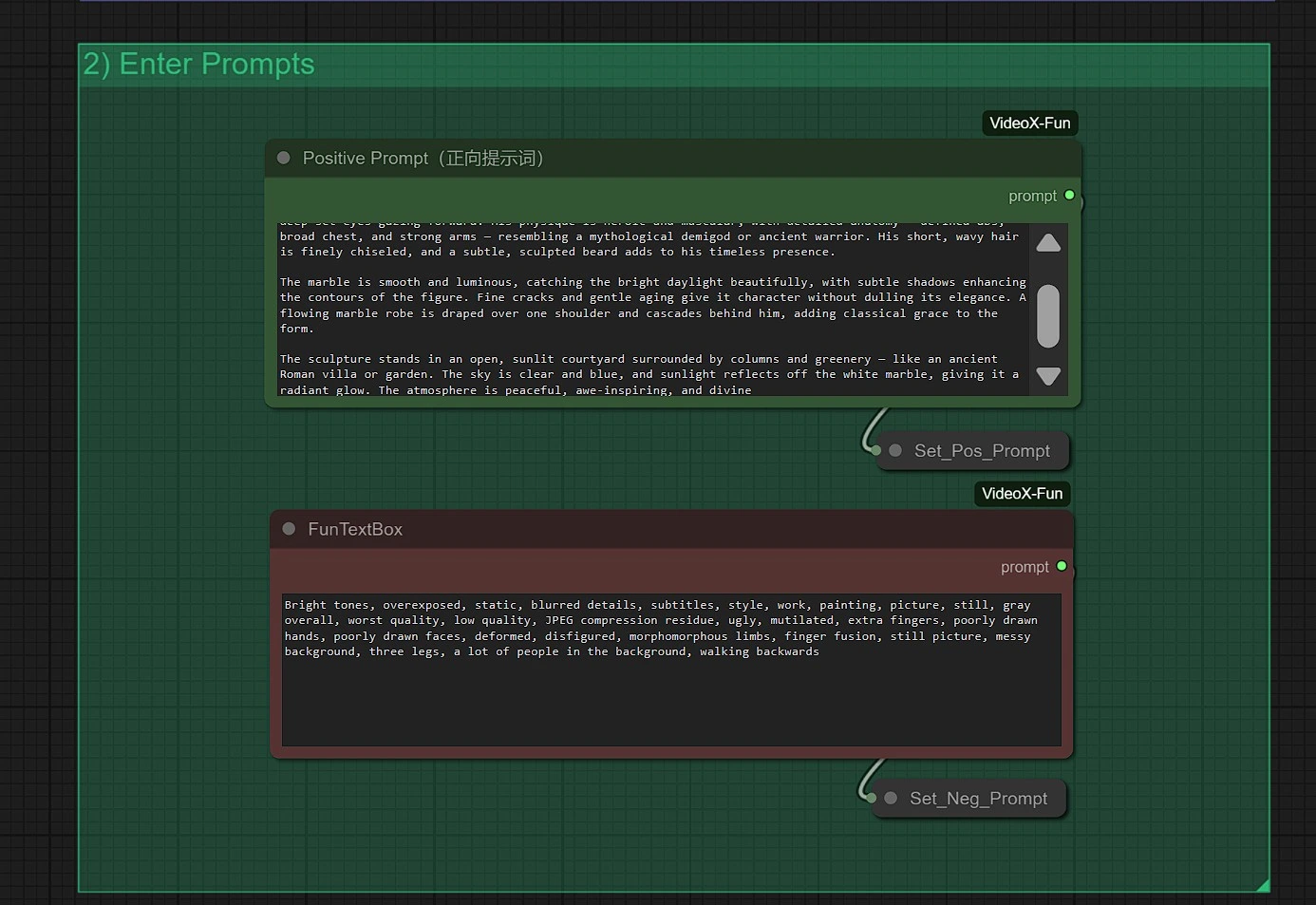

2 - Enter Prompts

- Positive Prompt:

  - Describes motion style, atmosphere, or visual texture

  - Vivid and descriptive prompts enhance creative control

- Negative Prompt:

  - To improve output stability, try words like "Blurring, mutation, deformation, distortion, dark and solid, comics."

  - For more dynamic motion, add terms like "quiet, solid"
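The negative-prompt advice above can be captured in a small helper. This is an illustrative sketch: `build_negative_prompt` is a hypothetical name, and the term lists are just the examples quoted above, not an exhaustive or official list.

```python
from typing import List, Optional

# Example terms quoted above; extend or replace them for your footage.
STABILITY_TERMS = ["Blurring", "mutation", "deformation", "distortion", "dark and solid", "comics"]
DYNAMIC_MOTION_TERMS = ["quiet", "solid"]


def build_negative_prompt(more_motion: bool = False, extra: Optional[List[str]] = None) -> str:
    """Join stability terms, optional motion terms, and extras into one comma-separated prompt."""
    terms = list(STABILITY_TERMS)
    if more_motion:
        terms += DYNAMIC_MOTION_TERMS
    terms += extra or []
    # Drop case-insensitive duplicates while keeping first-seen order.
    seen, unique = set(), []
    for term in terms:
        key = term.lower()
        if key not in seen:
            seen.add(key)
            unique.append(term)
    return ", ".join(unique)
```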

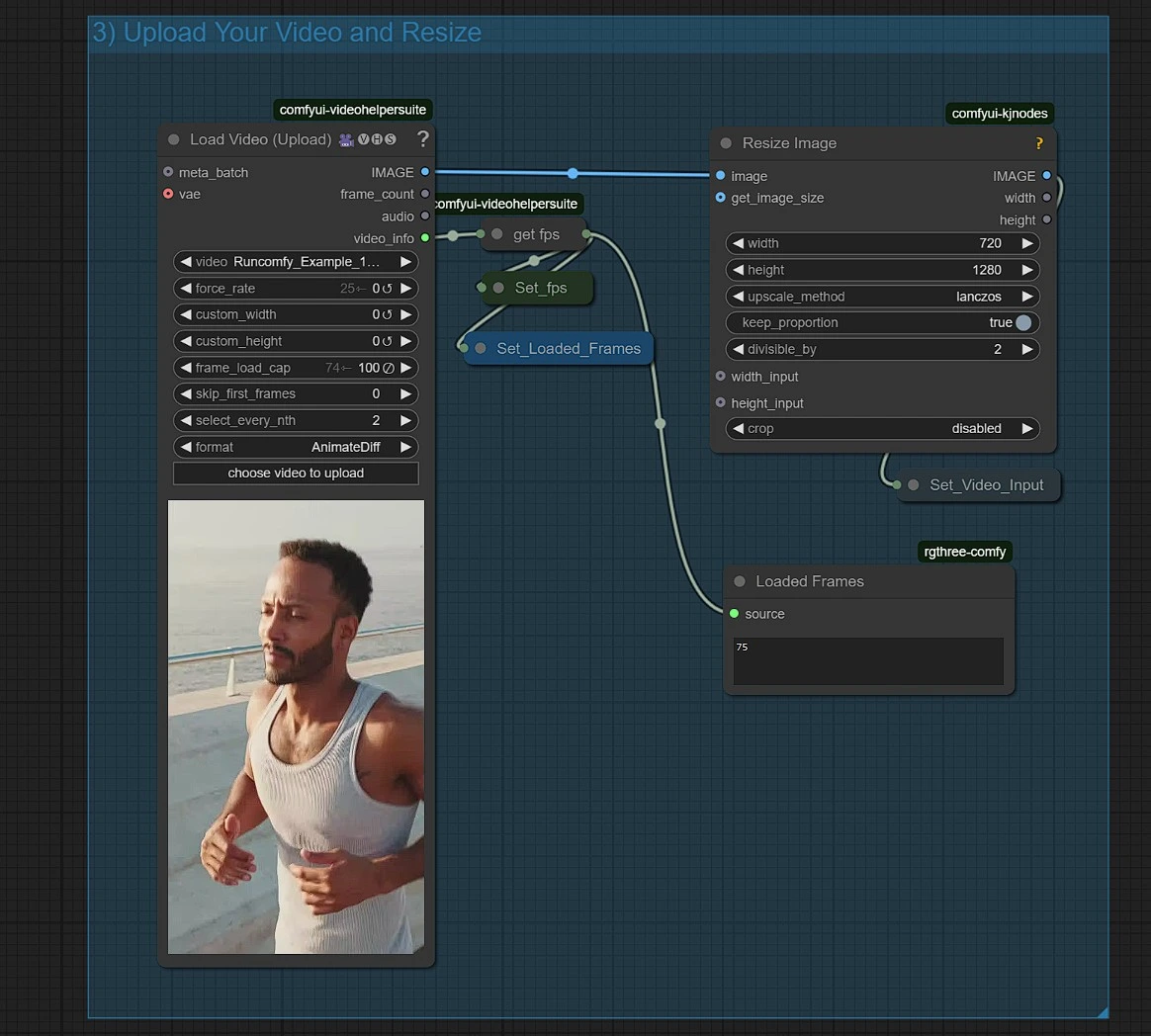

3 - Upload Your Video and Resize

Upload your source footage, then resize it to your target frame dimensions for best results with Wan 2.1 Fun.
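Here is a minimal sketch for picking a target size and clip length. It rests on two assumptions drawn from Wan 2.1's published architecture rather than from this workflow. First, frame width and height should be multiples of 16, because the VAE downsamples by 8x and the model then uses 2x2 patches. Second, frame counts of the form 4k + 1 (such as 81) map cleanly onto the VAE's 4x temporal compression. The 832-pixel long side is an assumed target, not a workflow default.

```python
def fit_to_wan(width: int, height: int, long_side: int = 832, multiple: int = 16) -> tuple:
    """Scale (width, height) so the longer edge is about `long_side`, snapping both to `multiple`."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    longest = max(width, height)

    def snap(value: int) -> int:
        scaled = value * long_side / longest
        # Round to the nearest multiple, but never below one multiple.
        return max(multiple, round(scaled / multiple) * multiple)

    return snap(width), snap(height)


def usable_frame_count(n_frames: int, temporal_stride: int = 4) -> int:
    """Largest count of the form temporal_stride * k + 1 that does not exceed n_frames."""
    if n_frames < 1:
        raise ValueError("need at least one frame")
    return (n_frames - 1) // temporal_stride * temporal_stride + 1
```

For a 1920x1080 clip this gives 832x464, and a 100-frame clip would be trimmed to 97 frames.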

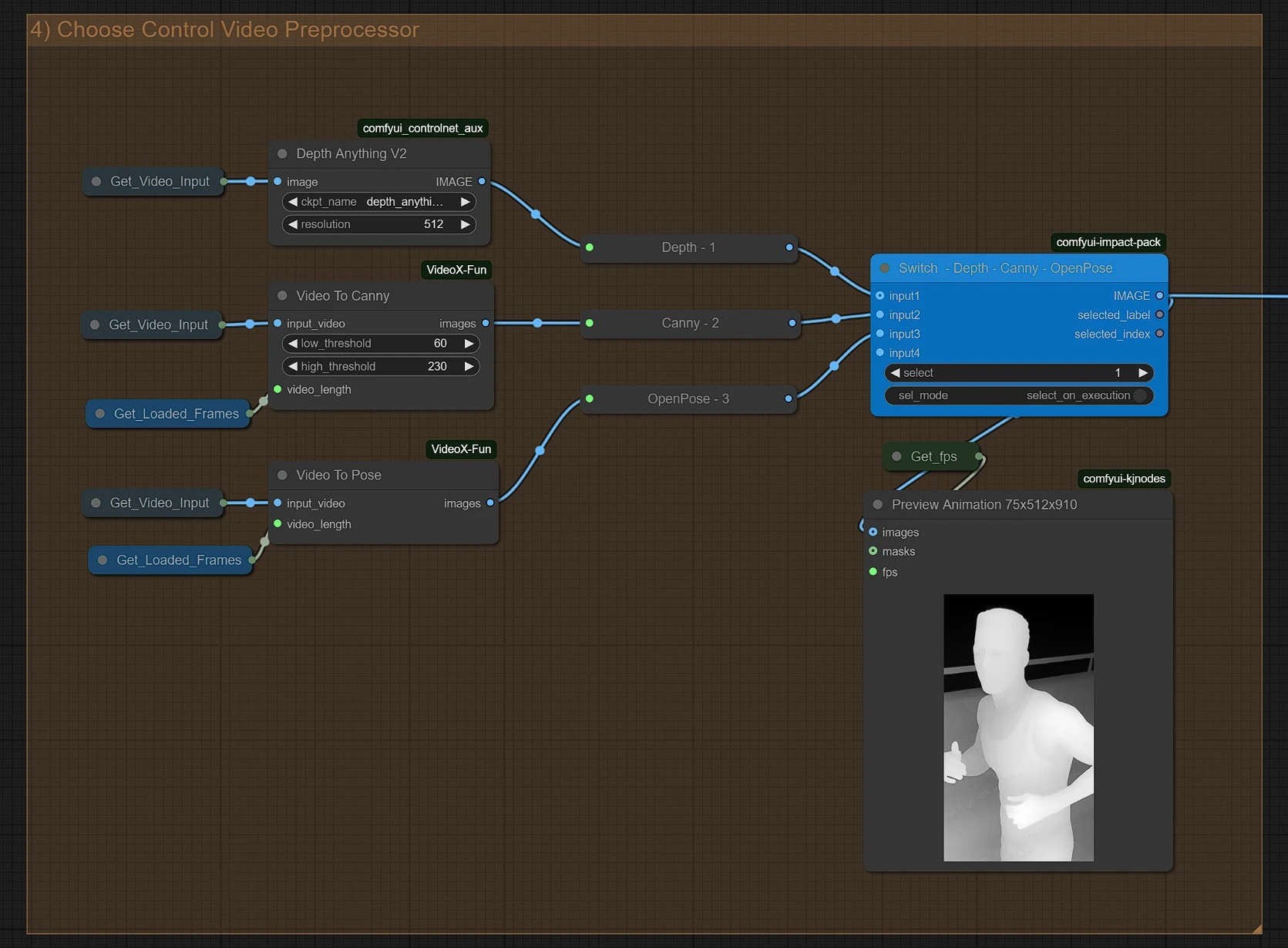

4 - Choose Control Video Preprocessor

This section activates the ControlNet-based preprocessing system for Wan 2.1 Fun:

- Depth: Captures spatial layout as a depth map

- Canny: Extracts strong edge contours and structure

- OpenPose: Identifies joints, limbs, and posture for motion-driven work

These guides condition the Wan 2.1 Fun workflow to follow visual cues instead of relying solely on prompts.

All modules align with ControlNet preprocessing standards, allowing you to extend functionality via custom nodes.

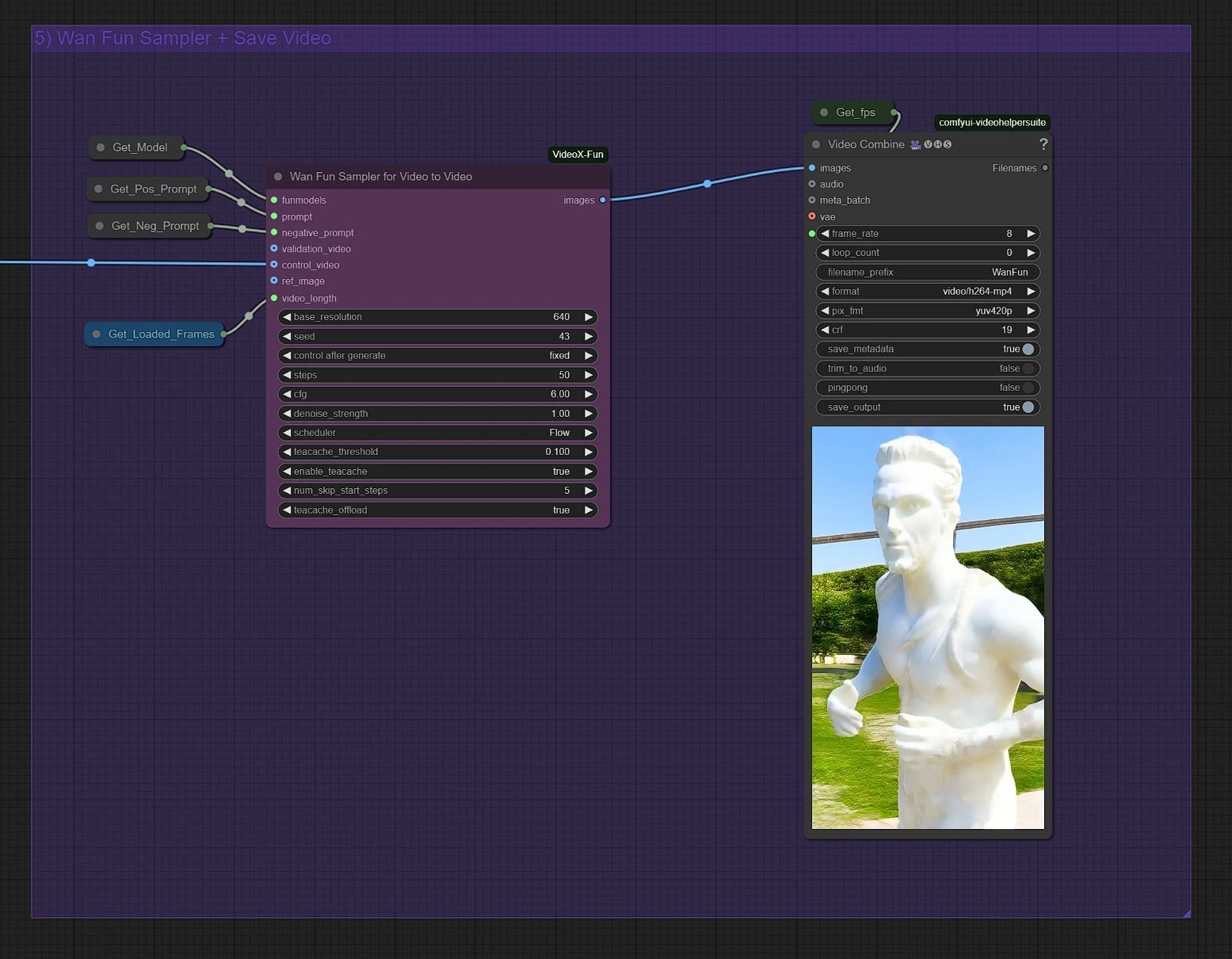

5 - Wan Fun Sampler + Save Output

The Wan 2.1 Fun Sampler is tuned for clarity and creative consistency.

Customize the configuration if needed. Outputs are automatically saved to the designated folder.

Acknowledgement

The Wan 2.1 Fun workflow was developed by and , whose contributions to AI-based motion control and stylization made advanced ControlNet integration possible. This project applies the expressive depth of the Wan 2.1 Fun models alongside Depth, Canny, and OpenPose inputs to enable compositional and dynamic precision in AI-assisted visual production.

We thank them for making these tools available to the creative community.