Wan2.1 LoRA

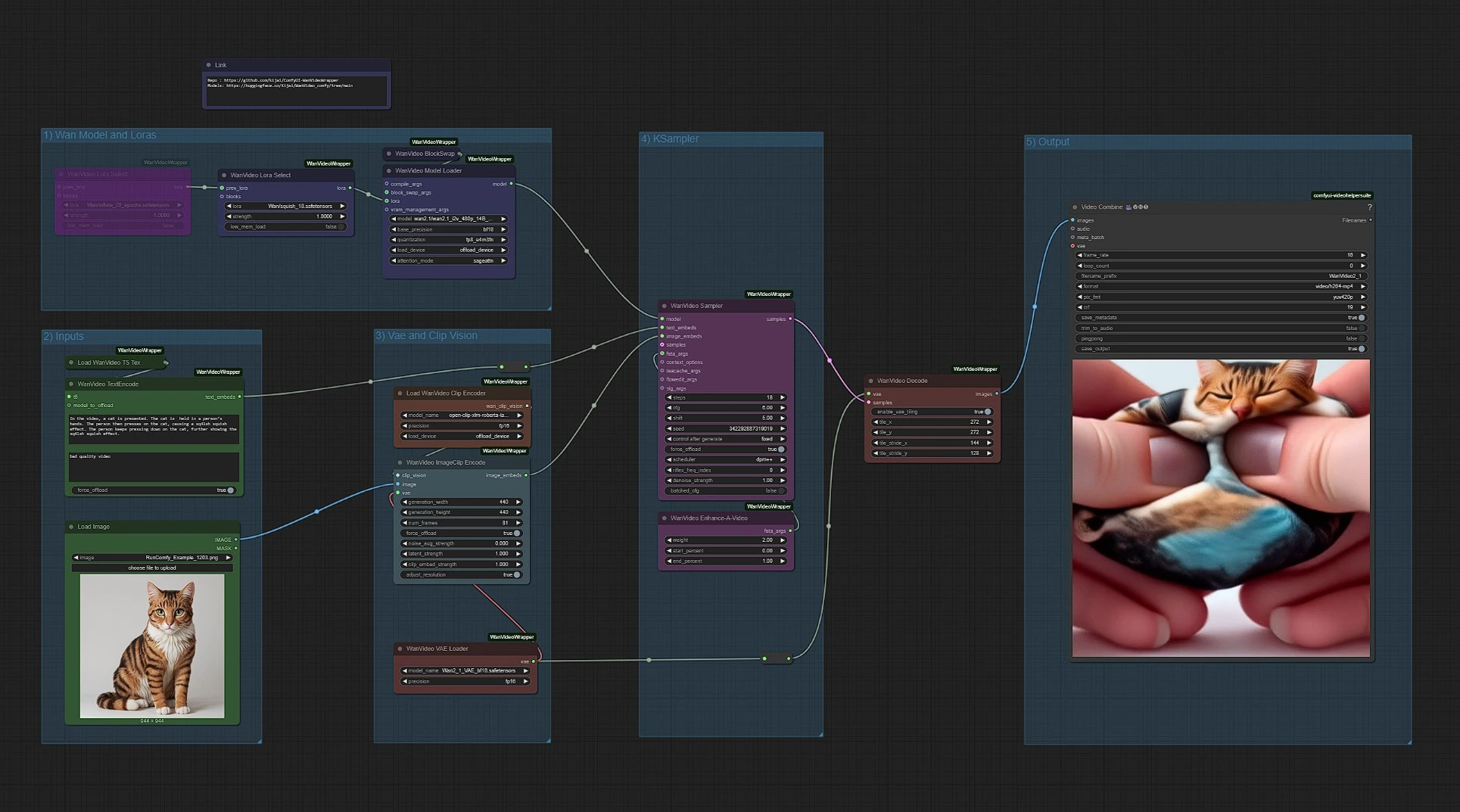

Wan2.1 LoRA extends the capabilities of the Wan2.1 video model by allowing users to apply pre-trained LoRA models for refined style and artistic customization. Whether for AI-driven animations, cinematic sequences, or creative projects, this workflow provides greater control and flexibility over video generation.

ComfyUI Workflow

- Fully operational workflows

- No missing nodes or models

- No manual setups required

- Features stunning visuals

ComfyUI Description

Wan2.1 LoRA enhances the base Wan2.1 model with fine-tuned control over motion dynamics, artistic styles, and specialized video outputs at low computational cost, since each LoRA adds only small low-rank weight updates on top of the frozen base model. Across Text-to-Video, Image-to-Video, and Video Editing, LoRAs let users personalize generation results while keeping Wan2.1’s high fidelity. Training a LoRA updates only these small adapter weights, so it needs far less VRAM than full fine-tuning, and creators can adapt Wan2.1 LoRA for cinematic, stylized, or domain-specific video projects with greater precision and flexibility.

The Wan2.1 LoRA workflow enhances video generation by allowing users to apply fine-tuned LoRA models for greater control over motion, style, and consistency. Instead of relying solely on text or image inputs, users can load specialized Wan2.1 LoRAs to refine visual aesthetics, maintain character fidelity, or introduce unique artistic effects. This workflow streamlines customization, making it easier to achieve cohesive, high-quality video outputs while leveraging Wan2.1’s state-of-the-art capabilities.
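Under the hood, a LoRA does not replace the base weights; it adds a low-rank update to selected weight matrices. In the common formulation (an assumption here, since individual Wan2.1 LoRA files and loaders may scale differently), with LoRA strength $s$, rank $r$, and scaling constant $\alpha$:

```latex
W' = W + s \cdot \frac{\alpha}{r} \, B A,
\qquad B \in \mathbb{R}^{d_{\text{out}} \times r},\;
A \in \mathbb{R}^{r \times d_{\text{in}}},\;
r \ll \min(d_{\text{in}}, d_{\text{out}})
```

Only $A$ and $B$ are trained. For an illustrative layer with $d_{\text{in}} = d_{\text{out}} = 5120$ and $r = 32$, that is $r(d_{\text{in}} + d_{\text{out}}) = 32 \times 10240 = 327{,}680$ parameters instead of $5120^2 = 26{,}214{,}400$, about 1.25% of the full matrix, which is why LoRA files are small and cheap to train.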

How to Use the Wan2.1 LoRA Workflow

Nodes are color-coded for clarity:

- Green - Inputs (LoRA, image, and prompt)

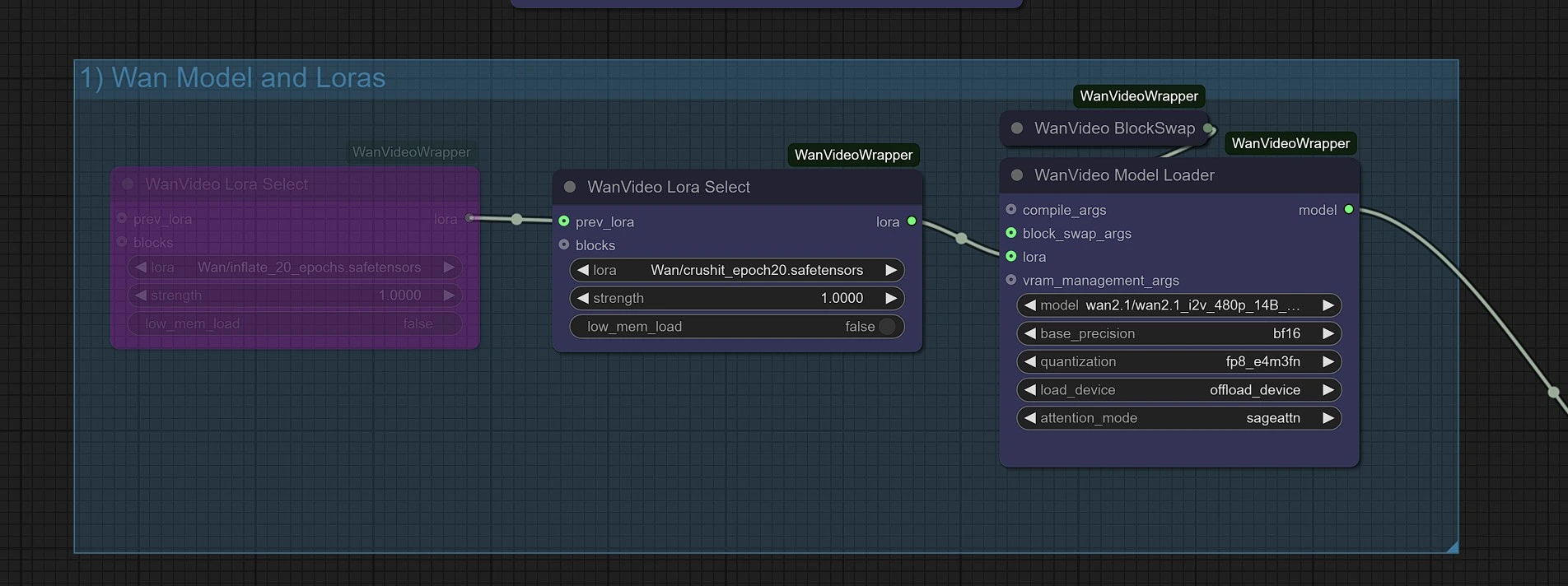

- Purple - Wan2.1 Models and LoRA

- Pink - Wan2.1 Sampler

- Red - VAE + Decoding

- Grey - Output Video

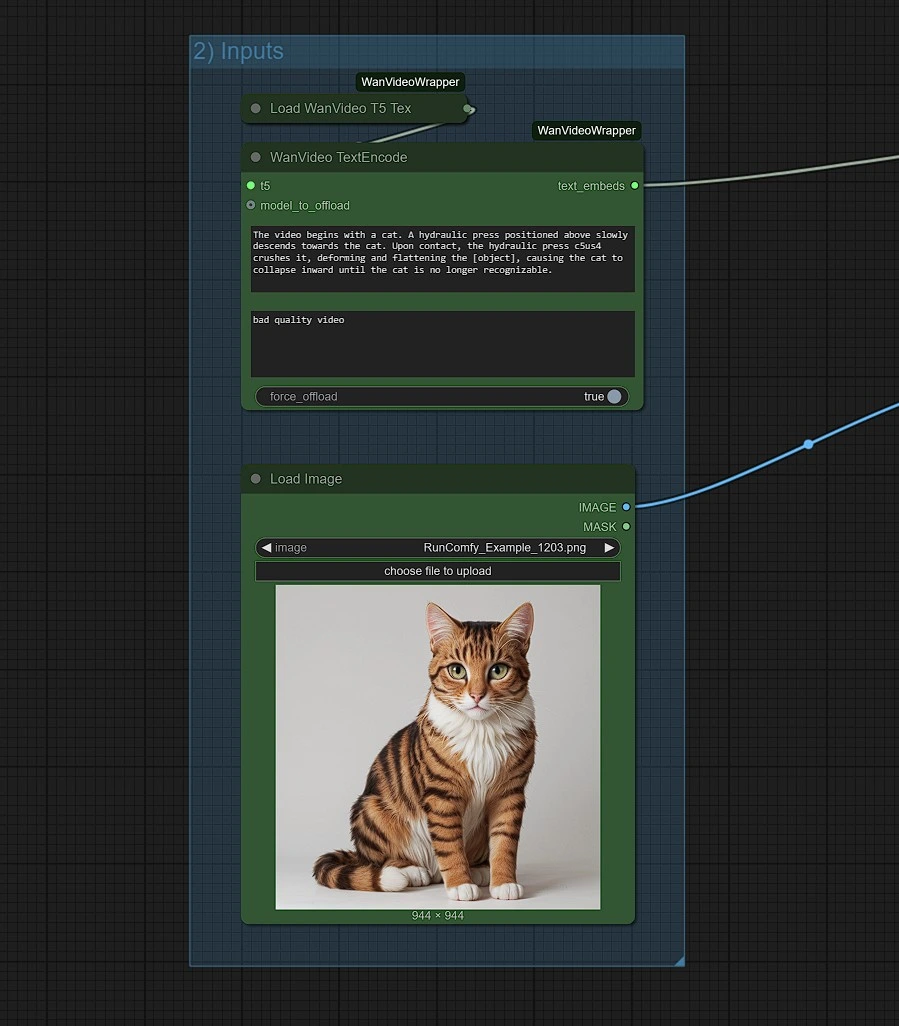

Enter your positive prompt, upload an image, and load any Wan2.1 LoRA you want in the green nodes. Then adjust video settings, such as duration and resolution, in the cyan blue node in the CLIP Vision group.

Input (Wan2.1 LoRA, Image and Text)

- Download your Wan2.1 LoRA models from the Civitai website and place them in ComfyUI > models > loras > Wan to keep them organized. Refresh the browser to see them in the WanVideo Lora Select list.

- Enter your desired prompt in the text box, including the LoRA's trigger words, to activate the Wan2.1 LoRA.
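If you manage files by script rather than by hand, the folder step can be sketched as below. This assumes ComfyUI's default layout (`ComfyUI/models/loras`); the helper names `install_lora` and `list_loras` and the file name are hypothetical, and the listing only approximates how subfolder names appear in a LoRA dropdown.

```python
# Sketch: file a downloaded Wan2.1 LoRA under ComfyUI's LoRA folder.
# Assumes ComfyUI's default layout (ComfyUI/models/loras); helper names are hypothetical.
import shutil
import tempfile
from pathlib import Path


def install_lora(downloaded_file, comfyui_root):
    """Copy a LoRA file into <comfyui_root>/models/loras/Wan and return its new path."""
    target_dir = Path(comfyui_root) / "models" / "loras" / "Wan"
    target_dir.mkdir(parents=True, exist_ok=True)  # create the Wan subfolder if missing
    target = target_dir / Path(downloaded_file).name
    shutil.copy2(downloaded_file, target)  # copy file contents and metadata
    return target


def list_loras(comfyui_root):
    """List .safetensors files relative to models/loras, e.g. 'Wan/name.safetensors'."""
    loras_dir = Path(comfyui_root) / "models" / "loras"
    return sorted(p.relative_to(loras_dir).as_posix() for p in loras_dir.rglob("*.safetensors"))


# Usage with a throwaway directory and a placeholder file (hypothetical name).
with tempfile.TemporaryDirectory() as tmp:
    downloaded = Path(tmp) / "my_wan_style.safetensors"
    downloaded.write_bytes(b"")  # stand-in for a real LoRA download
    root = Path(tmp) / "ComfyUI"
    install_lora(downloaded, root)
    print(list_loras(root))  # → ['Wan/my_wan_style.safetensors']
```

Keeping Wan LoRAs in their own subfolder separates them from image-model LoRAs, which are not compatible with Wan2.1.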

You can chain multiple video LoRAs by duplicating the WanVideo Lora Select node and connecting the copies, just as you would chain LoRA loaders for standard Stable Diffusion models.
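Conceptually, chaining LoRAs sums their scaled low-rank updates on the same base weights. The sketch below shows that common additive merge on tiny matrices in plain Python; `apply_lora_stack` and its dictionary keys are hypothetical, and this is not the WanVideo Lora Select node's actual code.

```python
# Hypothetical helper: stack several LoRAs on one weight matrix.
# Illustrates the common additive merge W' = W + sum_i s_i * (alpha_i / r_i) * B_i A_i;
# it is not the WanVideo Lora Select node's actual implementation.

def apply_lora_stack(weight, loras):
    """Return a new matrix with every LoRA's scaled low-rank update added.

    Each LoRA is a dict with hypothetical keys:
      "down": r x d_in matrix (A), "up": d_out x r matrix (B),
      "alpha": scaling constant, "strength": user-facing LoRA strength.
    """
    out = [row[:] for row in weight]  # copy so the base weights stay untouched
    for lora in loras:
        down, up = lora["down"], lora["up"]
        rank = len(down)
        scale = lora["strength"] * lora["alpha"] / rank
        for i in range(len(out)):
            for j in range(len(out[0])):
                out[i][j] += scale * sum(up[i][k] * down[k][j] for k in range(rank))
    return out


base = [[1.0, 0.0], [0.0, 1.0]]
style = {"down": [[1.0, 0.0]], "up": [[1.0], [0.0]], "alpha": 1.0, "strength": 0.5}
motion = {"down": [[0.0, 1.0]], "up": [[0.0], [2.0]], "alpha": 1.0, "strength": 0.25}
print(apply_lora_stack(base, [style, motion]))  # → [[1.5, 0.0], [0.0, 1.5]]
print(apply_lora_stack(base, [motion, style]))  # same result: the updates simply add up
```

Under this formulation the chain order does not matter (up to floating-point rounding), but the strengths do: two strong LoRAs acting on the same weights can overshoot, so lowering each one's strength is often the first thing to try.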

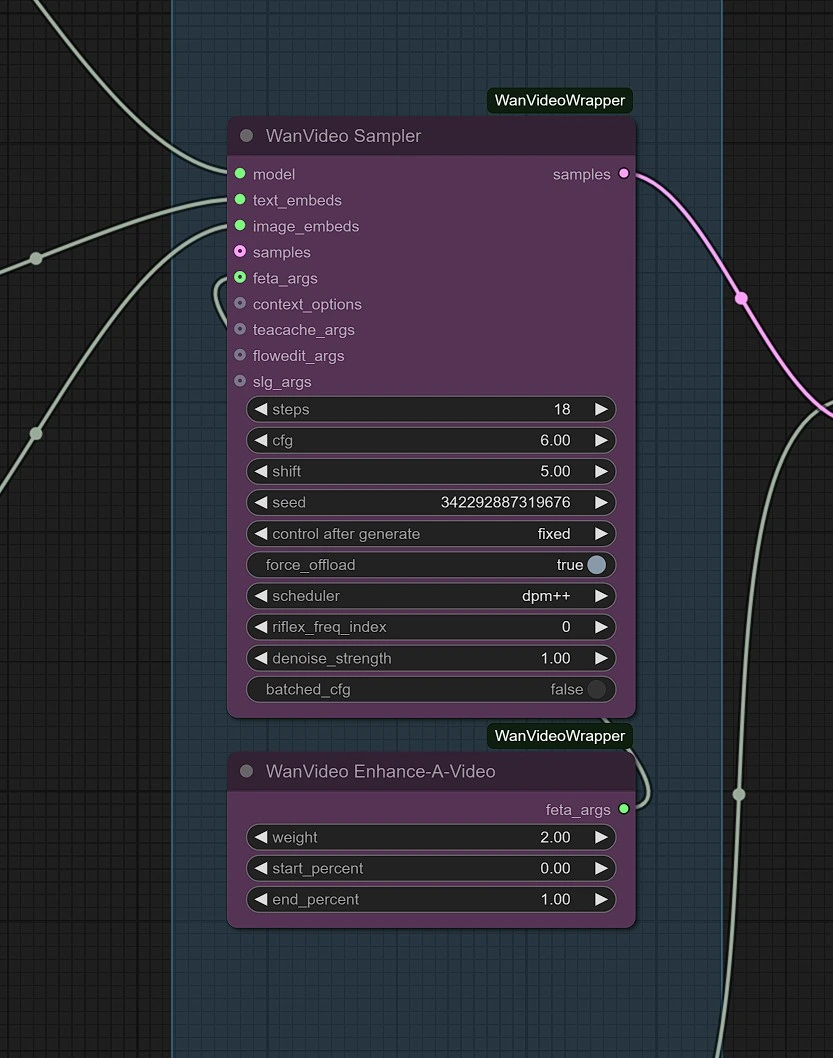

Sampler

- steps: 20-30 (higher values improve quality but increase time)

- cfg: 6.0 (controls prompt adherence strength)

- scheduler: "simple" (determines noise scheduling approach)

- sampler_name: "uni_pc" (recommended sampler for Wan 2.1)
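The `cfg` value is the classifier-free guidance scale. In the standard formulation, each sampling step produces one prediction with the prompt and one without it (or with the negative prompt), and the sampler blends them as below, so higher values push the result further toward the prompt. Because two predictions are typically needed per step, guidance above 1.0 roughly doubles the model work per step. This is the textbook formula on toy numbers, not code taken from the WanVideo sampler.

```python
# Textbook classifier-free guidance (CFG) on plain lists of numbers.
# A sketch of the general formula, not the WanVideo sampler's actual code.

def cfg_combine(cond, uncond, cfg):
    """Blend predictions: uncond + cfg * (cond - uncond), element by element."""
    return [u + cfg * (c - u) for c, u in zip(cond, uncond)]


cond = [1.0, 0.5]     # toy prediction with the prompt
uncond = [0.75, 0.5]  # toy prediction without the prompt (or with the negative prompt)
print(cfg_combine(cond, uncond, 1.0))  # → [1.0, 0.5]  (cfg 1.0 returns the conditional prediction)
print(cfg_combine(cond, uncond, 6.0))  # → [2.25, 0.5] (the prompt-driven difference is amplified 6x)
```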

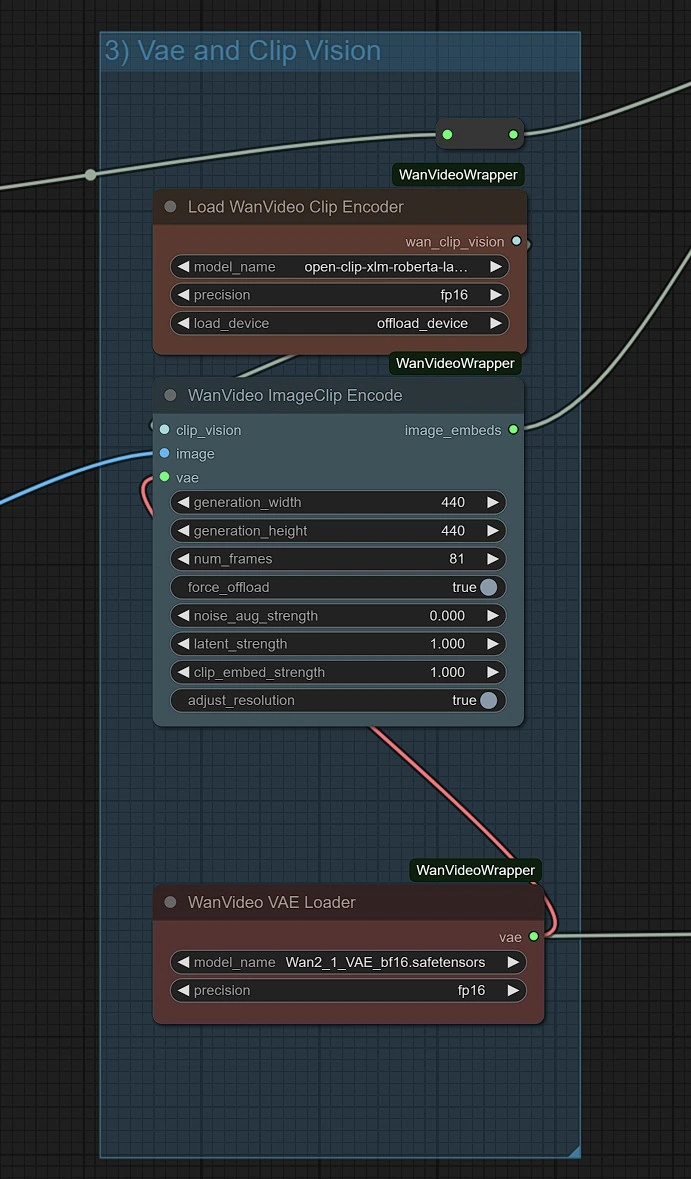

Set Resolution, VAE and CLIP Vision

In this group, the VAE and CLIP Vision models download automatically on the first run. Allow 3-5 minutes for the download to complete in your temporary storage.

Links for Wan2.1 Base Models: https://huggingface.co/Kijai/WanVideo_comfy/tree/main

In the cyan blue node, you can set the video’s frame duration and resolution.
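When choosing duration and resolution, it can help to know which values map cleanly onto the model's latent grid. The sketch below assumes Wan2.1's published design: 16 fps output, a VAE that compresses time by 4x and height/width by 8x, and 2x2 transformer patches. Under those assumptions, frame counts of the form 4k + 1 (for example 81) and sizes divisible by 16 fit without rounding. The helper names are hypothetical, and the cyan blue node may round values its own way.

```python
# Sketch: choose Wan2.1-friendly frame counts and resolutions.
# The constants below are assumptions based on Wan2.1's published design
# (16 fps output, VAE with 4x temporal and 8x spatial compression, 2x2 patches);
# the workflow's own node may round these values differently.
import math

WAN_FPS = 16          # assumed output frame rate
TEMPORAL_STRIDE = 4   # assumed VAE time compression
SPATIAL_STRIDE = 8    # assumed VAE height/width compression
SIZE_MULTIPLE = 16    # assumed: spatial stride 8 x patch size 2


def frames_for_duration(seconds, fps=WAN_FPS):
    """Smallest frame count of the form 4k + 1 that covers `seconds` of video."""
    raw = max(1, math.ceil(seconds * fps))
    k = math.ceil((raw - 1) / TEMPORAL_STRIDE)
    return TEMPORAL_STRIDE * k + 1


def snap_resolution(width, height):
    """Round width and height down to the nearest multiple of SIZE_MULTIPLE."""
    def snap(v):
        return max(SIZE_MULTIPLE, v // SIZE_MULTIPLE * SIZE_MULTIPLE)
    return snap(width), snap(height)


def latent_shape(num_frames, width, height):
    """(latent_frames, latent_height, latent_width) after VAE compression."""
    return ((num_frames - 1) // TEMPORAL_STRIDE + 1,
            height // SPATIAL_STRIDE,
            width // SPATIAL_STRIDE)


frames = frames_for_duration(5)          # 5 seconds at 16 fps
width, height = snap_resolution(850, 490)
print(frames, (width, height), latent_shape(frames, width, height))
# → 81 (848, 480) (21, 60, 106)
```

Longer clips and larger frames grow the latent proportionally, so generation time and VRAM rise with both settings.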

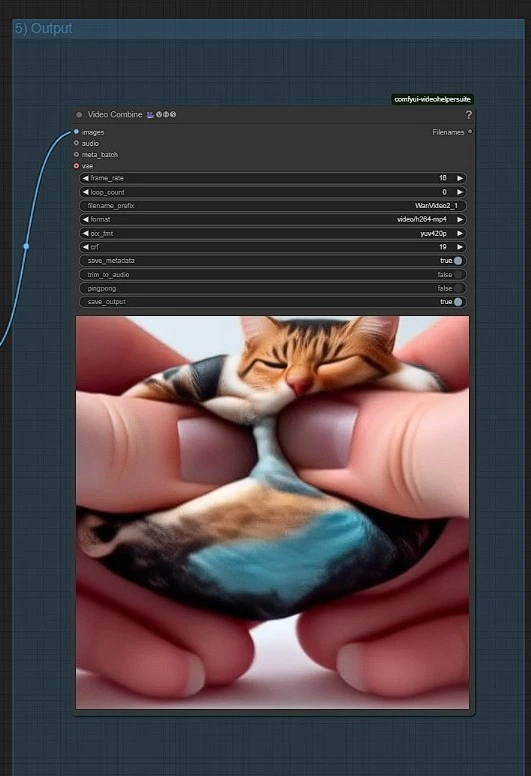

Outputs

The rendered Wan2.1 video is saved to the output folder in ComfyUI.

Unlock the full potential of Wan2.1 LoRA and bring your video creations to life with enhanced style, consistency, and control. Whether you're crafting cinematic sequences, AI-driven animations, or artistic storytelling, Wan2.1 LoRA empowers you to push the boundaries of video generation. Experiment with different LoRA models, refine your visuals, and create stunning, high-quality videos with ease. Try it today and elevate your Wan2.1 video generation workflow to new heights!