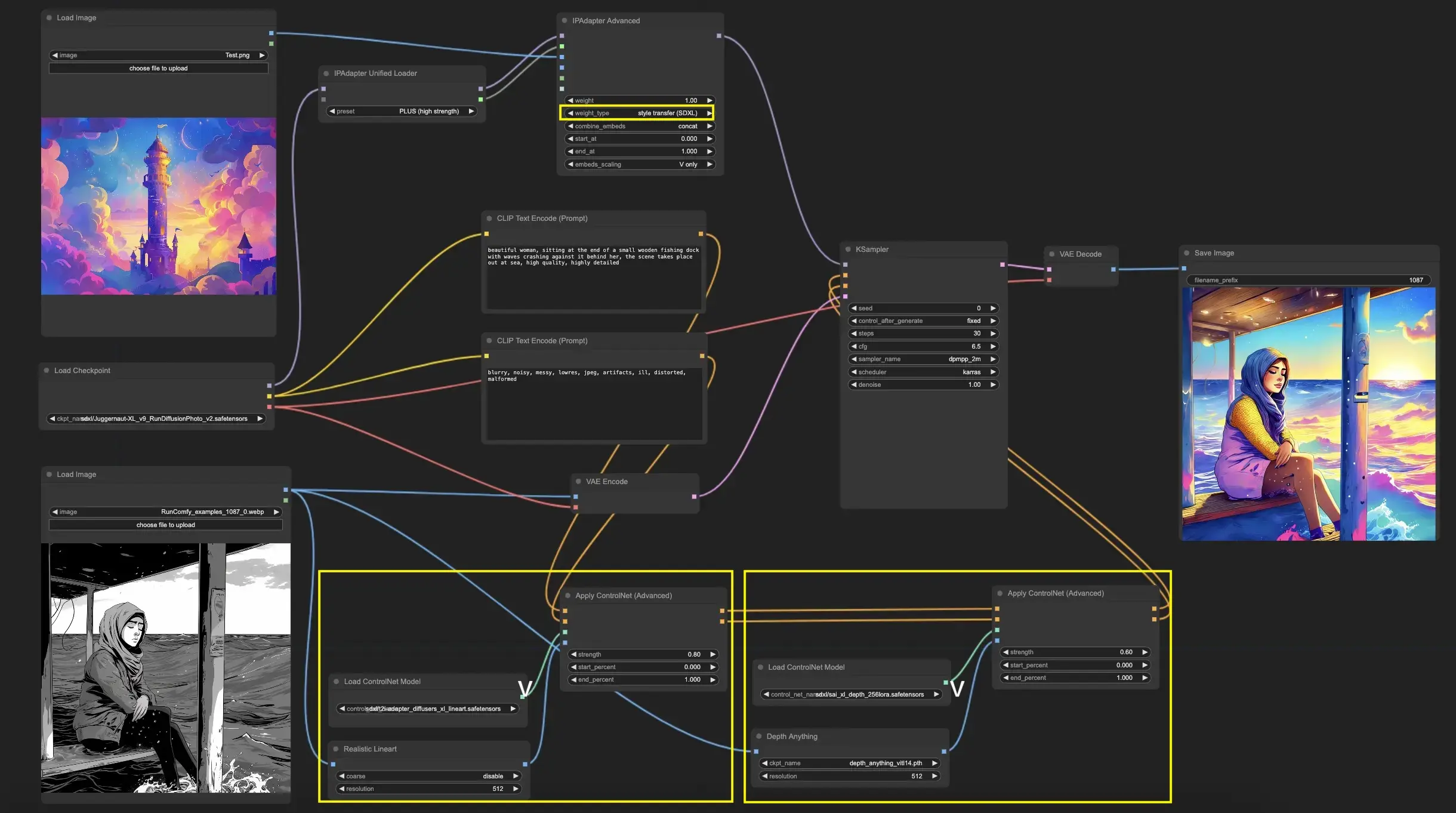

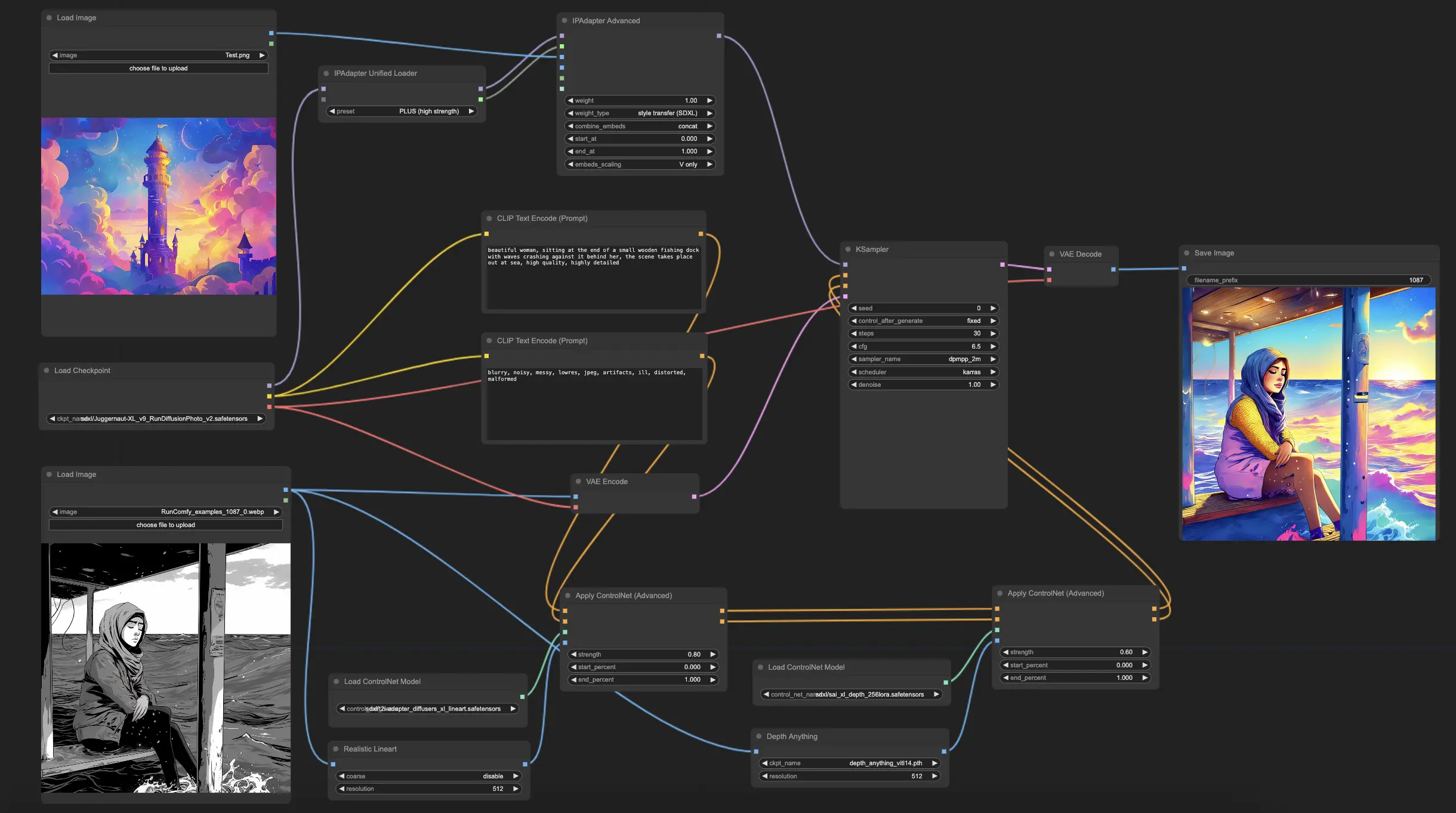

IPAdapter Plus (V2) | One-Image Style Transfer

Explore the integration of IPAdapter Plus (IPAdapter V2) and ControlNet in your style transfer projects. This workflow unlocks the potential of IPAdapter Plus for captivating style transfers, while ControlNet ensures that your output image retains the essence of your input image. Together, they simplify the process of achieving reliable and visually striking style adaptations.

ComfyUI IPAdapter Plus (Style Transfer) Workflow

- Fully operational workflows

- No missing nodes or models

- No manual setups required

- Features stunning visuals

ComfyUI IPAdapter Plus (Style Transfer) Examples

ComfyUI IPAdapter Plus (Style Transfer) Description

1. ComfyUI IPAdapter Plus Workflow for Style Transfer

IPAdapter Plus can significantly enhance your style transfer projects. Matteo, the creator of the ComfyUI IPAdapter Plus extension, shares a detailed tutorial to help you set up and use IPAdapter Plus to infuse various artistic styles into your images. You can check out Matteo’s YouTube channel.

In this workflow, you'll have fun learning how to perform style transfers using just one reference image with IPAdapter Plus (IPAdapter V2). You can experiment with IPAdapter Plus and apply different types of ControlNets, so feel free to choose the ControlNet model that best fits your input image. A key part of mastering this workflow is balancing two settings: the IPAdapter "weight", which controls how strongly the reference style is applied, and the ControlNet "strength", which controls how closely the output follows the structure of your input image. Give it a go and see how IPAdapter Plus can transform your image into another incredible style using only one image reference!

Here’s a guide on

2. Introduction to IPAdapter Plus

IPAdapter Plus, also known as IPAdapter V2, marks a substantial upgrade over its predecessor, specifically designed to enhance image-to-image conditioning within ComfyUI. This advanced node is adept at incorporating styles and elements from reference images directly into your projects, simplifying intricate imaging operations. With IPAdapter Plus, you can easily integrate style transfers and utilize its robust model options, significantly enhancing your capabilities in creative image generation and manipulation. This makes IPAdapter Plus an invaluable asset for anyone looking to elevate their imaging work.

3. How to use ComfyUI IPAdapter Plus for Style Transfer

Firstly, it's important to highlight that the IPAdapter Plus Style Transfer is specifically designed for compatibility with the SDXL model. To begin, select the SDXL model as the checkpoint model and also adjust the "weight type" in the "IPAdapter Advanced" node to "Style Transfer (SDXL)". You can experiment with different "weight" values to get different results from IPAdapter’s effects. The input image for IPAdapter Plus should be the reference image whose style you aim to emulate; you can pick any image that captivates you with its style.
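The settings described above can be sketched as a fragment of a ComfyUI API-format workflow, written here as a Python dict. This is a minimal sketch under stated assumptions, not the workflow shipped with this page: the node ids, the `IPAdapterAdvanced` class name, and the input names are assumptions about how the "IPAdapter Advanced" node appears in an exported workflow, and they may differ in your installed version. The weight type label is copied from the text above; use the exact spelling shown in your node's dropdown.

```python
# Sketch only: node ids, class name and input names are assumptions,
# not taken from the workflow on this page.

# Label as written in the text above; copy the exact spelling from your node.
STYLE_WEIGHT_TYPE = "Style Transfer (SDXL)"


def ipadapter_style_node(weight, model_id="1", ipadapter_id="2", image_id="3"):
    """Return an API-format entry for an "IPAdapter Advanced" node.

    Only the inputs discussed in the text are filled in; the real node
    has further inputs that a complete workflow must also provide.
    """
    return {
        "class_type": "IPAdapterAdvanced",  # assumed class name
        "inputs": {
            # A link is written as [source node id, output slot index].
            "model": [model_id, 0],          # SDXL checkpoint (hypothetical id)
            "ipadapter": [ipadapter_id, 0],  # IPAdapter model loader (hypothetical id)
            "image": [image_id, 0],          # the style reference image
            "weight": float(weight),
            "weight_type": STYLE_WEIGHT_TYPE,
        },
    }


def weight_variants(weights):
    """Build one node entry per weight value, for side-by-side comparison."""
    return [ipadapter_style_node(w) for w in weights]


variants = weight_variants([0.5, 0.8, 1.0])
print([v["inputs"]["weight"] for v in variants])
```

Generating one variant per weight makes it easier to compare how strongly the reference style comes through before settling on a value.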

The second part is to utilize ControlNet models to ensure the consistency and precision of the output image with your input image. The choice of ControlNet models depends on the characteristics of your input image. For a comprehensive guide on selecting and using different ControlNet models, please refer to the . It is also crucial to adjust the "strength" of each ControlNet to achieve the desired stylistic effect.
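Adjusting the strength of each ControlNet can be sketched as a small patch over an exported API-format workflow. This is an illustrative sketch only: the class names `ControlNetApply` and `ControlNetApplyAdvanced`, the `strength` input name, and the node ids in the toy workflow are assumptions about your exported graph, so check them against your own export before relying on this.

```python
import copy

# Assumed class names of the ControlNet apply nodes in the exported workflow.
CONTROLNET_APPLY_TYPES = {"ControlNetApply", "ControlNetApplyAdvanced"}


def with_controlnet_strengths(workflow, strengths):
    """Return a copy of the workflow with per-node ControlNet strengths applied.

    `strengths` maps a node id to the new strength for that ControlNet.
    """
    patched = copy.deepcopy(workflow)  # leave the caller's workflow untouched
    for node_id, strength in strengths.items():
        node = patched[node_id]
        if node.get("class_type") not in CONTROLNET_APPLY_TYPES:
            raise ValueError(f"node {node_id} is not a ControlNet apply node")
        node["inputs"]["strength"] = float(strength)
    return patched


# Toy workflow with hypothetical node ids and only the relevant fields.
toy_workflow = {
    "10": {"class_type": "ControlNetApplyAdvanced", "inputs": {"strength": 1.0}},
    "11": {"class_type": "ControlNetApplyAdvanced", "inputs": {"strength": 1.0}},
    "12": {"class_type": "KSampler", "inputs": {"steps": 20}},
}

softer = with_controlnet_strengths(toy_workflow, {"10": 0.6, "11": 0.4})
print(softer["10"]["inputs"]["strength"], softer["11"]["inputs"]["strength"])
```

Working on a copy keeps the original export intact, so several strength combinations can be derived from the same starting workflow.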

Finally, crafting the appropriate prompt is essential for refining the style transfer process. If the results from IPAdapter Plus are not satisfactory, adjusting the prompt can be an effective way to guide the outcome more precisely. Consider leveraging the prompt as a strategic method to refine and control the style transfer process.
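Trying several prompts against the same settings can be sketched the same way. This is a hedged sketch, assuming the positive prompt lives in a `CLIPTextEncode` node with a `text` input; the node id `"6"` and the example prompts are hypothetical, and an SDXL workflow may use a different text-encode node with different input names.

```python
import copy


def with_prompt(workflow, node_id, text):
    """Return a copy of the workflow whose text-encode node uses the new prompt."""
    patched = copy.deepcopy(workflow)  # keep the original workflow unchanged
    node = patched[node_id]
    if node.get("class_type") != "CLIPTextEncode":  # assumed class name
        raise ValueError(f"node {node_id} is not a CLIPTextEncode node")
    node["inputs"]["text"] = text
    return patched


# Toy workflow with a hypothetical node id and only the relevant fields.
toy_workflow = {
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": "a portrait"}},
}

# Hypothetical prompt variations to compare against the same style reference.
prompts = ["a portrait, watercolor painting", "a portrait, bold ink linework"]
prompt_variants = [with_prompt(toy_workflow, "6", p) for p in prompts]
print([v["6"]["inputs"]["text"] for v in prompt_variants])
```

Keeping the IPAdapter weight and ControlNet strength fixed while only the prompt changes makes it clearer which differences in the output come from the prompt.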