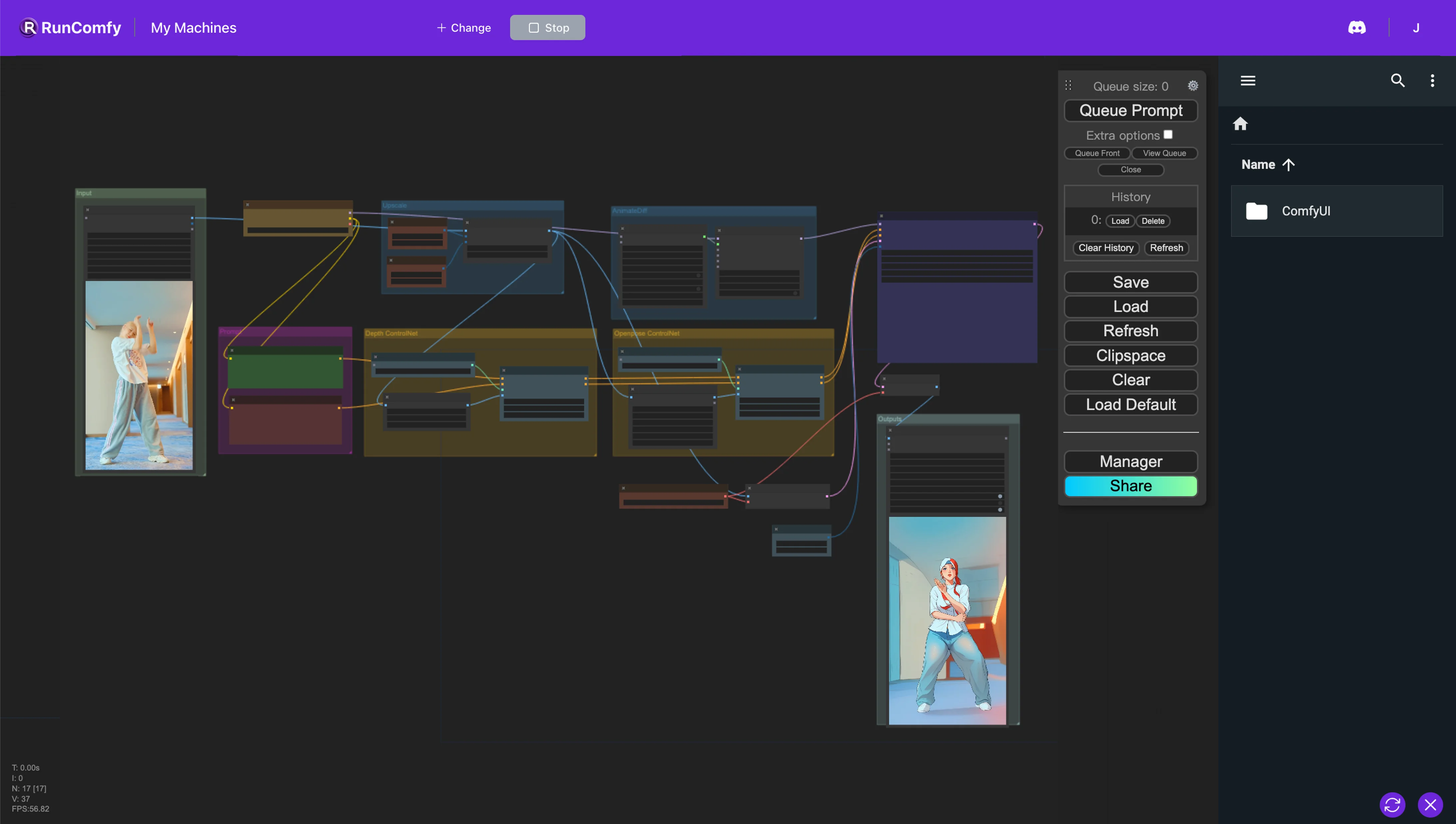

AnimateDiff + ControlNet | Cartoon Style

In this ComfyUI workflow, we use nodes such as AnimateDiff and ControlNet (with Depth and OpenPose) to transform an original video into an animated style. The style is heavily influenced by the checkpoint model, so feel free to experiment with different checkpoints to achieve different styles.

ComfyUI video2video (Cartoon Style) Workflow

- Fully operational workflows

- No missing nodes or models

- No manual setups required

- Features stunning visuals

ComfyUI video2video (Cartoon Style) Description

1. ComfyUI Workflow: AnimateDiff + ControlNet | Cartoon Style

This ComfyUI workflow restyles video by combining AnimateDiff and ControlNet within the Stable Diffusion framework. AnimateDiff extends conventional text-to-image models so that text prompts produce dynamic video rather than single images. ControlNet, in turn, uses reference images or videos to guide the motion of the generated content, so that the output closely follows the movement in the reference. By combining AnimateDiff's text-to-video generation with ControlNet's detailed movement control, the workflow produces restyled video that preserves the motion of the original footage.
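To make the data flow easier to picture, here is a minimal Python sketch of how the stages might depend on one another. It is not the workflow's actual node graph: every stage name is a hypothetical label chosen for illustration, and the order in which the Depth and OpenPose ControlNets are chained is an assumption. The sketch only shows that the control maps come from the source video, the conditioning passes through both ControlNets, and sampling waits on both the motion module and the conditioning.

```python
# Illustrative dependency sketch only. Stage names are hypothetical labels,
# not the node classes used in the actual ComfyUI workflow.
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each key is a stage; each value lists the stages whose output it consumes.
GRAPH = {
    "load_video_frames": [],
    "load_checkpoint": [],
    "encode_prompt": ["load_checkpoint"],
    "depth_maps": ["load_video_frames"],
    "pose_maps": ["load_video_frames"],
    # Assumed chaining order: Depth first, then OpenPose.
    "apply_depth_controlnet": ["encode_prompt", "depth_maps"],
    "apply_openpose_controlnet": ["apply_depth_controlnet", "pose_maps"],
    "inject_animatediff_motion": ["load_checkpoint"],
    "sample_frames": ["inject_animatediff_motion", "apply_openpose_controlnet"],
    "decode_and_combine_video": ["sample_frames"],
}


def execution_order(graph):
    """Return one valid order in which the stages can run."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for step, name in enumerate(execution_order(GRAPH), start=1):
        print(step, name)
```

Swapping the checkpoint changes only the `load_checkpoint` stage in this picture, which is consistent with the style being driven mainly by the checkpoint while the motion guidance stays the same.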

2. Overview of AnimateDiff

Please check out the details on

3. Overview of ControlNet

Please check out the details on