IC-Light | Image Relighting

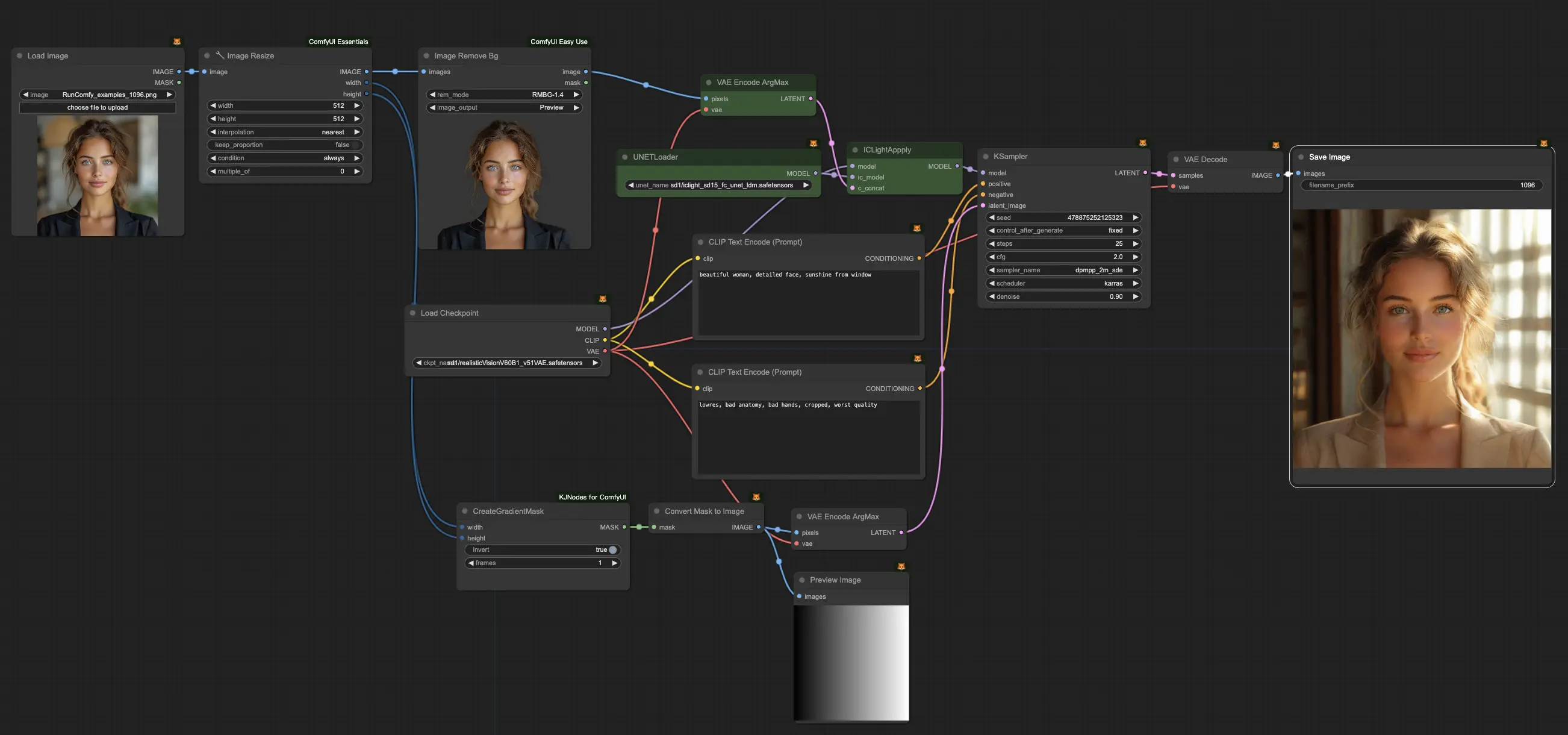

In this ComfyUI IC-Light workflow, you can easily relight your images. First, the background is removed from the original image. Then, guided by your prompt, the workflow generates a new image with new lighting and a new background while keeping the subject itself consistent.

ComfyUI IC-Light Workflow

- Fully operational workflows

- No missing nodes or models

- No manual setups required

- Features stunning visuals

ComfyUI IC-Light Description

1. What is IC-Light?

IC-Light ("Imposing Consistent Light") is an AI model for relighting images that works with Stable Diffusion 1.5 models. The foreground image is encoded into the Stable Diffusion latent space. That latent is used as extra conditioning while a new image is generated with the lighting you describe, and the result is decoded back into an image. This approach gives precise control over the lighting while preserving the identity and details of the original subject.

Two models have been released: a text-conditioned relighting model and a background-conditioned model. Both take a foreground image as input.

2. How IC-Light Works

Under the hood, IC-Light leverages the power of Stable Diffusion models to encode and decode images. The process can be broken down into the following steps:

2.1. Encoding: The foreground image (the original image with its background removed) is passed through the Stable Diffusion VAE (Variational Autoencoder) to obtain a compressed latent representation.

2.2. Conditioning: During sampling, this foreground latent is concatenated channel-wise with the noisy latent at the input of the UNet, so the model always sees the subject it must preserve. Your text prompt describes the new lighting, and for the background-conditioned model a background image latent is concatenated as well.

2.3. Decoding: The final denoised latent is passed through the VAE decoder to reconstruct the relit image.

By operating in the latent space, IC-Light can change the illumination while keeping the shape and details of the subject consistent.
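To make the concatenation step concrete, here is a toy sketch in plain Python. It assumes latents are nested lists shaped [channels][height][width] and uses Stable Diffusion 1.5's 4-channel latent space; the helper names are hypothetical. The point is only that the UNet input grows from 4 channels to 8 for the text-conditioned model (noisy latent plus foreground latent) and to 12 for the background-conditioned model (plus a background latent).

```python
from typing import List, Optional

# A latent is modelled as nested lists shaped [channels][height][width].
Latent = List[List[List[float]]]

SD15_LATENT_CHANNELS = 4  # Stable Diffusion 1.5 VAE latents have 4 channels


def zeros_latent(height: int, width: int, channels: int = SD15_LATENT_CHANNELS) -> Latent:
    """Create an all-zero latent with the given channel count and spatial size."""
    return [[[0.0] * width for _ in range(height)] for _ in range(channels)]


def concat_channels(*latents: Latent) -> Latent:
    """Stack latents along the channel axis; all must share height and width."""
    height, width = len(latents[0][0]), len(latents[0][0][0])
    for lat in latents:
        if len(lat[0]) != height or len(lat[0][0]) != width:
            raise ValueError("all latents must share the same spatial size")
    # Channel-wise concatenation is just joining the outer (channel) lists.
    return [channel for lat in latents for channel in lat]


def unet_input(noisy: Latent, foreground: Latent, background: Optional[Latent] = None) -> Latent:
    """Hypothetical helper: noisy latent + foreground latent (+ background latent)."""
    parts = [noisy, foreground]
    if background is not None:  # background-conditioned model only
        parts.append(background)
    return concat_channels(*parts)


# A 512x512 image becomes a 64x64 latent (the SD VAE downsamples by 8).
noisy, fg, bg = zeros_latent(64, 64), zeros_latent(64, 64), zeros_latent(64, 64)

text_conditioned = unet_input(noisy, fg)
background_conditioned = unet_input(noisy, fg, bg)
print(len(text_conditioned), len(background_conditioned))  # 8 12
```

The text prompt is not part of this tensor: it reaches the UNet separately, through cross-attention, exactly as in ordinary Stable Diffusion sampling.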

3. How to Use ComfyUI IC-Light

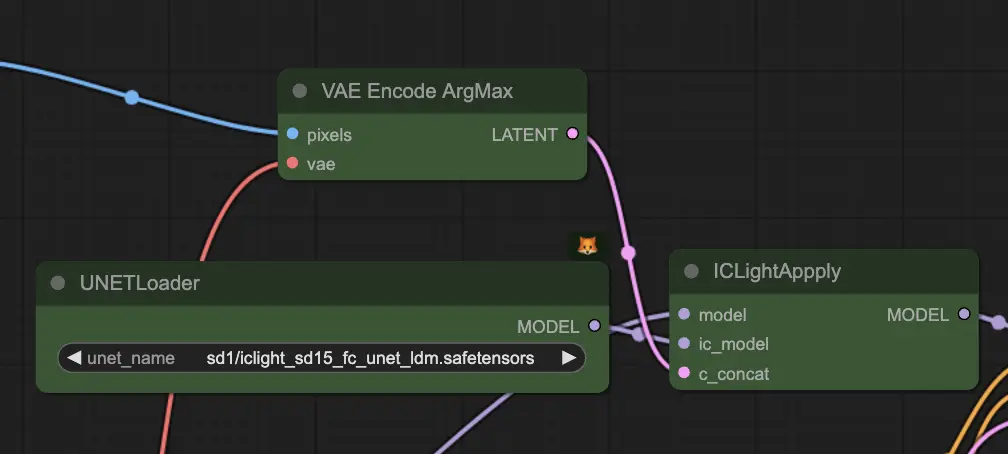

The main node you'll be working with is the "IC-Light Apply" node, which patches your Stable Diffusion model with the IC-Light weights so that sampling is conditioned on your foreground image.

3.1. "IC-Light Apply" Input Parameters:

The "IC-Light Apply" node requires three main inputs:

- model: This is the base Stable Diffusion 1.5 model that IC-Light will patch. The patched model is then used for sampling; encoding and decoding are handled by the VAE.

- ic_model: This is the pre-trained IC-Light model that contains the weights used for relighting.

- c_concat: This is a latent encoding of your foreground image (the original image with everything outside the mask greyed out). The patched model uses it as extra conditioning so that the subject stays consistent while the lighting changes.

To create the c_concat input:

- Use the ICLightApplyMaskGrey node to prepare your foreground. This node takes your original image and a mask as input, and it outputs a version of the image where the areas outside the mask are greyed out.

- Use the VAEEncodeArgMax node to encode that image. This node uses the most probable latent representation of the image instead of a random sample, so the encoding is deterministic.

- Connect the resulting latent to the c_concat input of the "IC-Light Apply" node.

Your lighting prompts are not part of c_concat: write them in the usual positive and negative prompt nodes and connect them to the sampler as conditioning.
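The two preprocessing nodes can be illustrated in plain Python. This is a sketch of the idea, not the nodes' source code: it assumes RGB pixels in [0, 1], a binary mask where 1 marks the foreground, a mid-grey fill value of 0.5, and a toy VAE posterior given as per-element means and standard deviations. The "arg max" of a Gaussian posterior is its mean, so the deterministic encoding returns the mean instead of drawing a random sample.

```python
import random
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB in [0, 1]
Image = List[List[Pixel]]           # [height][width]
Mask = List[List[int]]              # 1 = foreground, 0 = background

GREY = 0.5  # assumed mid-grey fill value


def apply_mask_grey(image: Image, mask: Mask) -> Image:
    """Keep foreground pixels and replace everything outside the mask with grey."""
    return [
        [pix if m else (GREY, GREY, GREY) for pix, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def encode_sample(mean: List[float], std: List[float], rng: random.Random) -> List[float]:
    """Stochastic encoding: draw z = mean + std * eps from the Gaussian posterior."""
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mean, std)]


def encode_argmax(mean: List[float], std: List[float]) -> List[float]:
    """Deterministic encoding: the most probable z of a Gaussian is its mean (std unused)."""
    return list(mean)


# A 2x2 image: the left column is the subject, the right column is background.
image = [[(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
         [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]]
mask = [[1, 0],
        [1, 0]]
print(apply_mask_grey(image, mask)[0])  # [(1.0, 0.0, 0.0), (0.5, 0.5, 0.5)]

# Toy posterior for a 3-element latent.
mean, std = [0.2, -0.1, 0.4], [0.05, 0.05, 0.05]
print(encode_argmax(mean, std))                    # [0.2, -0.1, 0.4] on every call
print(encode_sample(mean, std, random.Random(0)))  # changes with the seed
```

Using the mean keeps the foreground latent identical across runs, which matters because the subject is supposed to stay fixed while only the lighting varies.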

3.2. "IC-Light Apply" Output Parameters:

After processing your inputs, the "IC-Light Apply" node produces a single output:

- model: This is the patched Stable Diffusion model with the IC-Light modifications applied.
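Conceptually, "patching" means wrapping the model's denoising call so that every step also sees the foreground latent. The sketch below is a hypothetical illustration of that idea, not the node's actual implementation: `toy_unet` stands in for the IC-Light UNet, latents are reduced to one value per channel, and `patch_with_c_concat` is an invented helper name.

```python
from typing import Callable, List

Channels = List[float]  # one value per channel keeps the toy small
Denoiser = Callable[[Channels, float], Channels]


def patch_with_c_concat(denoise: Denoiser, c_concat: Channels) -> Denoiser:
    """Return a new denoiser that appends c_concat to its input on every call."""

    def patched(noisy: Channels, sigma: float) -> Channels:
        return denoise(noisy + c_concat, sigma)

    return patched


def toy_unet(x: Channels, sigma: float) -> Channels:
    """Stand-in for an IC-Light UNet: expects 8 input channels, returns 4 (sigma unused)."""
    if len(x) != 8:
        raise ValueError(f"expected 8 input channels, got {len(x)}")
    noisy, fg = x[:4], x[4:]
    # Pretend prediction: move the noisy latent halfway toward the foreground latent.
    return [n + (f - n) * 0.5 for n, f in zip(noisy, fg)]


foreground_latent = [1.0, 1.0, 1.0, 1.0]
model = patch_with_c_concat(toy_unet, foreground_latent)
print(model([0.0, 0.0, 0.0, 0.0], sigma=1.0))  # [0.5, 0.5, 0.5, 0.5]
```

Because the sampler only ever calls a function that takes an ordinary 4-channel latent, it can drive the patched model without knowing about the extra channels.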

To generate your final relit image, connect the patched model to a KSampler node together with your positive and negative prompts, then decode the sampled latent with a VAEDecode node.
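The wiring can be summarised as a dependency graph. The sketch below uses Python's standard `graphlib` module to compute one valid execution order. The edge list is an illustrative assumption about a typical text-conditioned setup, not an export of this workflow: `LoadImage`, `CheckpointLoaderSimple`, `CLIPTextEncode` and `EmptyLatentImage` are ComfyUI's usual core nodes, and `BackgroundRemoval` is a hypothetical placeholder for whichever node produces the mask.

```python
from graphlib import TopologicalSorter

# Each node maps to the set of nodes whose outputs it consumes (assumed wiring).
workflow = {
    "CheckpointLoaderSimple": set(),          # provides model, CLIP and VAE
    "LoadImage": set(),
    "BackgroundRemoval": {"LoadImage"},       # hypothetical mask-producing node
    "ICLightApplyMaskGrey": {"LoadImage", "BackgroundRemoval"},
    "VAEEncodeArgMax": {"ICLightApplyMaskGrey", "CheckpointLoaderSimple"},
    "IC-Light Apply": {"CheckpointLoaderSimple", "VAEEncodeArgMax"},  # ic_model chosen in-node
    "CLIPTextEncode (positive)": {"CheckpointLoaderSimple"},
    "CLIPTextEncode (negative)": {"CheckpointLoaderSimple"},
    "EmptyLatentImage": set(),                # assumed starting latent for the sampler
    "KSampler": {
        "IC-Light Apply",
        "CLIPTextEncode (positive)",
        "CLIPTextEncode (negative)",
        "EmptyLatentImage",
    },
    "VAEDecode": {"KSampler", "CheckpointLoaderSimple"},
}

order = list(TopologicalSorter(workflow).static_order())
for step, node in enumerate(order, start=1):
    print(step, node)
```

Independent nodes such as the prompt encoders may appear in any order, but the foreground path (mask, encode, apply, sample, decode) always comes out in the sequence described above.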

3.3. Tips for Best Results:

- Use high-quality masks: The mask decides which pixels are kept as the subject, so make sure it accurately outlines the foreground you want to relight.

- Experiment with different lighting prompts: Your prompt describes the new lighting and scene, such as the direction, color, or source of the light. Try different prompts to achieve the desired effect, and refine them based on the results you get.

- Balance subject and scene: IC-Light changes how the subject is lit, but the final image also depends on the background and overall composition. Make sure the lighting you describe suits the new background so the subject and scene read as one coherent image.

For more information, please visit