Segment Anything V2, also known as SAM2, is an AI model developed by Meta AI for promptable object segmentation in both images and videos.

What is Segment Anything V2 (SAM2)?

Segment Anything V2 is an AI model for segmenting objects in images and videos. Meta describes it as the first unified model that handles both image and video segmentation in real time. Segment Anything V2 (SAM2) builds on its predecessor, the Segment Anything Model (SAM), by extending SAM's promptable capabilities to the video domain.

With Segment Anything V2 (SAM2), users select an object in an image or video frame with a prompt such as a click, a bounding box, or a mask. The model then predicts a mask for that object (and, in a video, tracks it across frames), allowing precise extraction and manipulation of specific elements within the visual content.

Highlights of Segment Anything V2 (SAM2)

- State-of-the-Art Performance: SAM2 outperforms prior models on object segmentation for both images and videos. On image segmentation, Meta reports that it is more accurate than its predecessor, SAM, while running about 6x faster.

- Unified Model for Images and Videos: SAM2 is the first model to provide a unified solution for segmenting objects across both images and videos. This integration simplifies the workflow for AI artists, as they can use a single model for various segmentation tasks.

- Enhanced Video Segmentation Capabilities: SAM2 excels in video object segmentation, particularly in tracking object parts. It outperforms existing video segmentation models, offering improved accuracy and consistency in segmenting objects across frames.

- Reduced Interaction Time: Compared to existing interactive video segmentation methods, SAM2 requires less interaction from users; Meta reports roughly 3x fewer interactions than prior approaches. This efficiency allows AI artists to focus more on their creative vision and spend less time on manual segmentation.

- Simple Design and Fast Inference: Despite its advanced capabilities, SAM2 keeps a simple architectural design, and its streaming architecture supports real-time video processing. This lets AI artists integrate SAM2 into their workflows without giving up speed.

How Segment Anything V2 (SAM2) Works

SAM2 extends SAM's promptable capability to videos by introducing a per-session memory module that captures information about the target object, enabling it to track the object across frames even when the object temporarily disappears from view.

The streaming architecture processes video frames one at a time; when the memory module is empty, SAM2 behaves like SAM does on a single image. This design allows real-time video processing and lets SAM's image capabilities generalize naturally to video. Users can also correct mask predictions interactively by adding prompts on any frame.

The model uses a transformer architecture with streaming memory and is trained on the SA-V dataset, which Meta describes as the largest video segmentation dataset to date. SA-V was collected with a model-in-the-loop data engine, in which user interaction improves both the model and the data.
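The streaming loop described above can be illustrated with a toy Python sketch. Everything in it is hypothetical and heavily simplified: frames are 1-D lists of pixel intensities, the "mask decoder" is an intensity threshold (`TOLERANCE`), and a "memory" is just the mean intensity of the masked pixels. The names `MemoryBank`, `segment_frame`, and `segment_video`, the separate prompted/recent queues, and `max_recent=6` are illustrative choices, not SAM2's API or its transformer-based memory attention. Unlike SAM2, which combines prompts with memory, the toy lets a click override memory. It only mirrors the structure: a per-session memory, one frame at a time, image-like behavior when memory is empty, and tracking through a temporary disappearance.

```python
from collections import deque

# Toy stand-in for the mask decoder: a pixel belongs to the object if its
# intensity is within TOLERANCE of the target (hypothetical value).
TOLERANCE = 10


class MemoryBank:
    """Toy per-session memory: prompted-frame memories are kept, while
    memories from recent unprompted frames roll through a fixed-size FIFO."""

    def __init__(self, max_recent=6):
        self.prompted = []                      # memories from frames the user clicked on
        self.recent = deque(maxlen=max_recent)  # oldest entry drops out when full

    def is_empty(self):
        return not self.prompted and not self.recent

    def add(self, memory, was_prompted):
        if was_prompted:
            self.prompted.append(memory)
        else:
            self.recent.append(memory)

    def target(self):
        # Toy "memory attention": average all stored object descriptors.
        memories = self.prompted + list(self.recent)
        return sum(memories) / len(memories)


def predict_mask(frame, target):
    return [i for i, value in enumerate(frame) if abs(value - target) <= TOLERANCE]


def segment_frame(frame, bank, point=None):
    if point is not None:
        # A click defines the target directly; with an empty memory this is
        # the image-only (SAM-like) case.
        target = frame[point]
    elif bank.is_empty():
        return []  # no prompt and no memory: nothing to track yet
    else:
        target = bank.target()  # condition on the memory bank
    mask = predict_mask(frame, target)
    if mask:
        # Store a memory (mean intensity of masked pixels). Frames where the
        # object is absent add nothing, so earlier memories survive the gap.
        bank.add(sum(frame[i] for i in mask) / len(mask), was_prompted=point is not None)
    return mask


def segment_video(frames, prompts):
    """Process frames one at a time; prompts maps frame index -> clicked pixel."""
    bank = MemoryBank()
    return [segment_frame(frame, bank, prompts.get(t)) for t, frame in enumerate(frames)]


frames = [[0, 200, 0, 0], [0, 0, 198, 0], [0, 0, 0, 0], [202, 0, 0, 0]]
print(segment_video(frames, {0: 1}))  # [[1], [2], [], [0]]
```

In this run the object is clicked on frame 0, tracked to a new position on frame 1, absent on frame 2, and picked up again on frame 3 because the memory from earlier frames is still available.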

How to use Segment Anything V2 (SAM2) in ComfyUI

This ComfyUI workflow supports selecting an object in a video frame using a click/point.

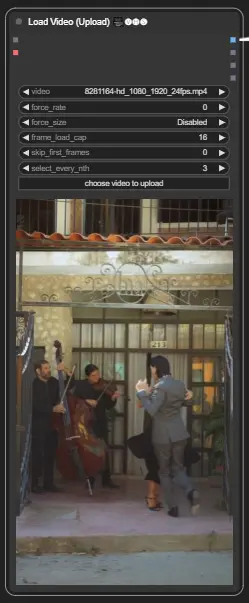

1. Load Video (Upload)

Video Loading: Select and upload the video you wish to process.

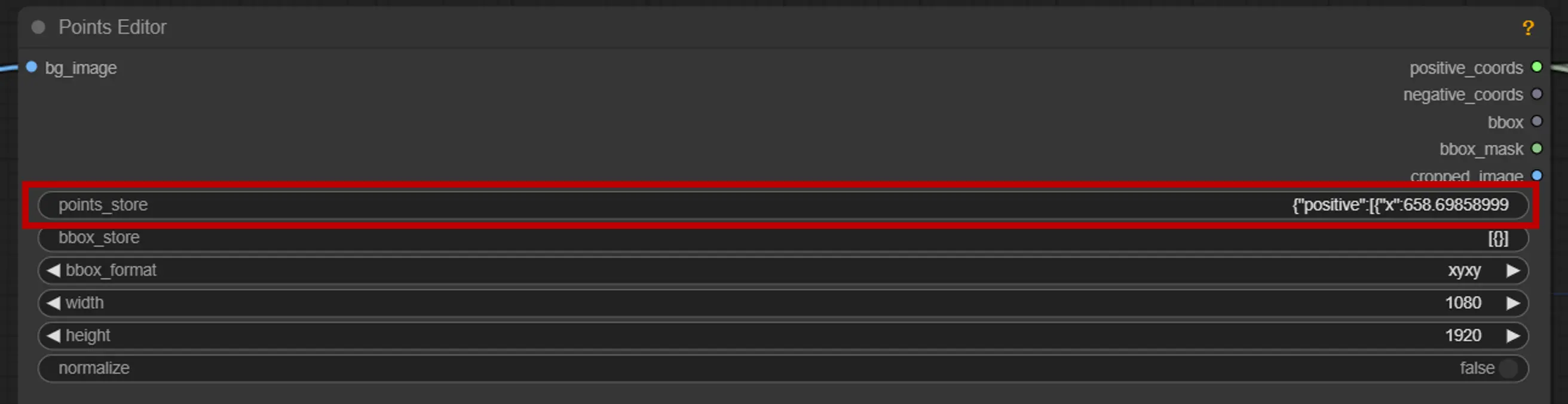

2. Points Editor

Key Points: In this example, three points are placed on the canvas: positive0, positive1, and negative0.

positive0 and positive1 mark the regions or objects you want to segment.

negative0 helps exclude unwanted areas or distractions.

points_store: Allows you to add or remove points as needed to refine the segmentation process.

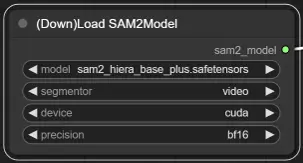
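To make the positive/negative distinction concrete, here is a minimal Python sketch of how clicked points can be turned into a point prompt. It assumes, for illustration only, that the editor's positive and negative points arrive as JSON lists of `{"x": ..., "y": ...}` objects; the function name `to_point_prompts` is hypothetical and the node's actual output format may differ. The labeling convention (1 = foreground point to include, 0 = background point to exclude) is the one SAM and SAM2 use for point labels.

```python
import json


def to_point_prompts(positive_json, negative_json):
    """Merge positive and negative clicks into (coords, labels) lists.

    Assumes each argument is a JSON list of {"x": ..., "y": ...} objects
    (hypothetical format). Label 1 = include (positive), 0 = exclude (negative).
    """
    positives = json.loads(positive_json) if positive_json else []
    negatives = json.loads(negative_json) if negative_json else []
    coords = [[p["x"], p["y"]] for p in positives + negatives]
    labels = [1] * len(positives) + [0] * len(negatives)
    return coords, labels


# positive0, positive1 on the subject; negative0 on an area to exclude.
coords, labels = to_point_prompts(
    '[{"x": 120, "y": 80}, {"x": 140, "y": 95}]',
    '[{"x": 300, "y": 40}]',
)
print(coords)  # [[120, 80], [140, 95], [300, 40]]
print(labels)  # [1, 1, 0]
```

In Meta's official `sam2` package, prompts in this shape (converted to NumPy arrays) are what `SAM2ImagePredictor.predict` accepts through its `point_coords` and `point_labels` arguments; how the ComfyUI node passes them internally may differ.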

3. SAM2 Model Selection

Model Options: Choose from the available SAM2 models: tiny, small, base_plus, or large (listed from smallest to largest). Larger models generally produce more accurate masks but take longer to load and run, and need more GPU memory.
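The size trade-off can be estimated with simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter (2 for fp16, 4 for fp32). The sketch below uses the approximate parameter counts listed for the original SAM2 checkpoints in Meta's sam2 repository; the helper names `weights_mb` and `largest_fitting` are hypothetical. It counts weights only and ignores activations, video memory features, and framework overhead, so treat the result as a lower bound rather than a VRAM requirement.

```python
# Approximate parameter counts (millions) for the SAM2 checkpoints.
SAM2_PARAMS_M = {
    "tiny": 38.9,
    "small": 46.0,
    "base_plus": 80.8,
    "large": 224.4,
}


def weights_mb(variant, bytes_per_param=2):
    """Rough weight size in MB: millions of parameters x bytes per parameter."""
    return SAM2_PARAMS_M[variant] * bytes_per_param


def largest_fitting(budget_mb, bytes_per_param=2):
    """Return the largest variant whose weights fit in budget_mb, or None."""
    fitting = [v for v in SAM2_PARAMS_M if weights_mb(v, bytes_per_param) <= budget_mb]
    return max(fitting, key=SAM2_PARAMS_M.get) if fitting else None


for name in SAM2_PARAMS_M:
    print(f"{name}: ~{weights_mb(name):.1f} MB in fp16")
print(largest_fitting(200))  # base_plus
```

For example, the large model's weights come to about 224.4 x 2 = 448.8 MB in fp16, nearly three times the base_plus model.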

For more information, please visit Kijai's ComfyUI-segment-anything-2 repository.