The ComfyUI-MochiEdit nodes and their associated workflow were developed entirely by logtd and Kijai. We give all due credit to logtd and Kijai for this innovative work. On the RunComfy platform, we are simply presenting their contributions to the community. We deeply appreciate logtd and Kijai's work!

The Mochi Edit workflow is a tool designed to let users modify video content using text-based prompts. It supports tasks such as adding or altering elements (e.g., placing hats on characters), adjusting the overall style, or replacing subjects within the footage.

Mochi Edit Unsampling

At the heart of Mochi Edit is its unsampling technique, which simplifies video and image editing: transformations are driven by multi-modal prompts, with no additional preprocessing steps or external network modules. Instead of complex operations such as face detection or pose estimation, which are common in traditional image-generation pipelines, Mochi Edit works directly on the video's latent representation. Unsampling runs the sampler in reverse to carry the source video's latent back toward noise, and resampling that latent under a new prompt produces the edited video. This fits the broader goal of a more flexible, streamlined generation process, much as GPT can generate text from any input prompt. Because edits come straight from a prompt description, the process is more intuitive and efficient.

In short, Mochi Edit lets you create small variations of the video you upload: for example, transferring the subject's motion to another subject, changing the background, or changing the subject's properties.
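To make the unsampling idea more concrete, here is a toy sketch in Python. It is not Mochi Edit's implementation. It assumes a rectified-flow path (t = 0 is the clean latent, t = 1 is noise), replaces the video model with a hypothetical `toy_velocity` function, and models `eta`, `start_step` and `end_step` on the controller idea from RF-Inversion, which is our assumption about what those node settings do. Unsampling integrates the model's velocity forward to noise; resampling integrates back under a new prompt, optionally pulling the trajectory toward the original latent.

```python
from typing import Callable, List, Optional

Vector = List[float]
# model(x, t, prompt) -> velocity with the same length as x (hypothetical signature)
Velocity = Callable[[Vector, float, str], Vector]


def toy_velocity(x: Vector, t: float, prompt: str) -> Vector:
    # Hypothetical stand-in for the video model: a constant drift whose
    # size depends on the prompt text.
    shift = (sum(map(ord, prompt)) % 7) / 10.0
    return [1.0 + shift for _ in x]


def unsample(x0: Vector, prompt: str, num_steps: int, model: Velocity) -> Vector:
    """Euler-integrate dx/dt = v from t = 0 (clean latent) to t = 1 (noise)."""
    x, dt = list(x0), 1.0 / num_steps
    for i in range(num_steps):
        v = model(x, i * dt, prompt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def resample(
    x1: Vector,
    y0: Vector,
    prompt: str,
    num_steps: int,
    model: Velocity,
    eta: float = 0.0,
    start_step: int = 0,
    end_step: Optional[int] = None,
) -> Vector:
    """Euler-integrate back from t = 1 to t = 0 under `prompt`.

    For steps in [start_step, end_step), the model velocity is blended with
    the velocity of the straight path that ends at the original latent y0:
    eta = 0 ignores y0, eta = 1 follows that path exactly (assumed behavior).
    """
    if end_step is None:
        end_step = num_steps
    x, dt = list(x1), 1.0 / num_steps
    for i in range(num_steps):
        t = 1.0 - i * dt  # current time; always positive, so dividing by t is safe
        v = model(x, t, prompt)
        if eta > 0.0 and start_step <= i < end_step:
            v_orig = [(xi - yi) / t for xi, yi in zip(x, y0)]
            v = [(1.0 - eta) * vi + eta * vo for vi, vo in zip(v, v_orig)]
        x = [xi - dt * vi for xi, vi in zip(x, v)]
    return x
```

In this toy setting, resampling with `eta=0` and the same prompt reconstructs the input, a different prompt shifts the result, and `eta=1` over all steps pins the output to the original latent. That is why `eta` behaves like an edit-strength control here, and `start_step`/`end_step` choose which steps it affects.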

1.1 How to Use the Mochi Edit Workflow

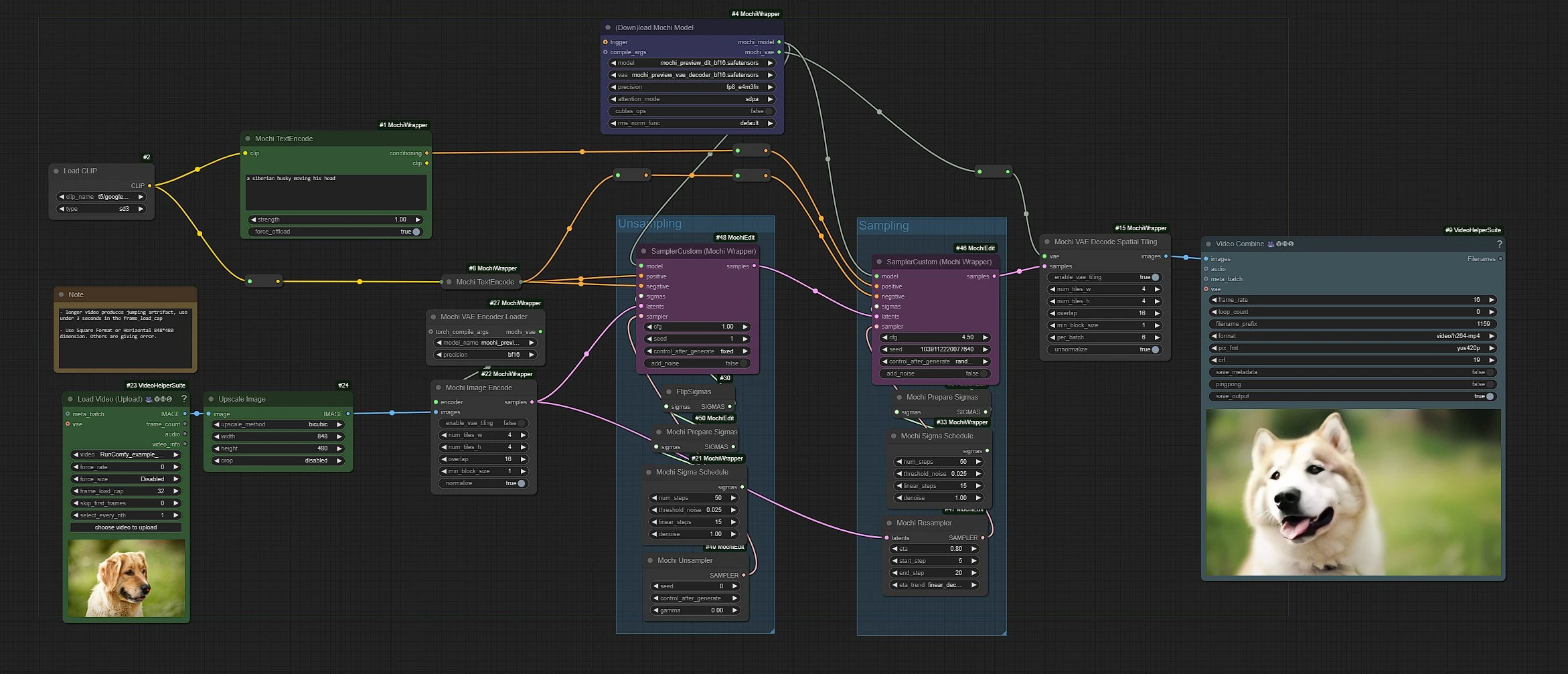

In this workflow, the green nodes on the left are the video and text inputs, the purple nodes in the middle are the Mochi unsampler and sampler nodes, and the blue node on the right is the video output.

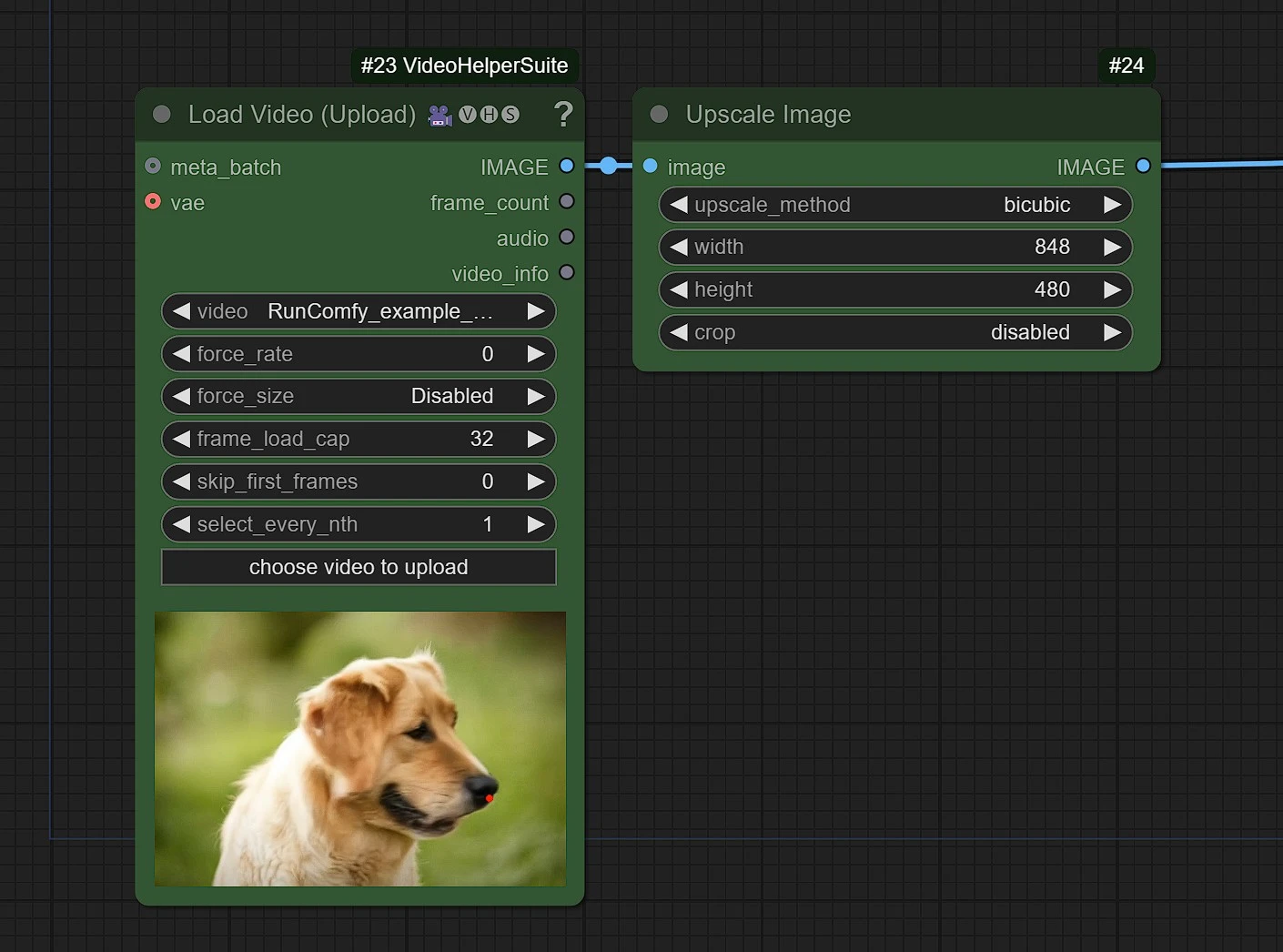

1.2 Load Video Node

- Click the Load Video node and upload your video.

- frame_load_cap: defaults to 32 frames. Jumping artifacts appear above 32 frames, so keep clips to 32 frames or fewer (under about 3 seconds) for best results.

- skip_frames: skips frames at the start of the video, so loading begins from a specific frame.
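The two loader settings amount to simple index arithmetic. The helper below is hypothetical: it assumes skip_frames drops frames from the start, frame_load_cap then limits how many frames are kept, and a cap of 0 means no limit. Actual loader nodes may name or handle these settings differently.

```python
def select_frames(total_frames: int, frame_load_cap: int = 32, skip_frames: int = 0) -> list[int]:
    """Return the indices of the frames that would be loaded (hypothetical helper).

    Assumes skip_frames drops frames from the start and frame_load_cap limits
    how many frames are kept afterwards; a cap of 0 is treated as "no cap".
    """
    if total_frames < 0 or frame_load_cap < 0 or skip_frames < 0:
        raise ValueError("counts must be non-negative")
    start = min(skip_frames, total_frames)
    if frame_load_cap == 0:
        end = total_frames
    else:
        end = min(total_frames, start + frame_load_cap)
    return list(range(start, end))


def clip_seconds(num_frames: int, fps: float) -> float:
    """Duration in seconds of num_frames played back at fps frames per second."""
    return num_frames / fps
```

For example, `select_frames(120, 32, 24)` keeps frames 24 through 55, and `clip_seconds(32, 24)` gives about 1.33 seconds, so a 32-frame clip at 24 fps stays well within the recommended length.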

In the upscale node, use either a square (512 x 512) or a horizontal (848 x 480) resolution; other dimensions produce errors.
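Because only these two sizes work, it can help to pick the one whose aspect ratio best matches the source before resizing. This hypothetical helper compares aspect ratios on a log scale; it only chooses a target size and leaves any cropping or padding to the resize node.

```python
import math

# The two resolutions the workflow is described as accepting, as (width, height).
SUPPORTED = {"square": (512, 512), "horizontal": (848, 480)}


def pick_resolution(width: int, height: int) -> tuple[int, int]:
    """Pick the supported resolution whose aspect ratio is closest to the input's.

    Distance is |log(aspect_in / aspect_target)|, so being 2x too wide and
    2x too tall count as equally far. Hypothetical helper.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    aspect = width / height
    return min(
        SUPPORTED.values(),
        key=lambda wh: abs(math.log(aspect / (wh[0] / wh[1]))),
    )
```

A 1920 x 1080 clip maps to 848 x 480, while square and vertical clips map to 512 x 512, since no vertical size is listed.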

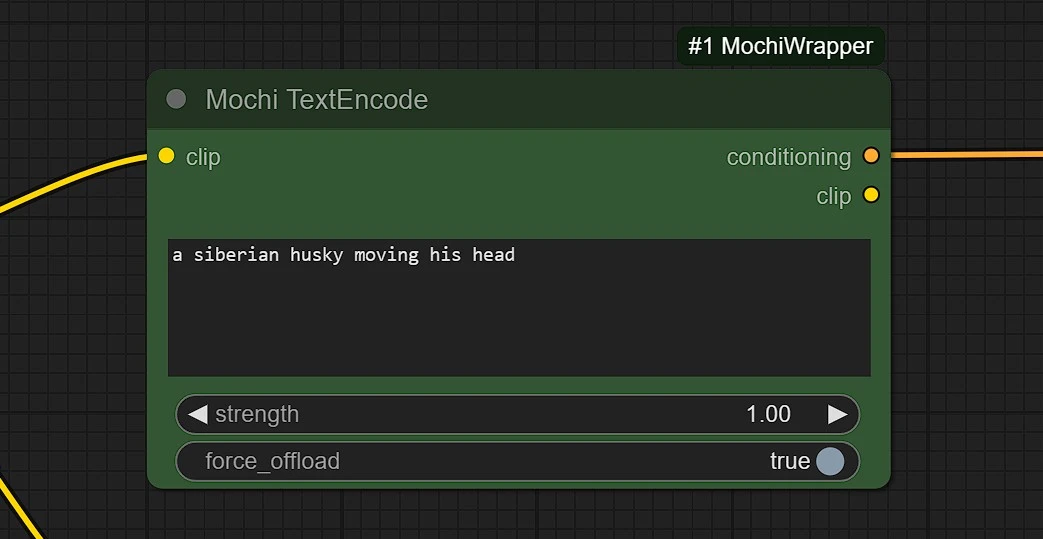

1.3 Prompts

Prompt-based editing is experimental: it may work, it may fail, or it may change the original video entirely.

- Describe only a small variation of the subject in the prompt.

- Strong variations may distort the video or change it completely.

- Try a different seed if you are not getting good results.

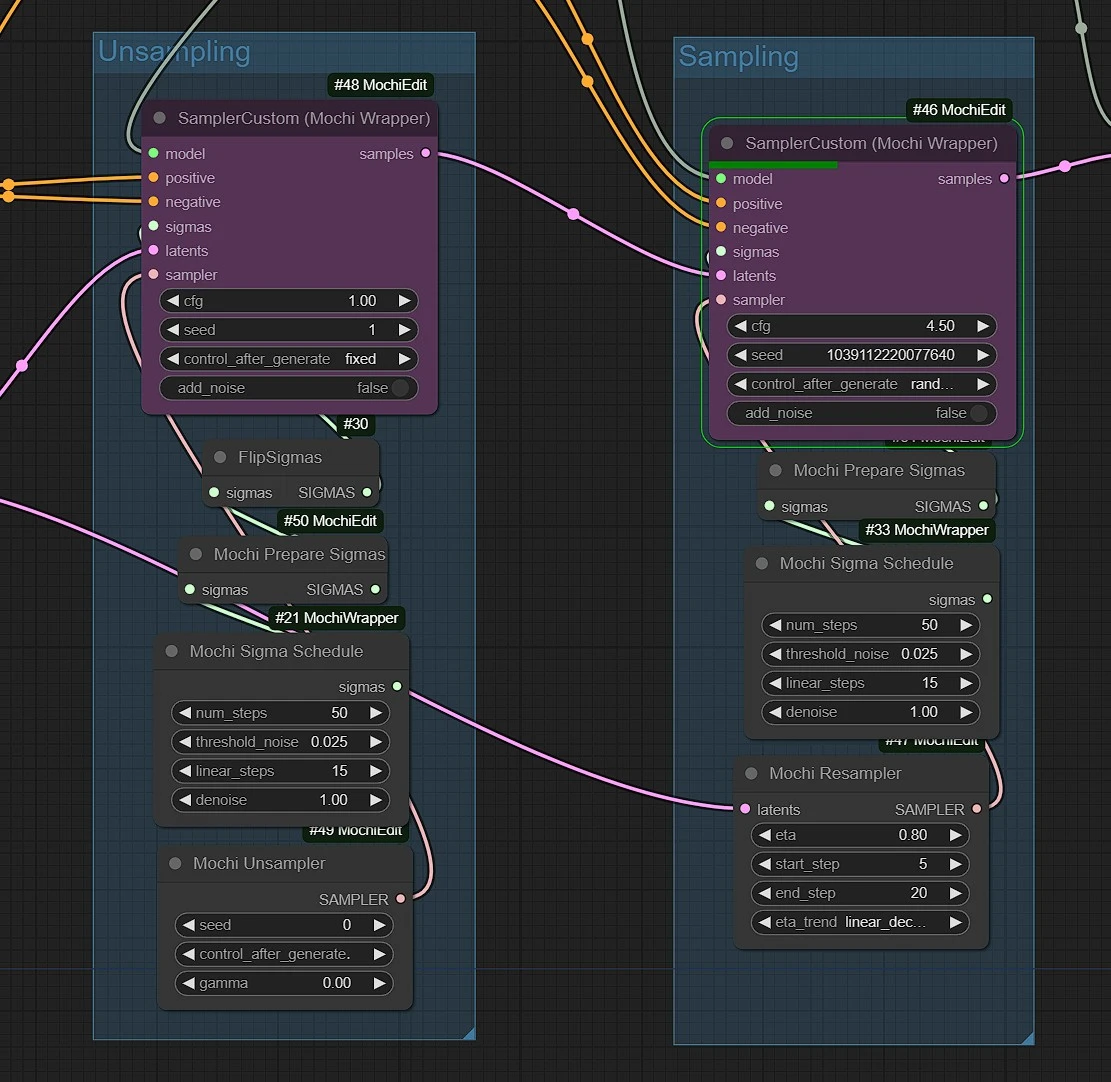

1.4 Sampling and Unsampling Node Groups

The author has tuned the sampling and unsampling KSampler settings for best results; changing them arbitrarily may produce strange, undesirable output. The following settings are worth experimenting with:

- seed: to get variations.

- num_steps and linear_step: to trade rendering quality against speed.

- eta, start_step and end_step: to change the unsampling strength and where unsampling starts and ends.
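The text does not say how num_steps and linear_step shape the denoising schedule. To our understanding, Mochi's reference sampler uses a linear-then-quadratic noise schedule, and the sketch below reconstructs that idea under the assumption that linear_step sets where the linear part ends. The function name, the `linear_steps` parameter name and the default `threshold_noise=0.025` are assumptions, not the node's documented API.

```python
from typing import List, Optional


def linear_quadratic_sigmas(
    num_steps: int,
    linear_steps: Optional[int] = None,
    threshold_noise: float = 0.025,
) -> List[float]:
    """Return num_steps + 1 noise levels falling from 1.0 to 0.0 (assumed schedule).

    The first `linear_steps` steps lower the noise linearly from 1.0 to
    1.0 - threshold_noise; the remaining steps follow a quadratic curve
    that ends exactly at 0.0.
    """
    if linear_steps is None:
        linear_steps = num_steps // 2
    if not 0 < linear_steps < num_steps:
        raise ValueError("need 0 < linear_steps < num_steps")
    quad_steps = num_steps - linear_steps
    # Work in "progress" p (0 = pure noise, 1 = clean), then flip to sigma = 1 - p.
    linear = [i * threshold_noise / linear_steps for i in range(linear_steps)]
    gap = linear_steps - threshold_noise * num_steps
    a = gap / (linear_steps * quad_steps ** 2)
    b = threshold_noise / linear_steps - 2 * gap / quad_steps ** 2
    c = a * linear_steps ** 2
    # Chosen so the curve equals threshold_noise at i = linear_steps and 1.0 at i = num_steps.
    quadratic = [a * i ** 2 + b * i + c for i in range(linear_steps, num_steps)]
    progress = linear + quadratic + [1.0]
    return [1.0 - p for p in progress]
```

Under this assumption, more steps give a finer (slower) schedule, and a larger linear_step spends more of those steps in the high-noise region before the quadratic tail takes over.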

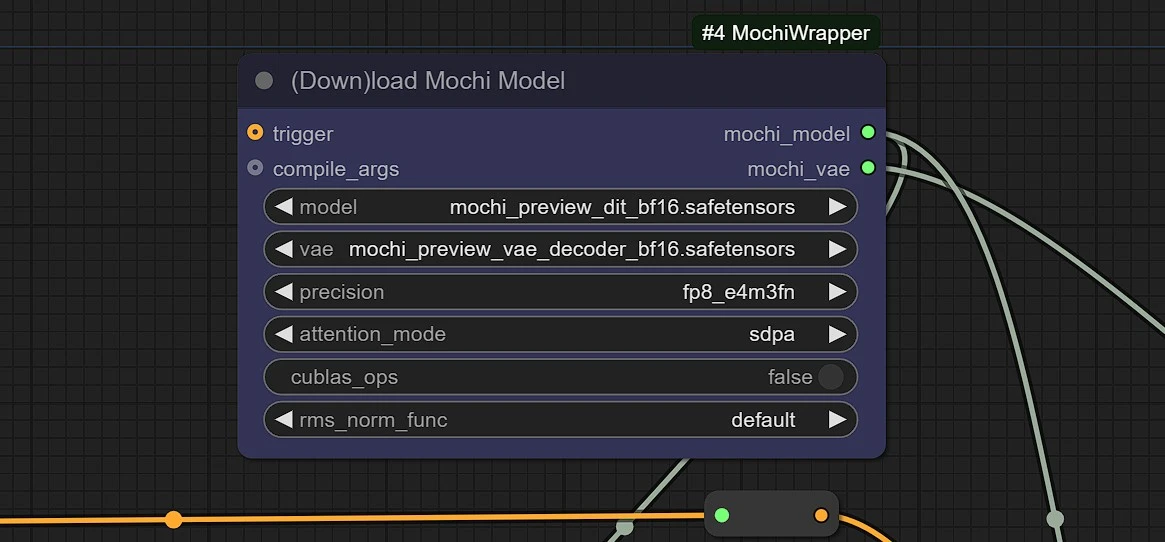

1.5 Mochi Models

The models are downloaded automatically into your ComfyUI installation from this Hugging Face repo. The first download of the 10.3 GB model takes around 5-10 minutes.

Mochi Edit's unsampling technique simplifies video and image editing by removing the need for complex preprocessing or additional modules, letting users create customized visuals directly from multi-modal prompts. By combining flexibility with accessibility, Mochi Edit points toward a more intuitive and creative approach to image generation.