Mochi 1 | Genmo Text-to-Video

Mochi 1 Preview is an open, state-of-the-art video generation model that showed high-fidelity motion and strong prompt adherence in preliminary evaluation. It dramatically closes the gap between closed and open video generation systems. The Mochi 1 model is developed by Genmo, and all credit for the model goes to Genmo.

ComfyUI Mochi 1 Workflow

- Fully operational workflows

- No missing nodes or models

- No manual setups required

- Features stunning visuals

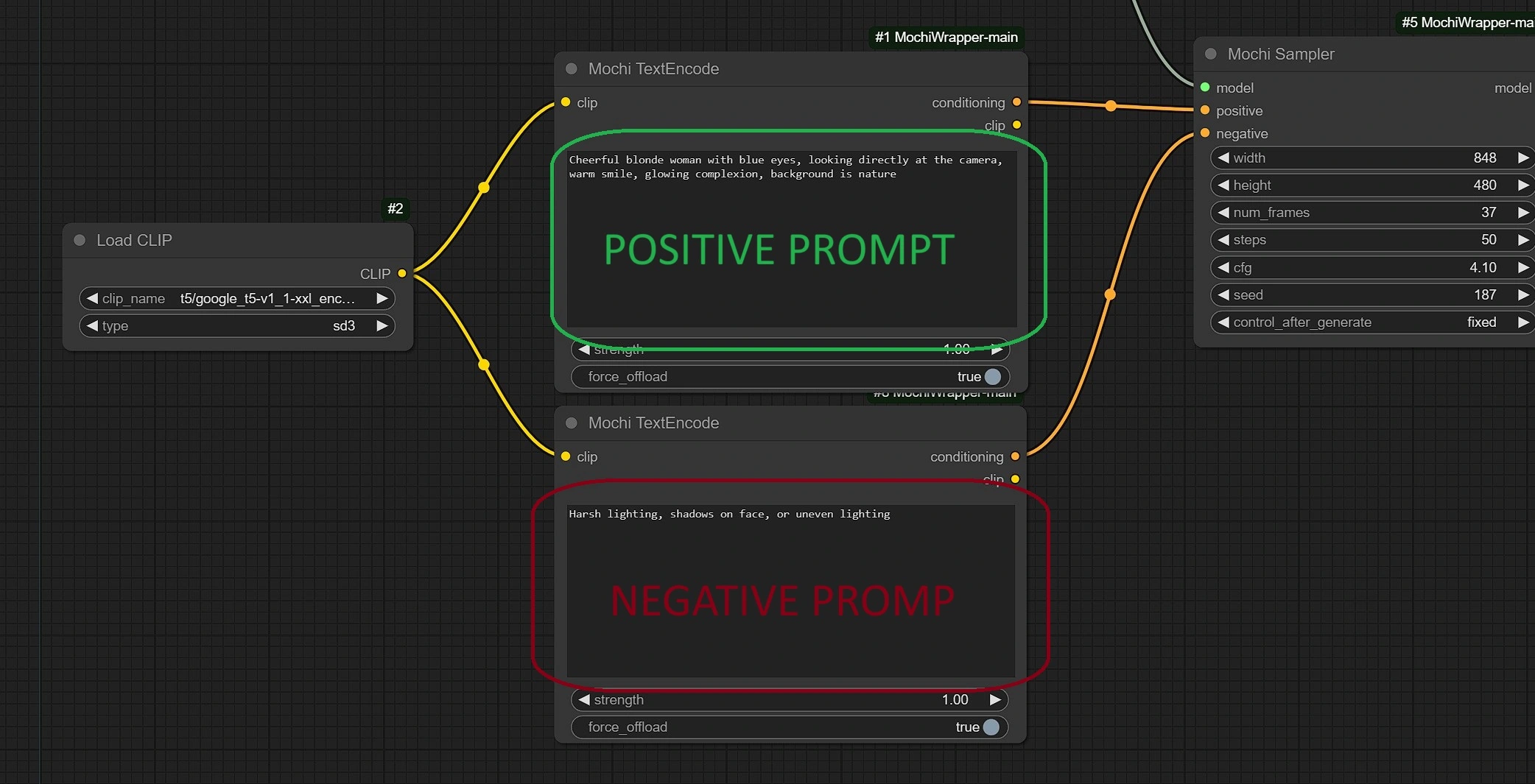

ComfyUI Mochi 1 Description

The Mochi Wrapper and its associated workflow are fully developed by Kijai. We give all due credit to Kijai for this innovative work. On the RunComfy platform, we are simply presenting Kijai’s contributions to the community. There is currently no formal connection or partnership between RunComfy and Kijai. We deeply appreciate Kijai’s work!

Mochi 1

Mochi 1 is an advanced text-to-video model from Genmo that turns textual descriptions into vivid, visually engaging videos. It can interpret a wide range of prompts, producing dynamic animations, realistic scenes, or artistic visualizations that match the user’s intent. Whether for storytelling, advertising, educational content, or entertainment, Mochi 1 supports diverse video styles, from 2D animations to cinematic renders, and is designed to empower creators and make video production more accessible.

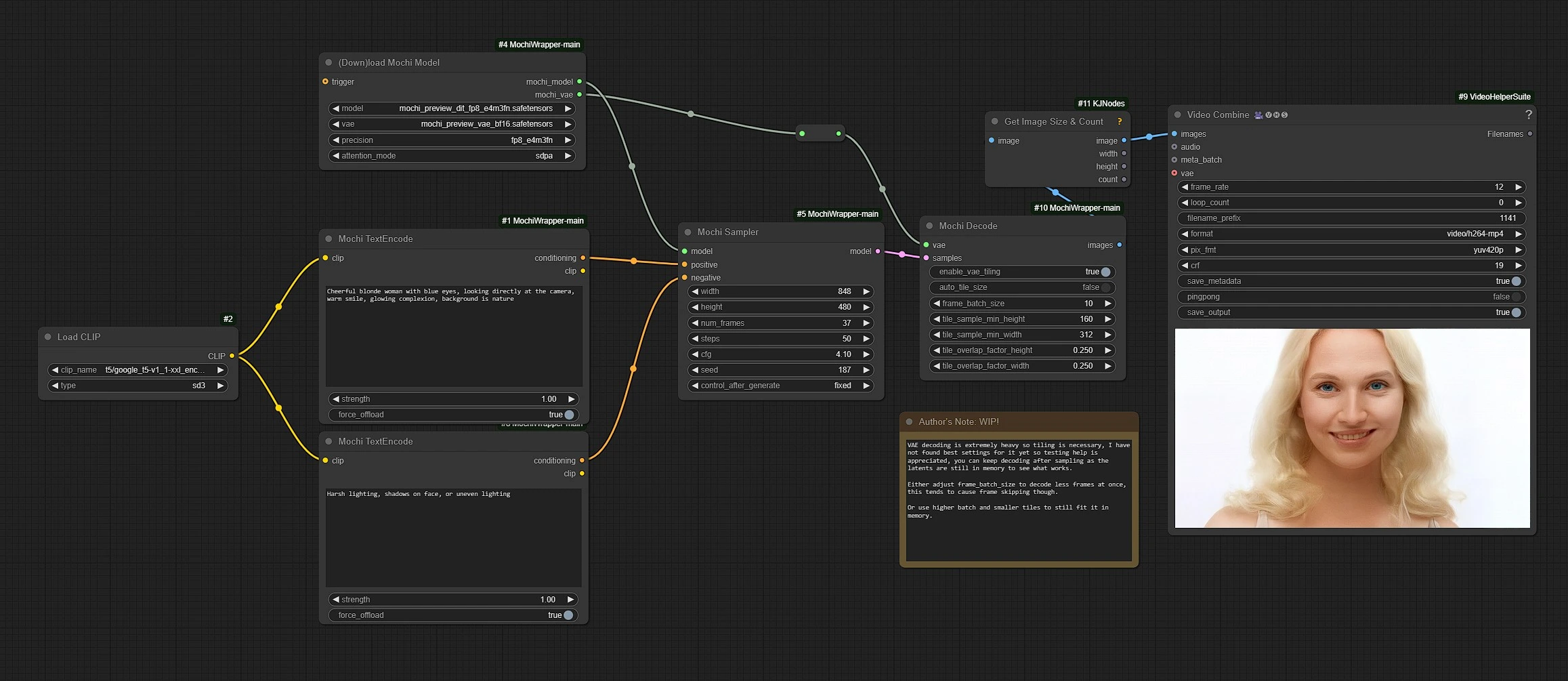

How to Use the ComfyUI Mochi 1 Workflow

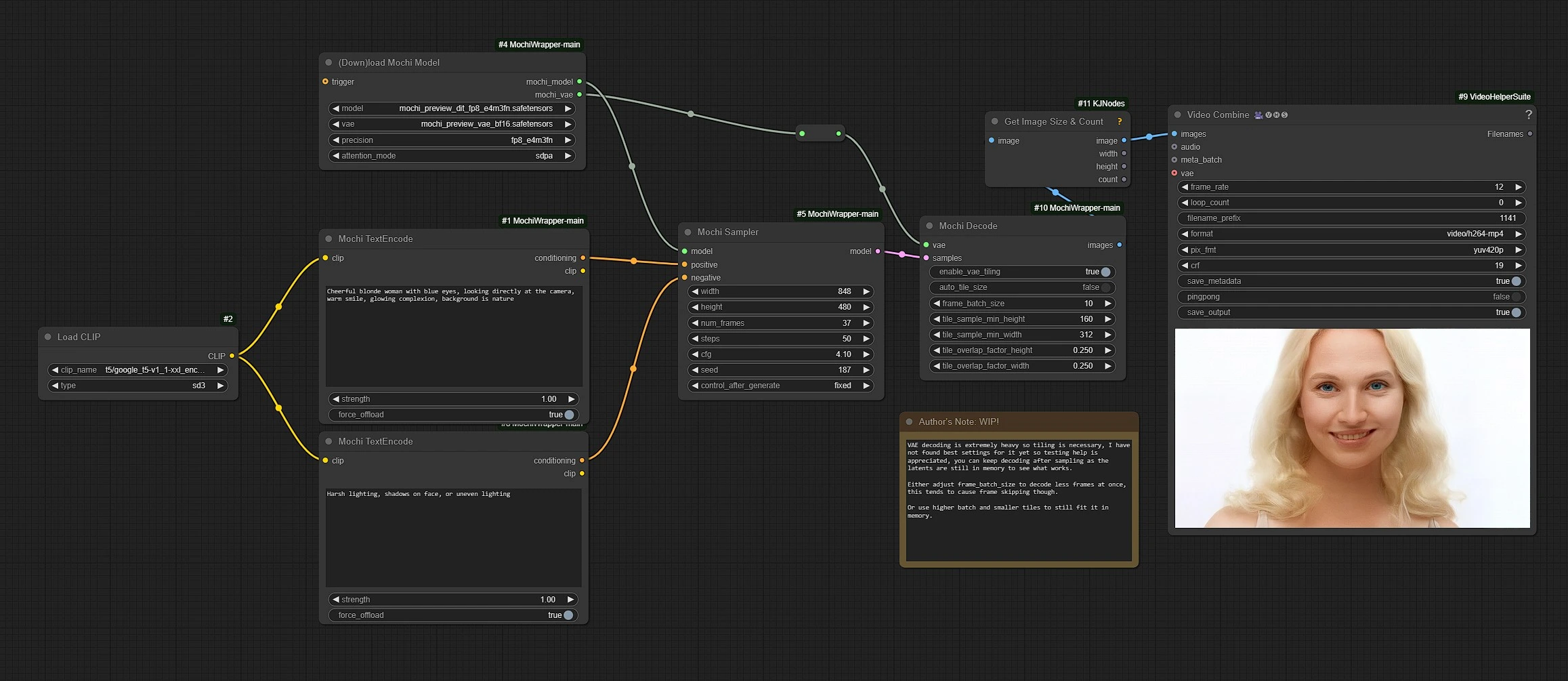

1.1 Mochi 1 Sampler

This is the main node of the workflow. Here you define most of the Mochi 1 generation settings, such as resolution, number of frames, steps, and cfg.

Parameters:

- Width: The width of the generated video, in pixels.

- Height: The height of the generated video, in pixels.

- num_frames: The number of frames in the generated video.

- steps: The number of sampling (denoising) steps. More steps can produce finer detail but take more processing time.

- cfg: The Classifier-Free Guidance scale, which adjusts how closely Mochi 1 follows the prompts.

- seed: Controls randomness in the generation process. The same seed with the same settings yields the same result.

- control_after_generate: Determines how the seed changes after each run (fixed, increment, decrement, or randomize).
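ComfyUI’s control_after_generate widget typically offers the modes fixed, increment, decrement, and randomize. The sketch below models how each mode could update the seed between runs. It is a simplified illustration, not ComfyUI’s code: the `next_seed` name, the 64-bit `MAX_SEED` bound, and the wrap-around at the ends of the range are assumptions.

```python
import random
from typing import Optional

# Assumption: seeds are unsigned 64-bit integers; wrap-around is illustrative only.
MAX_SEED = 2**64 - 1


def next_seed(seed: int, mode: str, rng: Optional[random.Random] = None) -> int:
    """Hypothetical model of control_after_generate: return the seed for the next run."""
    if mode == "fixed":
        # Reuse the same seed, so identical settings reproduce the same video.
        return seed
    if mode == "increment":
        return (seed + 1) % (MAX_SEED + 1)
    if mode == "decrement":
        return (seed - 1) % (MAX_SEED + 1)
    if mode == "randomize":
        # Draw a fresh seed anywhere in the valid range.
        rng = rng or random.Random()
        return rng.randint(0, MAX_SEED)
    raise ValueError(f"unknown mode: {mode!r}")
```

Keep the mode on fixed while tuning prompts or steps, so that differences between runs come from your changes rather than from a new seed.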

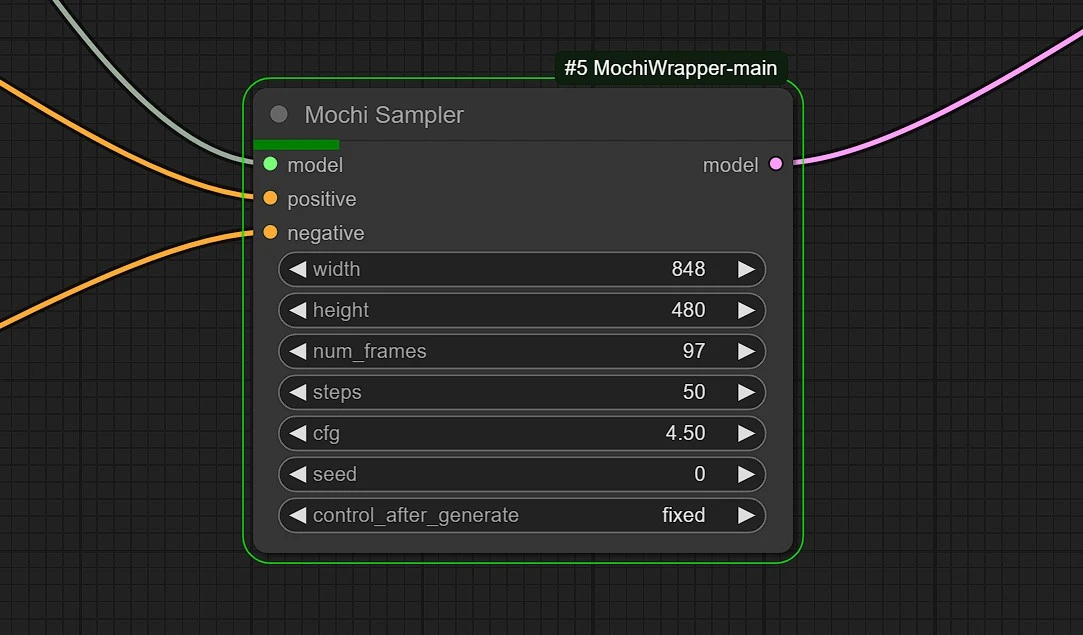

1.2 Positive and Negative Prompts

- Positive Prompt: Describes what you want Mochi 1 to generate, focusing on the desired elements. For example, “a blonde woman smiling in natural sunlight” or “a serene park with blooming flowers.”

- Negative Prompt: Describes what you do not want Mochi 1 to generate in the video.

- Strength: Determines the strength of the prompt, that is, how closely Mochi 1 follows it.
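The sampler’s cfg value and the two prompts interact through classifier-free guidance. A common formulation combines the model’s prediction for the positive prompt (cond) with its prediction for the negative prompt (uncond) as uncond + cfg × (cond − uncond). This is a generic sketch on plain Python lists, assuming the sampler uses the negative prompt as the unconditional branch, as ComfyUI samplers generally do. It is not the Mochi wrapper’s implementation, and `cfg_combine` is a hypothetical name.

```python
from typing import List, Sequence


def cfg_combine(cond: Sequence[float], uncond: Sequence[float], cfg: float) -> List[float]:
    """Classifier-free guidance on flat predictions.

    cond:   prediction conditioned on the positive prompt
    uncond: prediction conditioned on the negative prompt (unconditional branch)
    cfg:    guidance scale; 1.0 reproduces cond (up to rounding), larger
            values push the result further away from the negative prompt
    """
    if len(cond) != len(uncond):
        raise ValueError("cond and uncond must have the same length")
    # Start from the negative prediction and move past the positive one by cfg.
    return [u + cfg * (c - u) for c, u in zip(cond, uncond)]
```

With cfg = 1 the negative prompt has no extra effect. Values above 1 amplify the difference between the two predictions, which tends to strengthen prompt adherence but can introduce artifacts at very high settings.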

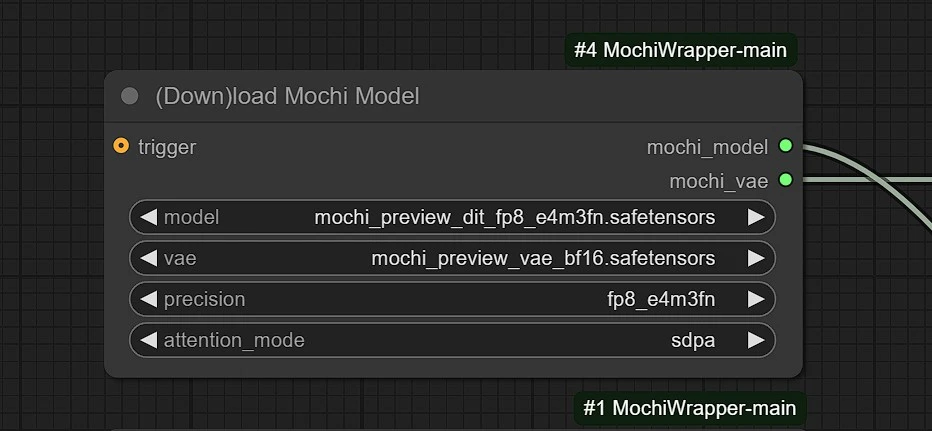

1.3 Model AutoDownloader

- This is the automatic downloader and loader for the Mochi 1 models.

- On the first run, it downloads the 19.1 GB Mochi 1 model, which takes about 3-5 minutes.
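The 3-5 minute estimate depends on your network speed. As a rough check, assuming decimal units (1 GB = 1000 MB, 1 byte = 8 bits) and a steady connection, the download time for the 19.1 GB model can be estimated as follows. `download_minutes` is a hypothetical helper, and real downloads add protocol and disk overhead.

```python
MODEL_SIZE_GB = 19.1  # size of the Mochi 1 model download, as stated above


def download_minutes(size_gb: float, mbit_per_s: float) -> float:
    """Estimate download time in minutes for a steady link speed in Mbit/s."""
    if mbit_per_s <= 0:
        raise ValueError("link speed must be positive")
    megabits = size_gb * 1000 * 8  # GB -> MB -> Mbit (decimal units)
    return megabits / mbit_per_s / 60


for speed in (500, 1000):
    print(f"{speed} Mbit/s: {download_minutes(MODEL_SIZE_GB, speed):.1f} min")
```

By this arithmetic, 3-5 minutes corresponds to a sustained speed of roughly 510-850 Mbit/s; slower connections will take proportionally longer.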

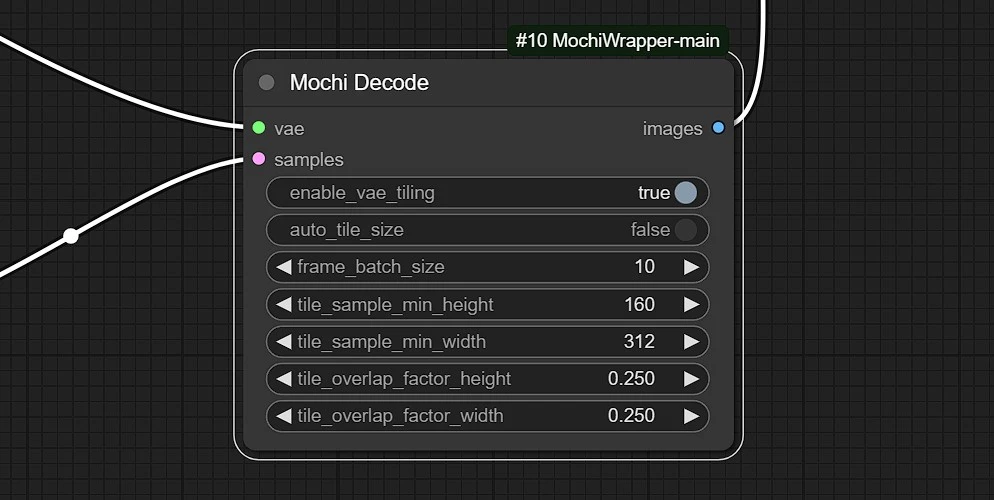

1.4 Mochi 1 Decode

Parameters:

- enable_vae_tiling: Enables or disables tiled decoding to reduce VRAM consumption.

- auto_tile_size: Automatically determines the tile size for decoding.

- frames_batch_size: The number of frames decoded in one pass. Lower values use less GPU memory but take more time.

- tile_sample_min_height: Minimum tile height for decoding Mochi 1 outputs.

- tile_sample_min_width: Minimum tile width for decoding Mochi 1 outputs.

- tile_overlap_factor_height: How much vertically adjacent tiles overlap, as a fraction of the tile height.

- tile_overlap_factor_width: How much horizontally adjacent tiles overlap, as a fraction of the tile width.
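To get a feel for how the tiling parameters interact, the sketch below computes where tiles start along one axis when each tile spans tile_sample_min pixels and neighbouring tiles overlap by the given factor, plus how many decode passes a given frames_batch_size implies. It is a simplified pixel-space model in the style of common tiled VAE decoders (such as the tiling in Hugging Face diffusers); the Mochi wrapper’s decoder may tile in latent space and differ in detail. The function names and the example sizes are hypothetical.

```python
import math
from typing import List


def tile_origins(length: int, tile_min: int, overlap_factor: float) -> List[int]:
    """Start offsets of tiles along one axis (height or width)."""
    if not 0 <= overlap_factor < 1:
        raise ValueError("overlap_factor must be in [0, 1)")
    # Consecutive tiles start (1 - overlap) * tile_min apart, so neighbours
    # share about overlap_factor * tile_min pixels, which are blended when stitching.
    stride = max(1, int(tile_min * (1 - overlap_factor)))
    return list(range(0, length, stride))


def frame_batches(num_frames: int, frames_batch_size: int) -> int:
    """Number of decode passes needed when frames are decoded in batches."""
    return math.ceil(num_frames / frames_batch_size)


# Example values only: an 848x480 video, 240-pixel tiles, 25% overlap.
rows = tile_origins(480, 240, 0.25)  # along the height
cols = tile_origins(848, 240, 0.25)  # along the width
print(len(rows) * len(cols), "tiles per frame batch")
print(frame_batches(163, 10), "frame batches")
```

Smaller tiles and smaller frame batches lower peak VRAM but require more decode passes, which is why lower frames_batch_size values take longer.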

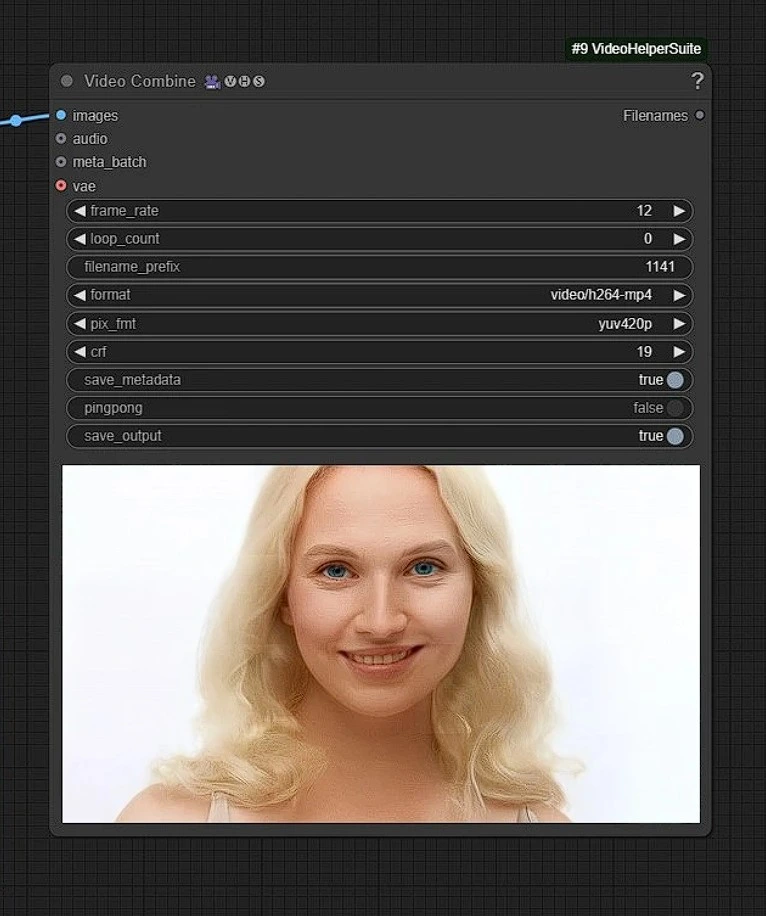

1.5 Video Combine

Parameters:

- frame_rate: Sets the frame rate of the Mochi 1 output. Here it is set to 12, so the video plays 12 frames per second (fps).

- loop_count: Defines how many times the video loops. A value of 0 typically means infinite looping, though this may depend on the player or format.

- filename_prefix: Adds a prefix to the filename of the Mochi 1 output. In the image it is set to 1141, so the output filename starts with that number.

- format: Specifies the output video format. Here it is set to video/h264-mp4, which encodes the video with the H.264 codec and saves it as an MP4 file.

- pix_fmt: Defines the pixel format. The value yuv420p uses the YUV color space with 4:2:0 chroma subsampling, which is widely used for its balance of quality and compression.

- crf: Constant Rate Factor, which controls the trade-off between quality and file size. Lower values give better quality and larger files; higher values reduce both. A value of 19 provides a good balance.

- save_metadata: When enabled (true), saves metadata such as the workflow settings into the output file.

- pingpong: When enabled, the video plays forward and then in reverse, creating a "ping-pong" effect. It is set to false here, so the effect is not applied.

- save_output: When set to true, the final Mochi 1 output is saved to disk.
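Video Combine relies on ffmpeg for video formats such as video/h264-mp4. As a rough, hypothetical equivalent, the sketch below builds a standalone ffmpeg command with the same frame_rate, pix_fmt, and crf settings and estimates the clip duration. The frame file pattern, output name, helper names, and the way pingpong is counted (reversed frames appended without repeating the two end frames) are assumptions, not the node’s exact behaviour.

```python
from typing import List


def ffmpeg_args(frame_pattern: str, out_path: str, frame_rate: int = 12,
                pix_fmt: str = "yuv420p", crf: int = 19) -> List[str]:
    """Build an ffmpeg command that encodes numbered frames to H.264 MP4."""
    return [
        "ffmpeg",
        "-framerate", str(frame_rate),  # input frames per second
        "-i", frame_pattern,            # e.g. frames/%05d.png
        "-c:v", "libx264",              # H.264 encoder
        "-pix_fmt", pix_fmt,            # pixel format, e.g. yuv420p
        "-crf", str(crf),               # lower = higher quality, larger file
        out_path,
    ]


def duration_seconds(num_frames: int, frame_rate: float, pingpong: bool = False) -> float:
    """Clip length in seconds; pingpong appends the frames in reverse (assumed)."""
    total = (num_frames + max(num_frames - 2, 0)) if pingpong else num_frames
    return total / frame_rate


print(" ".join(ffmpeg_args("frames/%05d.png", "1141_output.mp4")))
print(f"{duration_seconds(163, 12):.2f} s")
```

At 12 fps, for example, 163 frames last about 13.6 seconds; enabling pingpong roughly doubles that.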

Designed for accessibility, Mochi 1 lets users customize details such as color schemes, camera angles, and motion dynamics, making it adaptable for both novice and experienced creators. Mochi 1 is trained on a large dataset of visuals, which helps it understand context, emotion, and the nuances of visual storytelling. Its output can be saved in standard formats such as MP4, so users can polish generated videos or add custom effects in popular editing tools.

By combining efficiency and creativity, Mochi 1 opens new possibilities for content creators, saving time and enabling rapid prototyping. This makes it well suited to YouTube videos, social media content, or interactive stories, letting users express ideas in compelling visual formats without in-depth video editing expertise.