Luma Ray 2

Introduction to Luma Ray 2

Luma Ray 2 is a cutting-edge video generative model developed by Luma Labs and released in January 2025. Built on a new multimodal architecture, it creates realistic visuals with smooth, coherent motion. Luma Ray 2 excels at both text-to-video and image-to-video generation, delivering ultra-realistic detail and logically coherent sequences of events.

Discover the Key Features of Luma Ray 2

Ship captain smokes a pipe, turns and looks at a looming storm in the distance

Flexible Input and Responsive Generation

Luma Ray 2 supports text-to-video, allowing users to control scene layout, objects, and movement through text-based instructions. It also supports image-to-video, transforming a single image into a moving video while preserving details.

A man plays saxophone

Lifelike Characters and Detailed Environments

Luma Ray 2 is designed to generate realistic people with natural facial expressions and movements. Characters appear expressive and fluid, making animations feel more authentic.

An overhead shot follows a vintage car winding through autumn-painted mountain roads, its polished surface reflecting the fiery canopy above. Fallen leaves swirl in its wake while sunlight filters through branches, creating a dappled dance of light across the hood.

Smooth Motion and Realistic Physics

Luma Ray 2 generates natural, fluid movement. It approximates real-world physics, so effects like gravity, object collisions, and flowing water behave plausibly. Whether it is animating a person walking or a ball rolling, the model aims to keep motion consistent and logical, which helps videos look realistic.

Seal Whiskers

Realistic and Cinematic Visuals

Luma Ray 2 creates videos with a natural, film-like appearance by carefully rendering details, lighting, and composition. It captures fine textures, reflections, and depth to make scenes look as close to real life as possible. The model also applies cinematic techniques like balanced framing and natural contrast, making videos visually engaging. Even in close-up shots, it preserves small details like skin textures and fabric patterns, keeping the visuals sharp and realistic.

a tiny chihuahua dressed in post apocalyptic leathers with a WW2 bomber cap and goggles, driving through a wasteland in a tiny lifted and tricked out rig. The style is action movie, and filmed from a dramatic close up angle

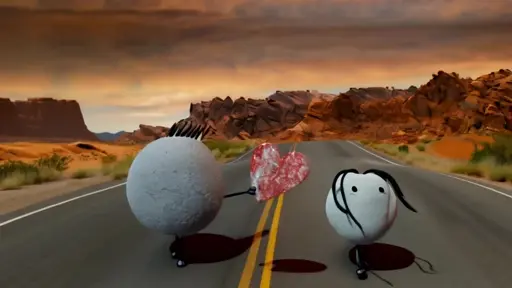

Visual Effects

Luma Ray 2 excels at creating visually striking videos with advanced visual effects. It can enhance scenes with cinematic elements such as slow motion, dramatic lighting, and distinctive color treatments. It also supports a range of visual styles, from surreal and abstract imagery to realistic effects, so users can experiment with different looks and moods.

Frequently Asked Questions

What is Luma Ray 2?

Luma Ray 2 is a large-scale video generative model capable of producing realistic visuals with natural, coherent motion. It understands text instructions and can accept image and video inputs. Built on Luma's multimodal architecture and trained with 10 times the compute of its predecessor, Luma Ray 1, it can generate videos up to 10 seconds long at resolutions up to 1080p. Because it is trained directly on video data, it learns natural motion, realistic lighting, and accurate interactions between objects, which makes its outputs look authentic.

How does Luma Ray 2 work?

Luma Ray 2 works by leveraging deep learning models trained on a vast dataset of video, images, and motion data to generate realistic videos based on user inputs. Luma Ray 2 is designed to understand and generate natural motion, lighting, and interactions between objects, which makes its outputs appear seamless and realistic. Here’s a step-by-step breakdown of its functionality:

- Input Processing: The user provides input in the form of text prompts or images. Text prompts describe the scene, objects, actions, or environments for the video, while image inputs can be used to generate videos based on a static image, turning it into a dynamic sequence.

- Feature Extraction: Luma Ray 2's architecture processes the input by extracting key features and understanding the context of the scene. For example, it identifies objects, lighting, and their relationships.

- Video Generation: Luma Ray 2 uses its neural network to generate a video sequence that is consistent with the input. It handles motion and interaction in a physically realistic manner, ensuring that objects move smoothly and lighting behaves naturally.

- Refinement and Upscaling: Once the initial video is generated, Luma Ray 2 offers options to upscale it to higher resolutions (up to 4K) while preserving quality. It also supports dynamic elements like camera angles and motion, ensuring a visually appealing outcome.

How to use Luma Ray 2?

You can use Luma Ray 2 through the Luma Dream Machine platform. First, visit the Luma AI website and open the Dream Machine interface. Start a new project by clicking "Start a Board", then select the Luma Ray 2 model in the settings menu. Adjust parameters such as aspect ratio, resolution, and duration, and enter a detailed text prompt describing the scene or action you want to generate. After you submit your prompt, Luma Ray 2 processes it and generates the corresponding video.
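The settings listed above can be summarized in a small sketch that validates a request before it is submitted. Everything here is hypothetical: `GenerationRequest`, `build_request`, the field names, and the model identifiers `"ray-2"` and `"ray-flash-2"` are illustrative assumptions, not Luma's documented API. The resolutions and durations are the ones listed in this FAQ; the aspect-ratio list is also an assumption.

```python
from dataclasses import asdict, dataclass

# Resolutions and durations come from this FAQ.
# ASSUMPTION: the aspect ratios and model names below are illustrative only.
RESOLUTIONS = {"540p", "720p", "1080p"}
DURATIONS = {"5s", "10s"}
ASPECT_RATIOS = {"16:9", "9:16", "1:1", "4:3", "3:4", "21:9"}
MODELS = {"ray-2", "ray-flash-2"}


@dataclass(frozen=True)
class GenerationRequest:
    """Hypothetical container for the settings chosen in the interface."""
    prompt: str
    model: str = "ray-2"
    aspect_ratio: str = "16:9"
    resolution: str = "720p"
    duration: str = "5s"


def build_request(prompt: str, **settings: str) -> dict:
    """Validate the chosen settings and return them as a plain dict (hypothetical helper)."""
    request = GenerationRequest(prompt=prompt.strip(), **settings)
    if not request.prompt:
        raise ValueError("prompt must not be empty")
    checks = [
        ("model", request.model, MODELS),
        ("aspect_ratio", request.aspect_ratio, ASPECT_RATIOS),
        ("resolution", request.resolution, RESOLUTIONS),
        ("duration", request.duration, DURATIONS),
    ]
    for name, value, allowed in checks:
        if value not in allowed:
            raise ValueError(f"unsupported {name}: {value!r}; expected one of {sorted(allowed)}")
    return asdict(request)


if __name__ == "__main__":
    print(build_request(
        "An overhead shot follows a vintage car winding through autumn mountain roads",
        resolution="1080p",
        duration="10s",
    ))
```

Validating locally like this catches an unsupported resolution or duration before any credits are spent; the real interface enforces its own limits, which may differ from this sketch.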

Alternatively, you can access Luma Ray 2 through the RunComfy AI Playground, which offers capabilities similar to the Luma Dream Machine platform along with a wide range of other AI tools. There you can experiment with various models for video, image, and animation generation in one place, giving you a comprehensive creative space for your projects.

How much does Luma Ray 2 cost?

Luma offers subscription plans starting from the Lite Plan at $9.99 USD/month. However, if you're looking to explore not just Luma Ray 2 but also a variety of other AI tools, RunComfy AI Playground provides a similar basic plan at the same price. This plan gives you access to Luma Ray 2 along with other powerful tools for video, image, and animation generation, making it a great option if you want to experiment with multiple AI models in a single platform. You can find more details and pricing on RunComfy's Playground Pricing page.

How does Luma Ray 2 differ from Luma Ray 1.6 (also known as Luma Dream Machine 1.6)?

Luma Ray 2 introduces several significant enhancements over its predecessor, Luma Ray 1.6 (also known as Luma Dream Machine 1.6), particularly in realism and quality, video length and resolution, and workflow efficiency.

- Realism and quality: Luma Ray 2 delivers lifelike textures, smooth camera movements, and dynamic scenes, resulting in more immersive and visually appealing videos. This is a substantial improvement over Luma Ray 1.6, which, while capable, did not achieve the same level of detail or natural motion.

- Video length and resolution: Luma Ray 2 can generate clips up to 10 seconds long at resolutions up to 1080p. Luma Ray 1.6 offered shorter videos at lower resolutions, so Ray 2 leaves more room for detailed, engaging content.

- Workflow efficiency: Luma Ray 2 addresses the slow-motion playback issues that sometimes occurred with Ray 1.6. This streamlines the video generation process, allowing faster and more reliable production of high-quality videos.

What types of inputs does Luma Ray 2 accept (e.g., text-to-video, image-to-video, video-to-video)?

Currently, Luma Ray 2 supports:

- Text-to-Video: Generating videos from descriptive text prompts.

- Image-to-Video: Creating videos that start from a static image.

Video-to-video and editing capabilities are planned for future releases.
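A minimal sketch of how the supported input types map to a generation mode, assuming a tool that accepts an optional prompt, image, or video. The `select_mode` function and its parameter names are hypothetical; the only facts it encodes are the two supported modes and the note that video-to-video is not yet available.

```python
from typing import Optional


def select_mode(prompt: Optional[str] = None,
                image_path: Optional[str] = None,
                video_path: Optional[str] = None) -> str:
    """Map the supplied inputs to a generation mode (hypothetical helper)."""
    if video_path is not None:
        # Video-to-video is described as planned, not yet available.
        raise NotImplementedError("video-to-video is not supported yet")
    if image_path is not None:
        # In this sketch, an image selects image-to-video whether or not
        # a text prompt is also supplied.
        return "image-to-video"
    if prompt and prompt.strip():
        return "text-to-video"
    raise ValueError("provide a text prompt or an image")
```

For example, `select_mode(prompt="Seal whiskers", image_path="seal.png")` returns `"image-to-video"`, while a prompt alone returns `"text-to-video"`.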

Can Luma Ray 2 generate videos from both text and images?

Yes, Luma Ray 2 supports both text-to-video and image-to-video.

What video resolutions and durations does Luma Ray 2 support?

Luma Ray 2 supports the following resolutions and durations:

- Resolutions: 540p, 720p, and 1080p.

- Durations: 5 seconds and 10 seconds.
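Resolution labels such as 720p name only one side of the frame. The sketch below converts a label and an aspect ratio into nominal pixel dimensions, assuming (as is common for video) that the number refers to the short side and that the long side is rounded to an even pixel count. The `nominal_dimensions` helper is hypothetical, and Luma's actual output sizes may differ slightly.

```python
from fractions import Fraction

SUPPORTED_RESOLUTIONS = ("540p", "720p", "1080p")


def nominal_dimensions(resolution: str, aspect_ratio: str) -> tuple[int, int]:
    """Return a nominal (width, height) in pixels for a resolution label.

    ASSUMPTION: the number in the label is the length of the short side.
    """
    if resolution not in SUPPORTED_RESOLUTIONS:
        raise ValueError(f"unsupported resolution: {resolution!r}")
    short_side = int(resolution[:-1])          # "720p" -> 720
    w, h = (int(part) for part in aspect_ratio.split(":"))
    ratio = Fraction(max(w, h), min(w, h))     # long side / short side
    # Scale the short side and round the long side to an even pixel count.
    long_side = round(short_side * ratio / 2) * 2
    return (long_side, short_side) if w >= h else (short_side, long_side)


if __name__ == "__main__":
    for res in SUPPORTED_RESOLUTIONS:
        print(res, nominal_dimensions(res, "16:9"))
```

For 16:9 this gives 960x540, 1280x720, and 1920x1080; for a vertical 9:16 clip at 1080p it gives 1080x1920.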

What is the difference between Luma Ray 2 and Luma Ray 2 Flash?

Luma Ray 2 Flash is a faster and more cost-effective variant of the Luma Ray 2 model, delivering high-quality video generation at three times the speed and one-third the cost of the standard version, making it more accessible to users.
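The speed and cost ratios above translate into simple arithmetic. The sketch below applies the stated factors (3x speed, one-third cost) to a standard Ray 2 estimate. The baseline numbers in the example (90 seconds and 30 credits per clip) and the "credits" unit are hypothetical; real times and prices depend on resolution, duration, and queue load.

```python
from fractions import Fraction

# Ratios stated in this FAQ for Ray 2 Flash relative to standard Ray 2.
FLASH_SPEEDUP = 3                    # "three times the speed"
FLASH_COST_FACTOR = Fraction(1, 3)   # "one-third the cost"


def flash_estimate(standard_seconds: float, standard_cost: float) -> tuple[Fraction, Fraction]:
    """Scale a standard Ray 2 (time, cost) pair to a Flash estimate."""
    seconds = Fraction(standard_seconds) / FLASH_SPEEDUP
    cost = Fraction(standard_cost) * FLASH_COST_FACTOR
    return seconds, cost


def batch_totals(clips: int, per_clip_seconds: float, per_clip_cost: float) -> tuple[Fraction, Fraction]:
    """Total time (clips generated one after another) and total cost for a batch."""
    return clips * Fraction(per_clip_seconds), clips * Fraction(per_clip_cost)


if __name__ == "__main__":
    # Hypothetical baseline: one standard clip takes 90 s and costs 30 credits.
    flash_seconds, flash_cost = flash_estimate(90, 30)
    print("Flash per clip:", flash_seconds, "s,", flash_cost, "credits")
    print("12 Flash drafts:", batch_totals(12, flash_seconds, flash_cost))
```

Under these assumed numbers, each Flash clip takes 30 seconds and costs 10 credits, so a batch of 12 drafts costs the same as 4 standard clips.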

How does Luma Ray 2 handle dynamic camera movements such as POV flight?

Luma Ray 2 can generate dynamic camera movements, including POV (point-of-view) flight. When prompted, it can simulate natural camera motions such as flying, walking, or panning, which gives videos a more cinematic and immersive feel. Because the model understands spatial relationships within a scene, these movements tend to look realistic.

How do I access Luma Ray 2?

You can access Luma Ray 2 through the Luma Dream Machine platform by signing in, subscribing to Luma, and selecting the Luma Ray 2 model in the settings menu after starting a new project.

Alternatively, you can use RunComfy AI Playground, which provides access to Luma Ray 2 along with additional AI tools for greater creative flexibility. After signing in, you receive free credits to try Luma Ray 2 and explore other AI features before deciding whether to subscribe.

What instructions or best practices should I follow when using Luma Ray 2?

Here are some best practices to get the best results from Luma Ray 2:

- Be specific with prompts: The more detailed and descriptive your text or image prompt, the more closely the output will match your vision. For example, instead of writing "city at night," describe the colors, weather, or type of buildings.

- Start with a lower resolution: Begin with 540p or 720p for faster generation times, especially if you're experimenting or trying different inputs.

- Test different camera angles and movements: If you're aiming for dynamic scenes, try specifying camera movements like "POV flight" or "cinematic dolly shot."

- Keep video length in mind: Although Luma Ray 2 supports videos up to 10 seconds long, longer or more complex clips may take longer to render and can show reduced quality. Keep your expectations in line with the clip length you choose.

- Refine prompts with feedback: If the initial result isn't what you wanted, adjust your prompt with clearer wording or additional details.
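The practices above can be combined into a simple workflow: assemble a specific prompt from its parts (subject, setting, lighting, camera movement, style), preview it at a low resolution, then render the final version. This is a sketch under stated assumptions: `PromptSpec` and `draft_then_final` are hypothetical helpers, and joining fields with commas is just one reasonable way to build a descriptive prompt, not a format Luma requires.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical structure for the details a specific prompt should cover."""
    subject: str
    setting: str = ""
    lighting: str = ""
    camera: str = ""   # e.g. "POV flight" or "cinematic dolly shot"
    style: str = ""
    extras: list[str] = field(default_factory=list)

    def render(self) -> str:
        parts = [self.subject, self.setting, self.lighting, self.camera, self.style, *self.extras]
        # Drop empty fields and join the rest into one descriptive prompt.
        return ", ".join(p.strip() for p in parts if p.strip())


def draft_then_final(spec: PromptSpec, final_resolution: str = "1080p",
                     duration: str = "5s") -> list[dict]:
    """Two-pass plan: a quick 540p draft, then the final render with the same prompt."""
    prompt = spec.render()
    return [
        {"prompt": prompt, "resolution": "540p", "duration": duration},
        {"prompt": prompt, "resolution": final_resolution, "duration": duration},
    ]


if __name__ == "__main__":
    spec = PromptSpec(
        subject="a city street at night",
        setting="rain-soaked neon-lit alleys between glass towers",
        lighting="reflections of magenta and teal signs on wet asphalt",
        camera="slow cinematic dolly shot",
    )
    for step in draft_then_final(spec):
        print(step)
```

Keeping the prompt identical between the draft and the final pass means any change you see comes from the resolution, not from a different description; when a draft misses, edit the relevant `PromptSpec` field and draft again.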

Does Luma Ray 2 support anime-style generation or morphing effects in video?

While Luma Ray 2 can create highly realistic videos, its primary focus is photorealistic output. It does support stylized effects and morphing to some extent, so it can produce non-photorealistic styles, including artistic or animated looks. For anime-specific generation or highly abstract morphing effects, results may be less refined than those from models trained specifically for those styles, but creative prompt engineering can still achieve them.

How well does Luma Ray 2 maintain character consistency in image-to-video outputs?

When provided with consistent visual references (such as multiple images of the same character or scene), Luma Ray 2 can generate smooth transitions between frames, maintaining the character's features.

However, for best results, ensure the input image(s) are clear and high-quality, especially if you're working with characters or specific visual elements that need to remain consistent throughout the video. Inconsistent or low-quality reference images can lead to slight discrepancies in the output.

How does Luma AI Ray 2 compare to Kling AI and Runway AI?

Luma Ray 2 offers a creative twist with dynamic, cinematic camera moves and strong close-up prompt interpretation. However, it sometimes falls short in detail and consistency compared to its competitors. For example, Kling AI is known for sharper visuals and more reliable full-body motion, while Runway AI stands out with faster generation and crisp outputs.

If you value creative camera effects, Luma Ray 2 is a fun tool to try, but for consistently detailed results, Kling AI and Runway AI currently lead the pack. You can try models like Kling AI and Runway AI on RunComfy AI Playground.