Runway Gen-4 Turbo

Image to Video

Introduction to Runway Gen-4

Runway Gen-4, released on March 31, 2025, by Runway AI Inc., is an AI video generation model focused on improving visual consistency, motion realism, and prompt alignment. It supports the creation of short video clips featuring coherent characters, environments, and styles. Runway Gen-4 also introduces advancements in natural language understanding and basic physics simulation, aiming to offer more control and reliability for integrating AI-generated content into creative workflows such as live-action, animation, and visual effects.

Discover the Key Features of Runway Gen-4

The subject sits in the driver's seat of a parked car in the forest. She starts the engine and slowly begins to drive forward along the narrow dirt path. Sunlight flickers through the trees as the car moves. The camera remains inside the car, handheld and steady, subtly tracking the subject's profile as she drives. Cinematic live-action, warm forest tones, shallow depth of field.

Consistent Characters

Runway Gen-4 aims to keep characters consistent using just a single reference image. Character details and overall appearance are designed to carry over across different lighting conditions, locations, and stylistic treatments. This helps produce convincing characters and reduces inconsistencies, even in complex cinematic sequences.

A handheld camera orbits around the subject as the sci-fi drone hovers steadily in place. The sandstorm begins to swirl behind it, grains of sand whipped up by strong gusts. Subtle engine vibrations disturb the air below the drone. Live-action, cinematic style. The drone stays consistent in design and color.

Consistent Objects

Runway Gen-4 maintains the appearance of objects and subjects throughout a generated clip, so key elements keep their core visual characteristics and details as the scene unfolds. This reduces visual jitter and unwanted transformations, which matters for narrative work and product visualizations where an object must stay intact within the shot.

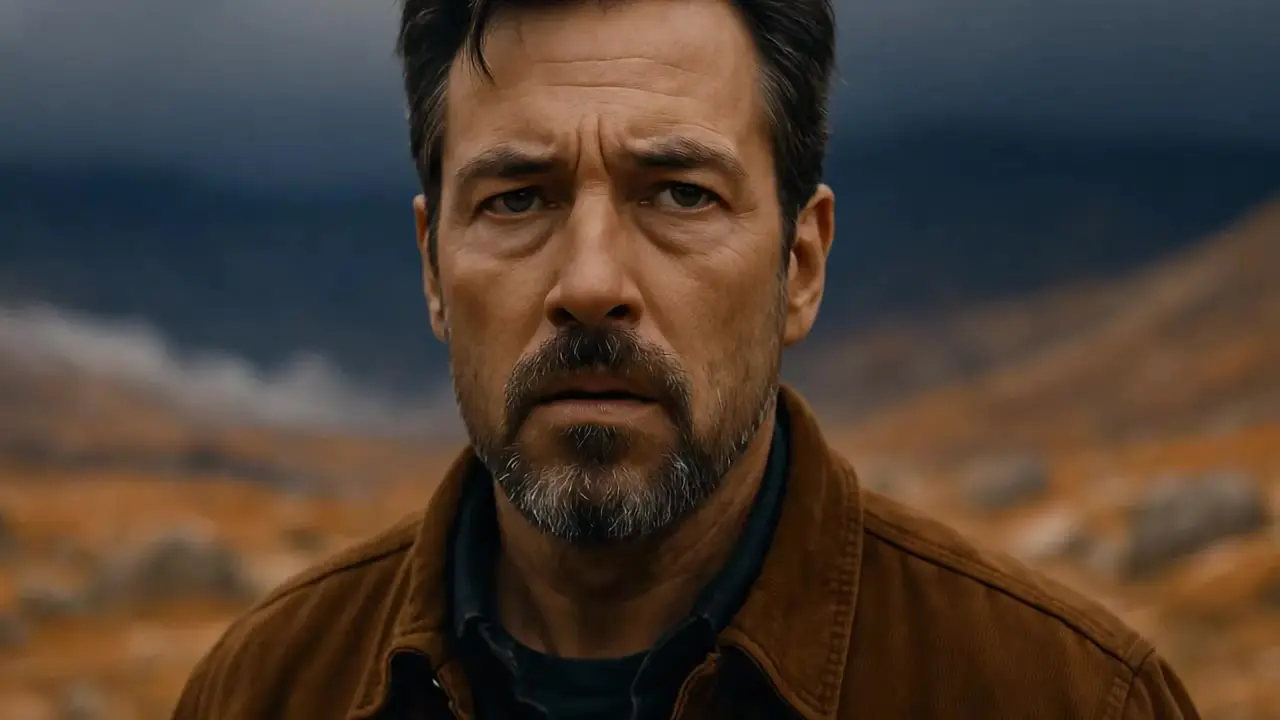

The camera slowly dollies in on the subject's face as he stares ahead in silence. Behind him, faint plumes of smoke drift upward and soft white particles shimmer and rise in the background. The air feels heavy and still, with a hint of something uncanny approaching. Cinematic, grounded live-action.

Get Every Angle of Any Scene

Runway Gen-4 provides enhanced control over scene composition and camera dynamics within generated video. Define your intended shot framing, perspective, and potential camera movements using detailed descriptions, optionally guided by reference inputs. Runway Gen-4 then synthesizes the dynamic scene, striving to maintain visual coherence and adhere closely to the specified composition as the action unfolds. This allows for crafting specific shots with greater directorial precision, delivering high-quality outputs that better match your vision.

A POV shot that glides forward along the road, approaching the large, irregular soap bubble as it floats in mid-air. The camera tilts slightly upward, circling the bubble in a smooth arc as it shimmers with rainbow light. The background of suburban houses and pine trees gently blurs, creating a dreamy, cinematic atmosphere. Handheld movement, soft depth of field, magical realism style.

Production-Ready Video

Runway Gen-4 is designed for production-ready video, combining improved visual quality with stronger language understanding. It can generate highly dynamic visuals with realistic motion while keeping subjects, objects, and artistic style consistent throughout a sequence. Its improved prompt adherence means complex descriptions are interpreted more accurately, producing outputs that more closely match creative intent.

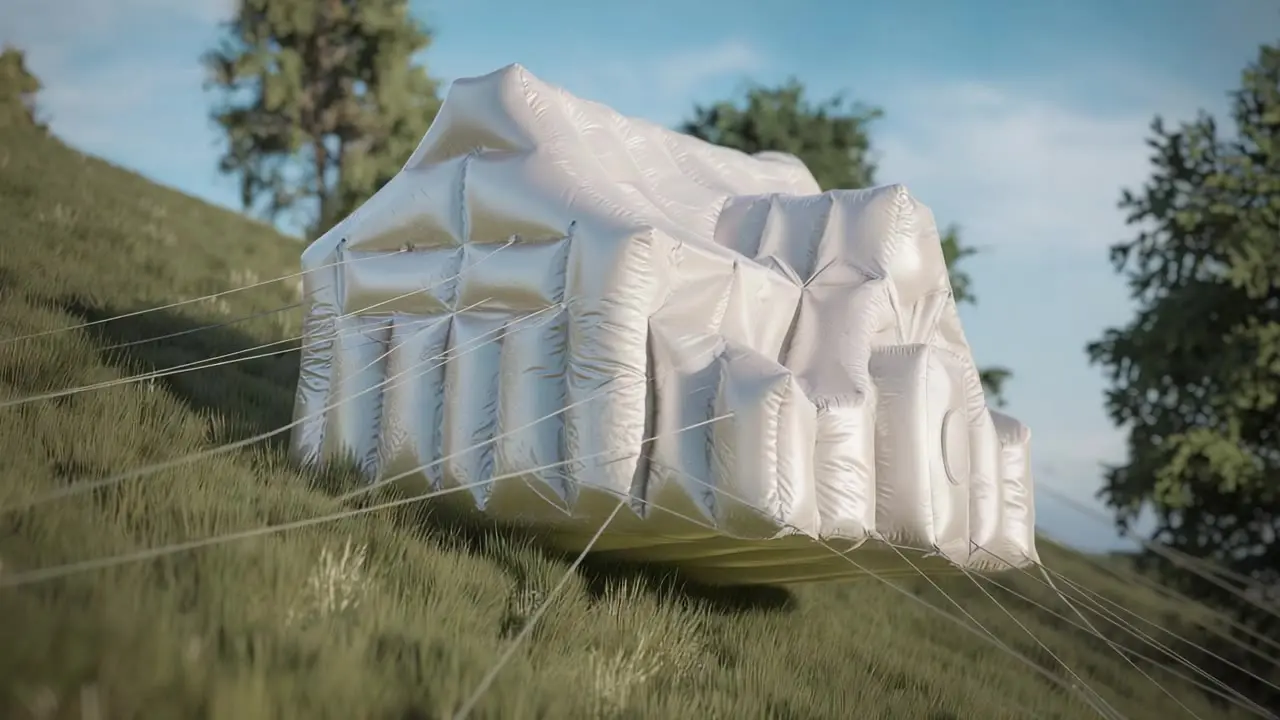

The camera slowly dollies upward as the inflatable silver house begins to rise from the grassy slope. The reflective surface of the structure glistens under sunlight as it lifts, the tension in the tethers visibly increasing. Grasses sway gently beneath. A soft breeze stirs the surrounding trees. The house floats steadily into the air, ropes pulling taut in graceful arcs. POV shifts slightly to track the ascent from below. Cinematic, surreal realism, daylight, smooth camera motion.

Simulate Real-World Physics

Runway Gen-4 improves on earlier visual generative models in how it handles real-world physics. It simulates physical interactions and object behaviors more accurately within generated scenes, so motion and environmental dynamics look more realistic and physically plausible.

The camera remains steady as the man runs toward it through the smoky field. Behind him, flames slowly begin to erupt across the dry hills, spreading along the ridgeline. Embers drift through the air. The cows scatter slightly in fear. The smoke thickens, glowing orange as the fire catches. Cinematic, naturalistic lighting, realistic fire and motion.

Visual Effects

Runway Gen-4 can generate fast, controllable, and flexible visual effects elements designed to integrate with live-action footage, animation, and existing VFX pipelines. This helps keep style and visual quality coherent across a project, making Gen-4 a practical tool for producing adaptable VFX in professional productions.

Frequently Asked Questions

What is Runway Gen-4 and what are its key features?

Runway Gen-4 is the latest iteration of Runway's AI video generation model. Compared to earlier versions like Gen-2 and Gen-3 Alpha, Runway Gen-4 shows improved ability to maintain visual consistency within a single clip—for example, keeping a character or object stable as the camera angle changes or the scene progresses.

The model also includes updates to motion rendering and overall scene coherence. While motion in Runway Gen-4 can appear more fluid than in previous versions, outcomes still vary depending on the complexity of the input and prompt, and occasional inconsistencies remain.

What types of inputs does Runway Gen-4 accept?

Runway Gen-4 requires two inputs: an image (which serves as the base visual and first frame) and a text prompt describing the desired action or motion. The model does not currently support generation from text alone, which limits flexibility compared to some text-to-video tools.

Text prompts can be up to 1,000 characters long. In practice, prompts that focus on motion or camera direction tend to work best, since the image already defines the visual appearance. Both photos and AI-generated images can be used, though the quality of the output is still highly dependent on the clarity of the prompt and the content of the image.
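Since Gen-4 needs both an image and a prompt of at most 1,000 characters, a quick local check before submitting a job can save a failed attempt. The sketch below is illustrative only: `GenerationRequest` and `validate_request` are hypothetical names, not part of any Runway SDK. It also assumes the limit is counted in Python characters (Unicode code points), which may differ from how Runway counts.

```python
from dataclasses import dataclass

# Limit stated in this FAQ: text prompts can be up to 1,000 characters.
# Assumption: counted as Python str length (Unicode code points).
MAX_PROMPT_CHARS = 1000


@dataclass
class GenerationRequest:
    """Hypothetical container for one image-to-video job (not a Runway SDK type)."""
    image_path: str  # the input image, used as the first frame
    prompt: str      # text describing the desired motion or camera direction


def validate_request(req: GenerationRequest) -> list[str]:
    """Return a list of problems; an empty list means the request looks valid."""
    problems = []
    if not req.image_path.strip():
        problems.append("an input image is required (text-only generation is not supported)")
    if not req.prompt.strip():
        problems.append("a text prompt is required")
    if len(req.prompt) > MAX_PROMPT_CHARS:
        problems.append(f"prompt is {len(req.prompt)} characters; the limit is {MAX_PROMPT_CHARS}")
    return problems


# Example: a motion-focused prompt, since the image already defines the look.
req = GenerationRequest(
    "forest_car.png",
    "She starts the engine and drives slowly along the dirt path. "
    "Handheld camera tracks her profile.",
)
print(validate_request(req))  # []
```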

What are Runway Gen-4’s output capabilities (duration, resolution, etc.)?

Runway Gen-4 supports video outputs of 5 or 10 seconds at 24 frames per second. The standard resolution is 1280×720 pixels (720p) in a 16:9 aspect ratio, with other formats like vertical (9:16) and square (1:1) also available.
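The frame count of a clip follows directly from its duration and frame rate (frames = seconds × 24). A minimal sketch of that arithmetic, plus the standard 720p size reduced to its aspect ratio; the helper names are hypothetical:

```python
from fractions import Fraction

FPS = 24                    # frame rate stated above
DURATIONS_S = (5, 10)       # available clip lengths in seconds
WIDTH, HEIGHT = 1280, 720   # standard 720p output


def frame_count(duration_s: int, fps: int = FPS) -> int:
    """Number of frames in a clip: duration times frame rate."""
    return duration_s * fps


def aspect_ratio(width: int, height: int) -> str:
    """Reduce a width/height pair to its simplest ratio, e.g. 1280x720 -> 16:9."""
    r = Fraction(width, height)
    return f"{r.numerator}:{r.denominator}"


for d in DURATIONS_S:
    print(f"{d} s clip -> {frame_count(d)} frames")  # 120 and 240 frames
print(aspect_ratio(WIDTH, HEIGHT))  # 16:9
```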

While Gen-4 delivers higher quality than its predecessors, the limited duration and resolution can be restrictive for more complex storytelling or commercial use. Creating longer sequences still requires stitching together multiple short clips.
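When a sequence must run longer than 10 seconds, it helps to plan how many clips to generate. The sketch below is a hypothetical planning helper, not a Runway feature. It greedily fills the target length with 10-second clips and tops up with one 5- or 10-second clip. It assumes any excess is trimmed in editing and ignores transitions or overlaps between clips.

```python
def plan_clips(target_s: float) -> list[int]:
    """Hypothetical helper: choose 10 s and 5 s clips that cover target_s seconds.

    Uses as many 10 s clips as fit, then adds one 5 s clip if the remainder is
    at most 5 s, or one more 10 s clip otherwise. The total may exceed target_s;
    the excess is assumed to be trimmed in editing.
    """
    if target_s <= 0:
        return []
    tens = int(target_s // 10)
    remainder = target_s - tens * 10
    plan = [10] * tens
    if remainder > 5:
        plan.append(10)
    elif remainder > 0:
        plan.append(5)
    return plan


for target in (12, 35, 58):
    plan = plan_clips(target)
    print(f"{target} s -> {len(plan)} clips {plan}, {sum(plan)} s total")
```

This keeps the number of generations low for a given length. In practice, complex scenes may still need several attempts per clip.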

What are the main use cases for Runway Gen-4?

Runway Gen-4 is particularly suited for concept development, visual experimentation, and prototyping. It has potential applications in storyboarding, music video production, visual art projects, and other creative workflows where short, stylized clips are sufficient.

How does Runway Gen-4 compare to earlier versions like Gen-2 or Gen-3?

Runway Gen-4 shows improvements over earlier models such as Gen-2 and Gen-3 Alpha, particularly in visual consistency within individual clips and smoother motion generation. It also aligns better with user prompts, producing results that more closely reflect the described actions or camera movements.

What are the limitations of Runway Gen-4?

Despite advancements, Runway Gen-4 has several limitations to be aware of:

Clip Duration: Each video is limited to 5 or 10 seconds. Producing longer content requires stitching clips together, which takes additional time and planning.

Image Input Requirement: You must provide an input image to start generation. Gen-4 does not support text-only video generation, which adds an extra step and can limit spontaneity.

Cross-Scene Consistency: While Gen-4 performs reasonably well within single clips, keeping a character or visual style consistent across multiple clips remains challenging.

Visual Artifacts: As with many generative models, unexpected visual glitches or misinterpretations can occur. Objects may be distorted, or prompts may be only partially followed. Complex scenes can require several attempts to achieve a usable result.

How can I generate consistent character videos with Runway Gen-4?

Currently, Runway Gen-4 can often preserve a character’s appearance within a single short clip. However, it lacks a reliable solution for maintaining that consistency across multiple video clips. To improve continuity, creators may need to prepare consistent reference images for each scene, but even then, achieving a stable look across several clips remains challenging. Until dedicated features are introduced, maintaining narrative consistency will likely require manual workarounds and post-production editing.