Hunyuan Video

Video Model Hunyuan Video

Text to Video

Image to Video

Introduction of Hunyuan Video

Hunyuan Video is an open-source AI model developed by Tencent and released in December 2024. It transforms detailed text prompts into high-quality videos, featuring smooth scene transitions, natural cuts, and consistent motion that turn creative ideas into engaging visual stories.

Discover the Key Features of Hunyuan Video

Close-up, A little girl wearing a red hoodie in winter strikes a match. The sky is dark, there is a layer of snow on the ground, and it is still snowing lightly. The flame of the match flickers, illuminating the girl's face intermittently.

High-Quality Cinematic Videos with Smooth Transitions

Hunyuan Video generates high-quality videos with a structured cinematic approach by leveraging a spatial-temporally compressed latent space. The model utilizes a Causal 3D VAE to encode and decode video frames while maintaining smooth scene transitions. With precise control over camera movement, lighting, and composition, Hunyuan Video ensures that outputs align with professional cinematic standards while preserving consistency across generated frames

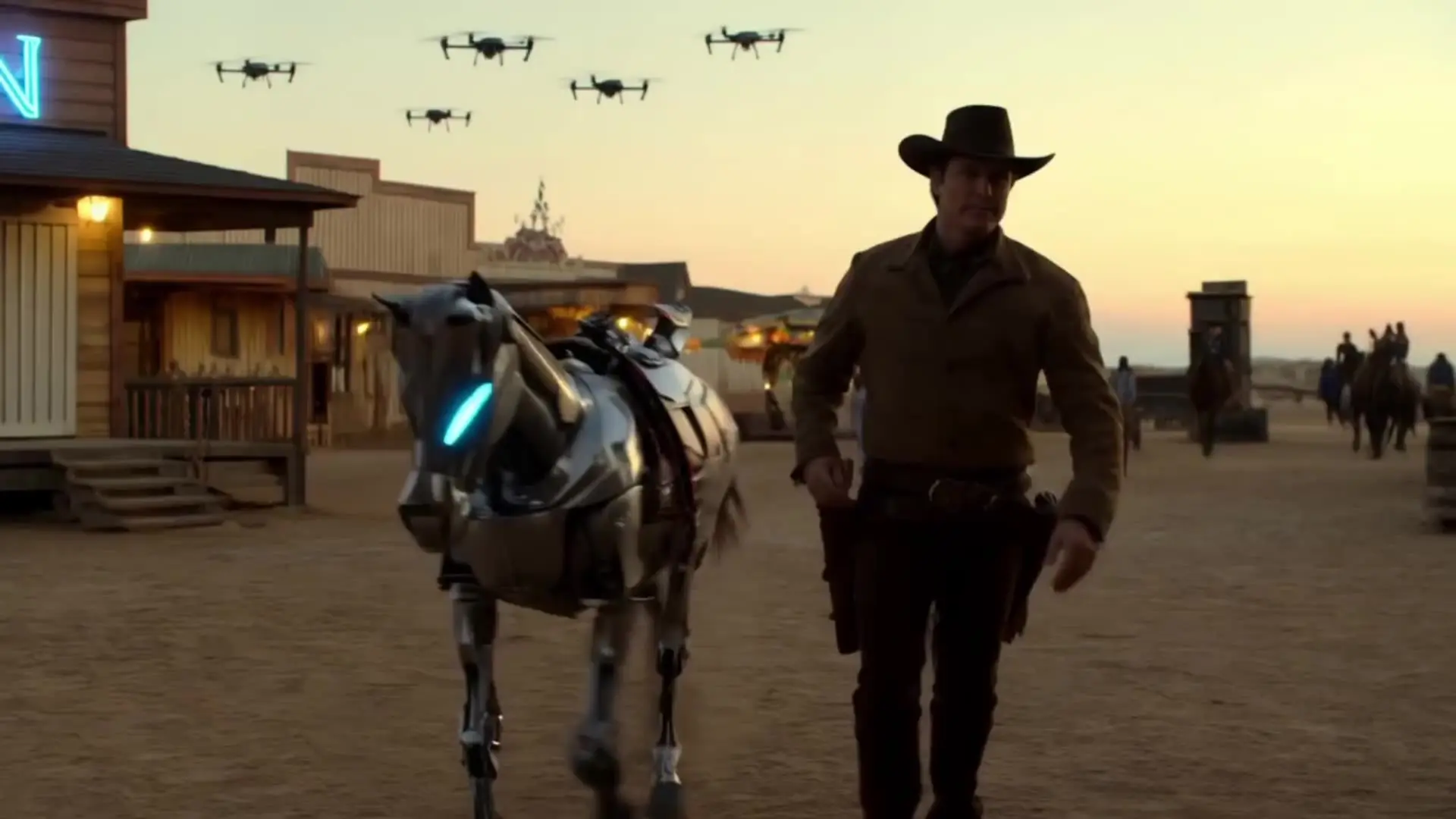

Close-up: A worn leather boot presses into cracked desert soil. The camera pans up to reveal a bounty hunter in a dusty duster coat, face hidden beneath a wide-brimmed hat. He lights a cigarette as the wind howls across the empty town. Behind him, a crashed drone smolders. The shot lingers on his silhouette as he walks into the blinding sunset. High-contrast lighting, cinematic widescreen ratio, Western-noir tone.

Seamless Scene Changes and Natural Camera Movements

Hunyuan Video incorporates semantic scene-cut capabilities, automatically segmenting videos into coherent shots based on motion and visual structure. By leveraging its transformer-based architecture and spatial-temporal modeling, the system enables smooth transitions between scenes without abrupt visual disruptions. Additionally, predefined camera movement controls allow for refined storytelling, aligning generated content with input descriptions for better coherence and realism.

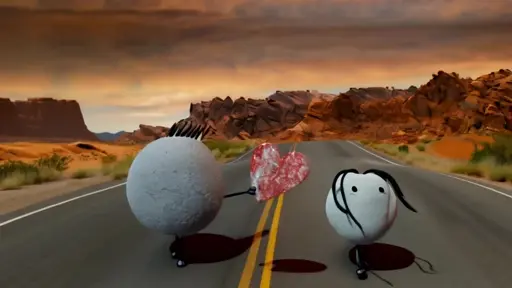

A lone gunslinger in a black trench coat walks slowly down an empty desert highway, his heavy boots crunching against the pavement. The wind howls softly, dust swirling in the distance. He comes to a stop, raising his pistol with steady precision. A single gunshot echoes through the barren landscape. The camera holds the moment, the smoke drifting from the barrel as the neon lights of a distant roadside bar flicker in the background. Cinematic western-noir, slow deliberate movement, tense atmosphere.

Fluid Motion and Precise Action Control

Hunyuan Video is optimized for generating videos with continuous actions by employing progressive video-image joint training. The model maintains motion dynamics across multiple frames, ensuring that sequential actions remain fluid and logically structured. Through its Flow Matching framework, Hunyuan Video predicts motion trajectories with reduced artifacts, making it well-suited for generating animations and long-form videos with stable movement.

The camera drifts slowly forward through the circular opening of a damaged space module. Ahead, a lone astronaut floats just beyond, framed by the curve of the structure. His reflection glimmers in the visor, Earth turning silently behind. He steadies himself, then extends one arm toward a loose cable swaying gently in zero gravity. The camera glides closer, subtly rotating to reveal more of the surrounding machinery. A faint radio beep pulses as static flickers across his comms panel. The shot lingers for a beat, then softly fades out. Cinematic weightlessness, immersive depth, quiet tension.

Creative Concept Fusion and Scene Setup

Hunyuan Video demonstrates strong concept generalization capabilities, allowing it to generate complex scenes based on diverse input prompts. Using a multimodal large language model text encoder, it interprets and synthesizes various visual concepts while preserving semantic coherence. This enables combining multiple elements—such as distinct environments, objects, and artistic styles—into a single, well-integrated video output without extensive manual adjustments.

Related Playgrounds

Frequently Asked Questions

What is Hunyuan Video and what can it do?

Hunyuan Video is an open-source AI video generation model developed by Tencent, boasting 13 billion parameters. It transforms detailed text prompts into high-quality videos, delivering smooth scene transitions, realistic cuts, and consistent motion. This makes Hunyuan Video ideal for crafting compelling visual narratives.

How to use Hunyuan video?

Hunyuan Video is typically used through ComfyUI (or similar interfaces) to generate videos from text (T2V) or images (I2V). RunComfy offers several workflows for this, including the Hunyuan Text-to-Video workflow, Hunyuan Image-to-Video workflow, Hunyuan Video-to-Video workflow, and Hunyuan LoRA workflows.

If you're not using ComfyUI, you can still experience Hunyuan Video effortlessly on RunComfy AI Playground, which offers a user-friendly interface—no setup required!

Where can I use Hunyuan Video for free?

You can try Hunyuan Video for free on the RunComfy AI Playground, where you're given some free credits to explore Hunyuan Video tools along with other AI models and workflows.

How to make Hunyuan video longer in ComfyUI?

Hunyuan video duration is determined by the "num_frames" and "frame rate" parameters, with the duration calculated as num_frames divided by frame rate. For example, if num_frames is set to 85 and the frame rate is set to 16 fps, the video will be approximately 5 seconds long.

To generate a longer video, increase the num_frames value while keeping the frame rate constant, or adjust both parameters to balance duration and smoothness. Keep in mind that longer videos require more computational resources and VRAM.

RunComfy provides a variety of Hunyuan Video workflows for you to explore, including Hunyuan Text-to-Video workflow, Hunyuan Image-to-Video workflow, Hunyuan Video-to-Video workflow, and Hunyuan LoRA workflows.

What is the longest video I can produce with Hunyuan?

The maximum video length you can produce with HunyuanVideo is 129 frames. At 24 fps, this results in approximately 5 seconds of video. If you lower the frame rate to 16 fps, the maximum duration extends to approximately 8 seconds.

How to install Hunyuan video?

1. Install Hunyuan Video Locally Step 1: Install or update to the latest version of ComfyUI. Step 2: Download the required model files (diffusion model, text encoders, VAE) from official sources like Tencent’s GitHub or Hugging Face. Step 3: Place the downloaded files in their correct directories (refer to installation guides for folder structure). Step 4: Download and load the Hunyuan Video workflow JSON file into ComfyUI. Step 5: Install any missing custom nodes using ComfyUI Manager if required. Step 6: Restart ComfyUI and generate a test video to confirm everything works properly.

2. Use Hunyuan Video online via RunComfy AI Playground You can run Hunyuan Video online without installation via the RunComfy AI Playground, where you can access Hunyuan along with other AI tools.

3. Use Hunyuan Video online via RunComfy ComfyUI For a seamless workflow experience in ComfyUI, explore the following ready-to-use workflows on RunComfy: Hunyuan Text-to-Video workflow Hunyuan Image-to-Video workflow Hunyuan Video-to-Video workflow Hunyuan LoRA workflows

How much VRAM does Hunyuan AI video model require?

The VRAM requirements for Hunyuan AI Video vary depending on model configuration, output length, and quality. A minimum of 10–12 GB VRAM is needed for basic workflows, while 16 GB or more is recommended for smoother performance and higher-quality outputs, especially for longer videos. Exact requirements may vary based on specific settings and model variants.

Where to put Hunyuan LoRA?

Hunyuan LoRA files should be placed in the dedicated LoRA folder of your installation. In many local setups using ComfyUI or Stable Diffusion, this is typically a subfolder within your models directory (e.g., “models/lora”). This ensures that the system automatically detects and loads the LoRA files.

How to prompt for Hunyuan AI?

Creating effective prompts is crucial for generating high-quality videos with Hunyuan AI. A well-crafted prompt typically includes the following elements:

- Subject: Clearly define the main focus of your video. For example, "A young woman with flowing red hair" or "A sleek electric sports car."

- Scene: Describe the environment where the action takes place, such as "In a neon-lit cyberpunk cityscape" or "Amidst a snow-covered forest at dawn."

- Motion: Specify how the subject moves or interacts within the scene, like "Gracefully dancing through falling autumn leaves" or "Rapidly accelerating along a coastal highway."

- Camera Movement: Indicate how the camera should capture the action, for instance, "Slow upward tilt revealing the cityscape" or "Smooth tracking shot following the subject."

- Atmosphere: Set the emotional tone or mood of the video, using descriptions like "Mysterious and ethereal atmosphere" or "Energetic and vibrant mood."

- Lighting: Define the lighting conditions to enhance the visual appeal, such as "Soft, warm sunlight filtering through trees" or "Sharp, contrasting shadows from street lights."

- Shot Composition: Describe how elements should be arranged in the frame, like "Close-up shot focusing on emotional expression" or "Wide landscape shot emphasizing scale." By integrating these components, you provide Hunyuan AI with a comprehensive guide to generate the desired video.

What is SkyReels Hunyuan?

Skyreels Hunyuan is a specialized variant of the Hunyuan video model, designed for cinematic and stylized video generation. Fine-tuned on over 10 million high-quality film and television clips from the Hunyuan base model, Skyreels excels at producing realistic human movements and expressions. Experience Skyreels AI's capabilities firsthand and start creating with Skyreels here!

How does Hunyuan Video handle image-to-video tasks?

Hunyuan Video is primarily a text-to-video (T2V) model developed by Tencent, designed to generate high-quality videos from textual descriptions. To expand its capabilities, Tencent introduced HunyuanVideo-I2V, an image-to-video (I2V) extension that transforms static images into dynamic videos. This extension employs a token replacement technique to effectively reconstruct and incorporate reference image information into the video generation process.

How to use Hunyuan I2V in ComfyUI?

Here's a detailed tutorial on how to use Hunyuan I2V in ComfyUI

What is Hunyuan-DiT?

Hunyuan-DiT is a diffusion transformer variant focusing on text-to-image tasks. It shares core technology with Hunyuan Video, utilizing similar transformer-based methods to merge text or image inputs with video generation, providing a unified approach across modalities.

Does Hunyuan Video support 3D content creation?

Yes, Hunyuan Video supports 3D content creation. Tencent has expanded its AI capabilities by releasing tools that convert text and images into 3D visuals. These open-source models, based on Hunyuan3D-2.0 technology, can generate high-quality 3D visuals rapidly, enhancing the scope of creative projects. For a seamless experience in creating 3D content from static images, you can utilize the Hunyuan3D-2 Workflow through RunComfy’s ComfyUI platform.

How to install Tencent Hunyuan3D-2 in ComfyUI

You can install it locally within ComfyUI by ensuring you have the latest version of ComfyUI, then downloading the required model files and the Hunyuan3D-2 workflow JSON from Tencent’s official sources. After placing these files in their designated folders and installing any missing custom nodes via ComfyUI Manager, simply restart ComfyUI to test your setup. Alternatively, you can use the online Hunyuan3D-2 workflow at RunComfy, a hassle-free, ready-to-use solution for generating 3D assets from images. This online workflow lets you explore the full potential of Hunyuan3D-2 without the need for local installation or setup.

How to run Hunyuan Video locally or on a MacBook?

To run Hunyuan Video locally on your system, you’ll need to download the official model weights from Tencent’s GitHub repository and set it up within your local ComfyUI environment. If you're using a MacBook, ensure that your system meets the hardware and software requirements to handle the model effectively.

Alternatively, you can run Hunyuan Video online without the need for installation via RunComfy AI Playground. It allows you to access Hunyuan and many other AI tools directly, offering a more convenient option if you prefer not to set up the model locally.

What is the Hunyuan Video wrapper and how to use it?

The Hunyuan Video wrapper is a ComfyUI node developed by kijai, enabling seamless integration of the Hunyuan Video model within ComfyUI. To generate videos using Hunyuan Video model, you can explore various workflows, such as: Hunyuan Text-to-Video workflow Hunyuan Image-to-Video workflow Hunyuan Video-to-Video workflow Hunyuan LoRA workflows

How to use Hunyuan Video with ComfyUI?

Explore Hunyuan Video in ComfyUI with these ready-to-use workflows. Each workflow comes pre-configured and includes a detailed guide to help you get started. Simply choose the one that fits your needs: Hunyuan Text-to-Video workflow Hunyuan Image-to-Video workflow Hunyuan Video-to-Video workflow Hunyuan LoRA workflows