Wan 2.1

Introduction of Wan 2.1

Wan 2.1 is an open-source video generation model from Wan-AI, released in February 2025. It supports text-to-video, image-to-video, and video editing, and is built to handle complex motion, physically plausible scenes, cinematic quality, and built-in visual effects.

Discover the Key Features of Wan 2.1

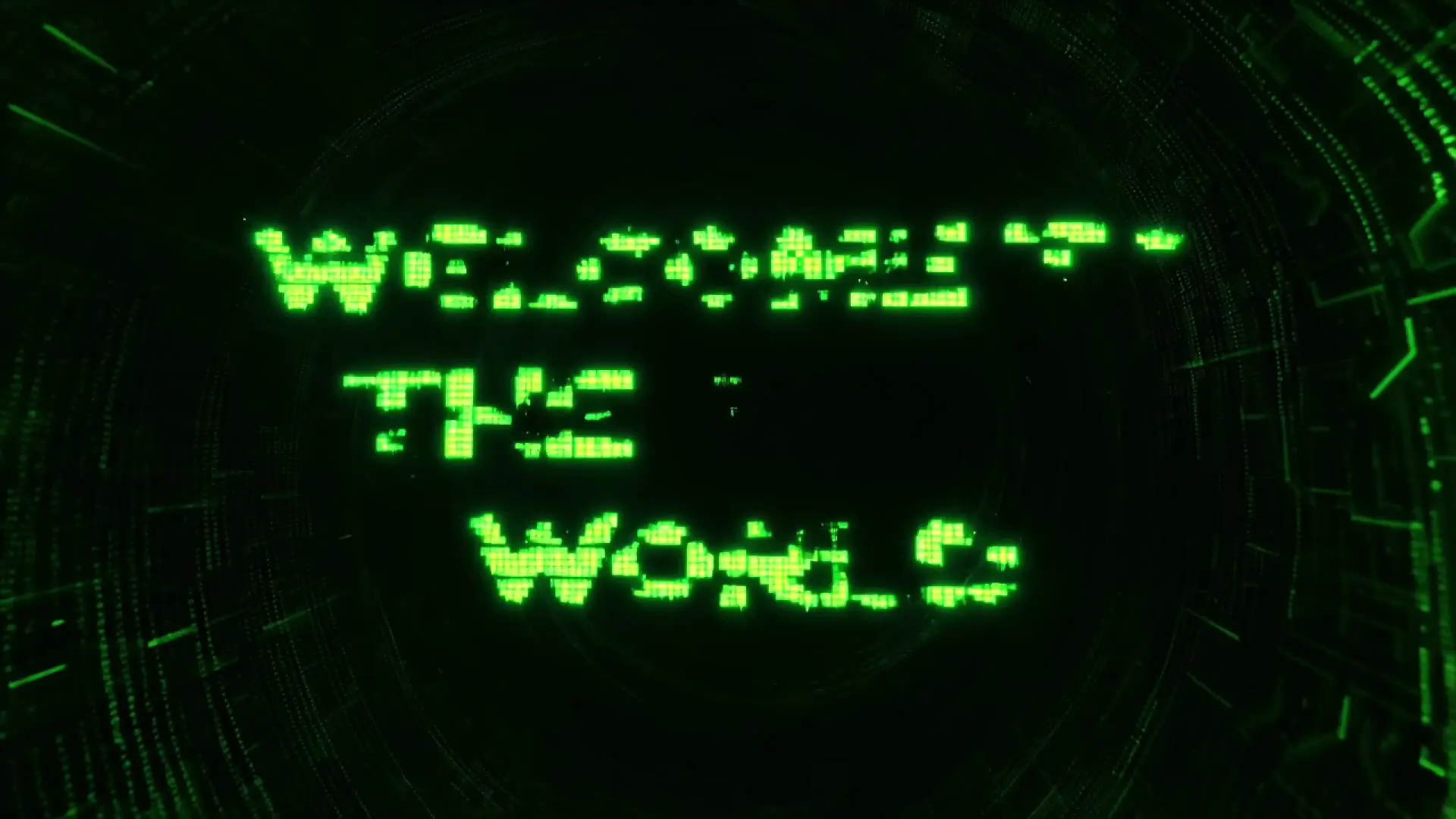

A haunting title sequence with distressed, flickering typewriter-style text forming the words 'THEY ARE WATCHING' on a textured, grainy black background. The letters jitter as they appear, as if typed by an unseen force. Ink stains and scratches flash momentarily before fading away. The eerie, flickering glow of an old fluorescent bulb casts shadows across the scene. The sound of faint typewriter clicks and distant whispers adds to the unsettling atmosphere, evoking a sense of mystery and unease.

Visual Effects

Embed visual effects directly into generated content. Wan 2.1 is presented as the first video model to generate both Chinese and English text in videos, and it integrates particle systems, dynamic typography, and environmental hybrids (e.g., surreal light trails, morphing landscapes) without post-production. It layers effects while preserving scene coherence, which makes it a good fit for title sequences, supernatural elements, and stylized transitions that demand technical finesse.

A bassist plays a low groove in a dimly lit studio. Each pluck causes the string to oscillate dynamically, reacting to tension and damping naturally over time.

Complex Motion

Wan 2.1 masters complex motion synthesis with industry-leading fluidity. Its algorithms decode intricate actions—from rapid sports sequences to organic wildlife movements—while maintaining biomechanical accuracy. Wan 2.1’s temporal consistency ensures smooth transitions between frames, whether capturing the grace of a dance routine or the raw power of dynamic athletic performances.

A collection of vibrant woven fabrics sways gently in a light breeze.

Following Physical Laws

Groundbreaking physics simulation sets Wan 2.1 apart. Wan 2.1’s neural engine replicates real-world interactions with uncanny precision, from liquid viscosity to material deformation. Objects obey gravity, light refracts authentically, and textures respond to environmental forces, making even fantastical scenarios feel anchored in tangible reality.

A stormy harbor at night, heavy rain pours as powerful floodlights cut through the mist. The restless sea crashes against the docks, reflecting golden and white lights. A lone man in the foreground grips an umbrella, taking slow, deliberate steps forward as wind and rain lash against him. His silhouette moves subtly against the backdrop of swaying ships, their flickering lights adding an eerie sense of mystery.

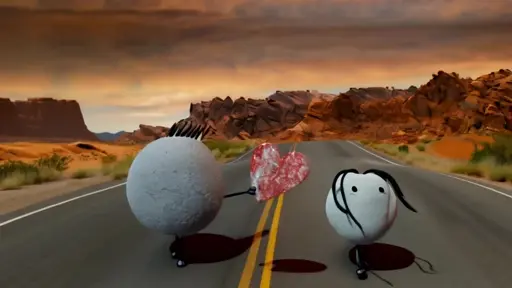

Cinematic Quality

Elevate productions with multi-style adaptability. Wan 2.1 natively supports diverse aesthetics—3D animation, tilt-shift miniatures, ink-art abstraction, or hyper-real close-ups. Wan 2.1’s lighting engine mimics professional cinematography, dynamically adjusting shadows, depth of field, and color grading to match target styles, from indie film grit to Pixar-esque polish.

Frequently Asked Questions

What is Wan 2.1?

Wan 2.1 is Alibaba's open-source AI video model, designed to create high-quality video content. It uses diffusion techniques to generate realistic motion and can render text in both English and Chinese. The model family includes several versions:

- T2V-14B: A text-to-video model (14B parameters) that produces detailed 480P/720P videos but requires more VRAM.

- T2V-1.3B: A lighter text-to-video model (1.3B parameters) optimized for consumer GPUs (~8GB VRAM), ideal for efficient 480P generation.

- I2V-14B-720P: An image-to-video model that converts still images into 720P videos with smooth, professional-grade motion.

- I2V-14B-480P: Similar to the 720P version but tailored for 480P output, reducing hardware load while maintaining quality.

How to use Wan 2.1?

- Using Wan 2.1 online via RunComfy AI Playground: Visit the RunComfy AI Playground, select the Wan 2.1 AI playground, and enter a text prompt or upload an image. Adjust settings such as resolution and duration, then start the video generation. When it finishes, you can preview and download your video.

- Using Wan 2.1 online via RunComfy ComfyUI: Visit the RunComfy Wan 2.1 Workflow page. It provides a ready-to-use workflow with all necessary environments and models pre-configured, so you can create videos from text prompts or images with minimal setup.

- Using Wan 2.1 locally:

  - Clone the Wan 2.1 repository from GitHub.

  - Install dependencies and download the appropriate model weights (T2V-14B, T2V-1.3B, I2V-14B-720P, or I2V-14B-480P).

  - Use the provided command-line scripts (e.g., generate.py) to generate videos.
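As a rough illustration of the last local step, the sketch below assembles a text-to-video command line for generate.py in Python without running it. The flag names (`--task`, `--size`, `--ckpt_dir`, `--prompt`) and the `832*480` size follow the upstream repository's README as we understand it; the helper name `build_t2v_command` and the checkpoint directory are assumptions for illustration, so check them against your own checkout.

```python
import shlex


def build_t2v_command(prompt, ckpt_dir="./Wan2.1-T2V-1.3B",
                      task="t2v-1.3B", size="832*480"):
    """Return the argument list for a text-to-video run of generate.py.

    The checkpoint directory is a placeholder: point it at wherever
    you downloaded the model weights.
    """
    return [
        "python", "generate.py",
        "--task", task,
        "--size", size,
        "--ckpt_dir", ckpt_dir,
        "--prompt", prompt,
    ]


cmd = build_t2v_command(
    "A collection of vibrant woven fabrics sways gently in a light breeze"
)
# Print a shell-safe version of the command for copy and paste.
print(shlex.join(cmd))
```

To actually launch generation, the same list could be passed to `subprocess.run(cmd, check=True)` from the root of the cloned repository.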

How to run Wan 2.1?

- Using Wan 2.1 via RunComfy AI Playground:

Visit the RunComfy AI Playground and log in. After accessing your account, select the Wan 2.1 model. For Text-to-Video (T2V) generation, input your descriptive text prompt. For Image-to-Video (I2V) generation, upload your base image and optionally add a guiding text prompt. Configure your video settings, such as resolution (480p or 720p) and duration, then initiate the video generation process. Once completed, you can preview and download your video.

- Using Wan 2.1 via RunComfy ComfyUI:

Choose either the Wan 2.1 Workflow or the Wan 2.1 LoRA workflow depending on your needs and log in. The ComfyUI interface allows easy customization. Enter a text prompt or upload an image, or apply LoRA models to adjust style, then set your video preferences. Once you're ready, start the video generation and download the final video when it's done.

How to use LoRA in Wan 2.1?

LoRA allows you to fine-tune the Wan 2.1 video model with extra parameters to customize style, motion, or other artistic details without retraining the entire model.

- RunComfy AI Playground: Wan 2.1 LoRA will soon be available on the RunComfy AI Playground.

- RunComfy ComfyUI: You can use Wan 2.1 LoRA in ComfyUI on this page: Wan 2.1 LoRA Customizable AI Video Generation. The environment is pre-set, with Wan 2.1 LoRA models ready for use, and you can easily upload your own Wan 2.1 LoRA models as well.

How to train Wan 2.1 LoRA?

Training a Wan 2.1 LoRA model follows a process similar to LoRA training for other diffusion models:

- Dataset Preparation: Collect a set of high-quality images (or short video clips, if training for motion) and create matching text files that describe each image. It’s important to use a consistent trigger word in all captions so that the model learns the intended concept.

- Environment & Configuration: Use a training framework such as diffusion-pipe (step-by-step guides are available on tutorial sites). You configure a TOML file (for example, a modified wan_video.toml) with settings such as learning rate (typically around 3e-05), number of epochs, network rank (e.g., 32), and other advanced parameters.

- Running Training: With your dataset in place and the configuration file updated, you launch the training script (often with deepspeed for multi-GPU setups) to fine-tune only the additional LoRA parameters on top of the base Wan 2.1 model.

- Post-Training: Once training completes, the resulting LoRA checkpoint (saved as a .safetensors file) can then be loaded into your generation workflows.
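Before launching training, it can help to sanity-check the dataset described in the Dataset Preparation step. The sketch below is a hypothetical helper (not part of diffusion-pipe or Wan 2.1) that assumes each image sits next to a same-named `.txt` caption file, and reports images with no caption as well as captions missing the trigger word.

```python
from pathlib import Path

# Image extensions to check; extend this set for your own dataset.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def check_captions(dataset_dir, trigger_word):
    """Return (missing, untagged).

    missing:  image files that have no matching .txt caption file
    untagged: caption files that do not contain the trigger word
    """
    missing, untagged = [], []
    for image in sorted(Path(dataset_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        caption = image.with_suffix(".txt")
        if not caption.exists():
            missing.append(image.name)
            continue
        text = caption.read_text(encoding="utf-8").lower()
        if trigger_word.lower() not in text:
            untagged.append(caption.name)
    return missing, untagged
```

Calling `check_captions("my_dataset", "my_trigger")` with your own folder and trigger word returns two lists, both of which should be empty before training starts.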

Where to find LoRA for Wan 2.1?

Community-created LoRA models for Wan 2.1 are available on Hugging Face. For example: Wan2.1 14B 480p I2V LoRAs

How much VRAM does Wan 2.1 use?

The Wan 2.1 14B models (including both T2V-14B and I2V-14B) typically require a high-end GPU—such as an NVIDIA RTX 4090—to efficiently generate high-resolution video content. Under standard settings, these models are used to produce 5-second 720p videos; however, with optimizations like model offloading and quantization, they can generate up to 8-second 480p videos using approximately 12 GB of VRAM.

In contrast, the Wan 2.1 T2V-1.3B model is more resource-efficient, requiring around 8.19 GB of VRAM, which makes it well suited to consumer-grade GPUs. It generates a 5-second 480p video on an RTX 4090 in about 4 minutes (without additional optimizations), trading lower VRAM usage for lower output resolution than the 14B models.

Which Wan 2.1 model can be used on the RTX 3090?

The NVIDIA RTX 3090, with its 24 GB of VRAM, is well-suited for running the Wan 2.1 T2V-1.3B model in inference mode. This version typically uses around 8.19 GB of VRAM during inference, making it compatible with the RTX 3090's memory capacity.

However, running the more demanding Wan 2.1 T2V-14B model on the RTX 3090 may pose challenges. While the GPU’s 24 GB of VRAM is substantial, users have reported that generating videos with the 14B model requires significant memory and processing power. Some have managed to run the 14B model on GPUs with as little as 10 GB of VRAM, but this often involves trade-offs in performance and may not be practical for all users.

What hardware is needed to run Wan 2.1 video?

The hardware requirements for Wan 2.1 AI video vary depending on the model. The T2V-1.3B version is optimized for efficiency and works well on consumer GPUs with around 8GB of VRAM, producing 480p videos quickly. On the other hand, the T2V-14B model offers higher-quality 720p videos but requires more VRAM to handle its 14 billion parameters.
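The VRAM figures quoted in this FAQ can be turned into a rough rule of thumb. The sketch below is only that: the thresholds (8.19 GB for T2V-1.3B, about 12 GB for the 14B models with offloading and quantization, 24 GB for a 4090-class card) come from the answers above, and the function name `suggest_variant` is made up for illustration. Real memory use depends on resolution, clip length, and settings.

```python
def suggest_variant(vram_gb):
    """Suggest a Wan 2.1 text-to-video variant for a given amount of VRAM.

    Thresholds are rough figures quoted in this FAQ, not guarantees.
    Returns None when even the 1.3B model is unlikely to fit.
    """
    if vram_gb >= 24:
        return "T2V-14B"
    if vram_gb >= 12:
        return "T2V-14B with offloading and quantization (480p)"
    if vram_gb >= 8.19:
        return "T2V-1.3B"
    return None


for gb in (24, 16, 10, 6):
    print(gb, "GB ->", suggest_variant(gb))
```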

If you want to try Wan 2.1 AI video without investing in high-end hardware, you can use the RunComfy AI Playground, which offers free credits and an online environment to explore Wan 2.1 and many other AI tools.

How to cheaply run Wan 2.1 on cloud?

To run Wan 2.1 cost-effectively in the cloud, RunComfy offers two primary methods:

1. RunComfy AI Playground: This platform allows you to run Wan 2.1 along with a variety of AI tools. New users receive free credits, enabling them to explore and experiment without initial investment.

2. RunComfy ComfyUI: For a more streamlined experience, RunComfy provides a pre-configured ComfyUI workflow for Wan 2.1 and Wan 2.1 LoRA. All the necessary environments and models are set up, so you can start generating videos right away after logging in.

Additionally, for further cost savings, you can use the more efficient 1.3B model with optimization techniques such as quantization or model offloading (using flags like --offload_model True) to reduce VRAM usage and lower operational costs.
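As a small sketch of the offloading option mentioned above, the helper below appends memory-saving flags to an existing generate.py argument list. `--offload_model True` is the flag named in this answer; `--t5_cpu` (keeping the text encoder on the CPU) is taken from the upstream README as we understand it and should be verified against your version. The helper name `with_low_vram_flags` and the checkpoint path are hypothetical.

```python
def with_low_vram_flags(cmd, t5_on_cpu=True):
    """Return a copy of cmd with memory-saving flags appended."""
    extra = ["--offload_model", "True"]
    if t5_on_cpu:
        # Assumed upstream flag: run the T5 text encoder on the CPU.
        extra.append("--t5_cpu")
    return list(cmd) + extra


base = [
    "python", "generate.py",
    "--task", "t2v-1.3B",
    "--size", "832*480",
    "--ckpt_dir", "./Wan2.1-T2V-1.3B",  # placeholder path
    "--prompt", "A stormy harbor at night",
]
print(" ".join(with_low_vram_flags(base)))
```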

How to use Wan 2.1 AI to create image-to-video?

Wan 2.1 supports both text-to-video and image-to-video (I2V) generation. To create a video from an image, provide a still image along with a text prompt describing the desired animation or transformation. The model then animates the image according to the prompt.

- Locally: Run Wan 2.1 from the command line with the flag --task i2v-14B and specify the image path (e.g., --image examples/i2v_input.JPG) along with your prompt.

- RunComfy ComfyUI: Use the Wan 2.1 workflow through RunComfy ComfyUI for seamless image-to-video generation.

- RunComfy Playground: Simply select the image-to-video mode to get started.
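For the local route, the sketch below builds an image-to-video command line in Python without executing it. It assumes generate.py takes double-dash long options (`--task`, `--image`, `--size`, `--ckpt_dir`, `--prompt`) as in the upstream README; the helper name `build_i2v_command`, the checkpoint directory, and the default size are assumptions to adapt to your setup.

```python
import shlex


def build_i2v_command(image_path, prompt,
                      ckpt_dir="./Wan2.1-I2V-14B-480P", size="832*480"):
    """Return the argument list for an image-to-video run of generate.py.

    The checkpoint directory is a placeholder for your downloaded weights.
    """
    return [
        "python", "generate.py",
        "--task", "i2v-14B",
        "--size", size,
        "--ckpt_dir", ckpt_dir,
        "--image", image_path,
        "--prompt", prompt,
    ]


cmd = build_i2v_command(
    "examples/i2v_input.JPG",
    "The subject slowly turns toward the camera",
)
print(shlex.join(cmd))
```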

What is the max length you can generate with Wan 2.1?

The default, and effectively maximum, video length for Wan 2.1 is 81 frames. At a typical frame rate of around 16 FPS, that gives roughly a 5-second clip.

The model's design requires the total number of frames to have the form 4n+1 (for example, 81 = 4×20+1).

Although some users have experimented with longer sequences (such as 100 frames), the standard and most stable configuration is 81 frames, which balances quality with temporal consistency.
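The frame-count rule and the resulting clip length are simple arithmetic, sketched below. The helper names are made up for illustration, and 16 FPS is the typical rate mentioned above rather than a fixed property of every setup.

```python
def is_valid_frame_count(frames):
    """True if frames has the form 4n+1 for some integer n >= 0."""
    return frames >= 1 and (frames - 1) % 4 == 0


def floor_to_valid_frame_count(frames):
    """Round down to the nearest count of the form 4n+1 (minimum 1)."""
    return max(1, frames - (frames - 1) % 4)


def clip_seconds(frames, fps=16):
    """Clip duration in seconds at the given frame rate."""
    return frames / fps


print(is_valid_frame_count(81))         # True: 81 = 4*20 + 1
print(floor_to_valid_frame_count(100))  # 97 = 4*24 + 1
print(clip_seconds(81))                 # 5.0625 seconds at 16 FPS
```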

What kinds of projects are Wan 2.1 video best suited for?

Wan 2.1 video is versatile across a range of creative projects. It handles text-to-video and image-to-video generation and also supports video editing. Whether you're creating social media clips, educational content, or promotional videos, Wan 2.1 offers a practical solution. Its ability to generate dynamic visuals and readable text makes it especially useful for content creators and marketers who want to produce high-quality AI video content without a complex setup.

How to use Wan 2.1 in ComfyUI?

You can easily use Wan 2.1 in ComfyUI for both text-to-video and image-to-video projects.

RunComfy offers a pre-configured environment with all necessary models already downloaded. This means you can start generating high-quality Wan 2.1 AI video content immediately—no additional setup required.