Hunyuan3D-1 | ComfyUI 3D Pack

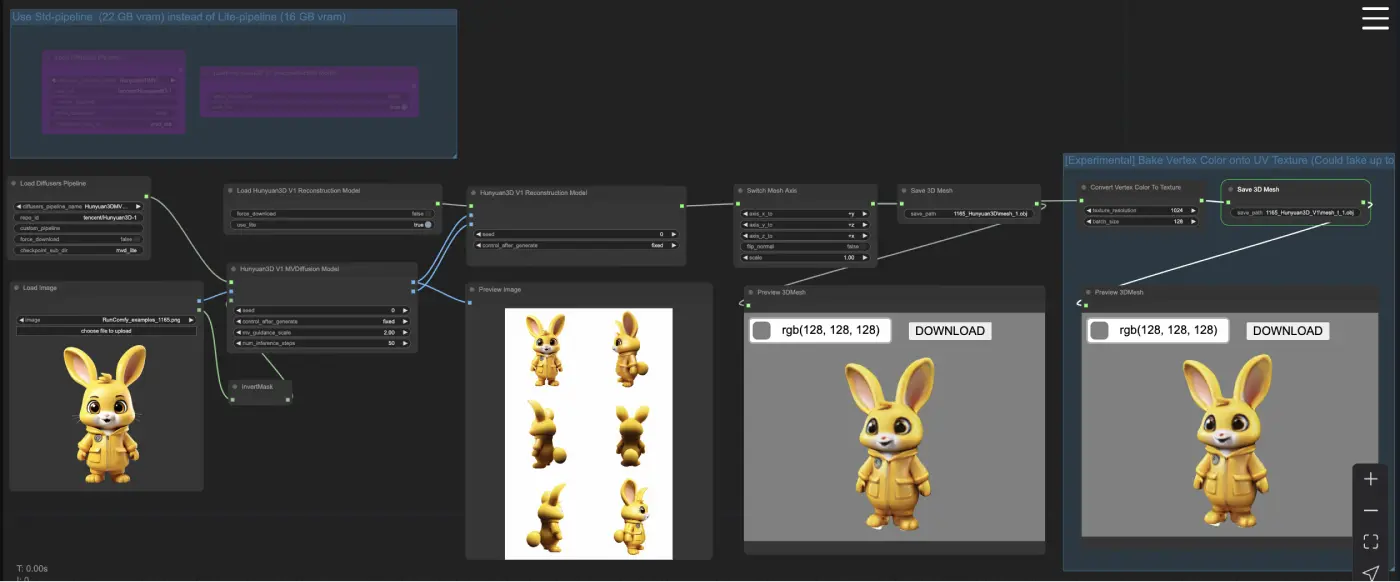

The ComfyUI 3D Pack offers a powerful suite of tools for working with 3D models and integrates seamlessly with the Hunyuan3D framework. A standout feature of Hunyuan3D is its two-stage pipeline: first, a multi-view diffusion model generates detailed RGB images from several viewpoints; then, a reconstruction model quickly turns these multi-view images into a complete 3D asset. Combined with the pack's other tools, such as its NeRF and 3DGS nodes, this enables fast, photorealistic 3D content generation through an intuitive ComfyUI interface.


Update: The latest model has been released.

Below is an introduction to the Hunyuan3D-1.0 model.

This guide provides a comprehensive introduction to creating 3D content with the Hunyuan3D-1.0 model, using the ComfyUI 3D Pack nodes to streamline workflows and enhance output quality.

Part 1: ComfyUI 3D Pack

1.1. Introduction to ComfyUI 3D Pack

ComfyUI 3D Pack is an extensive node suite that enables ComfyUI to process 3D inputs such as meshes and UV textures using cutting-edge algorithms and models. It integrates advanced 3D processing techniques like 3DGS (3D Gaussian Splatting) and NeRF (Neural Radiance Fields), along with state-of-the-art models including Hunyuan3D, StableFast3D, InstantMesh, CRM, TripoSR and others.

With the ComfyUI 3D Pack, users can import, manipulate and generate high-quality 3D content within the intuitive ComfyUI interface. It supports a wide range of 3D file formats, such as OBJ, PLY and GLB, enabling easy integration of existing 3D models. The pack also includes powerful mesh processing utilities to edit, clean and optimize 3D geometry.

One of the key highlights is the integration of NeRF, which enables photorealistic 3D reconstruction from 2D images. The 3DGS nodes work with 3D Gaussian Splatting, a point-based representation suited to fast rendering. InstantMesh and TripoSR generate 3D meshes from a single input image. CRM (Convolutional Reconstruction Model) recovers a textured 3D mesh from multi-view images and CCMs (Canonical Coordinate Maps).

ComfyUI 3D Pack was developed by MrForExample, and all credit goes to them. For detailed information, please refer to the original ComfyUI 3D Pack project.

1.2. ComfyUI 3D Pack: Ready to Run on RunComfy

ComfyUI 3D Pack is now fully set up and ready to use on the RunComfy website. Users don't need to install any additional software or dependencies: all required models, algorithms and tools are preconfigured and optimized to run efficiently in the web-based ComfyUI environment.

Part 2: Using the Hunyuan3D Model with ComfyUI 3D Pack Nodes

2.1. What is Hunyuan3D?

Hunyuan3D is a 3D generation framework developed by Tencent that combines multi-view diffusion models with sparse-view reconstruction models to create high-quality 3D assets from single images or text descriptions. The Hunyuan3D 1.0 framework comes in two versions, lite and standard, both supporting text- and image-conditioned generation. For detailed information, please refer to the official Hunyuan3D-1 release.

2.2. Techniques Behind Hunyuan3D

Hunyuan3D introduces several technical innovations to enhance the speed and quality of 3D generation:

a. Two-stage pipeline

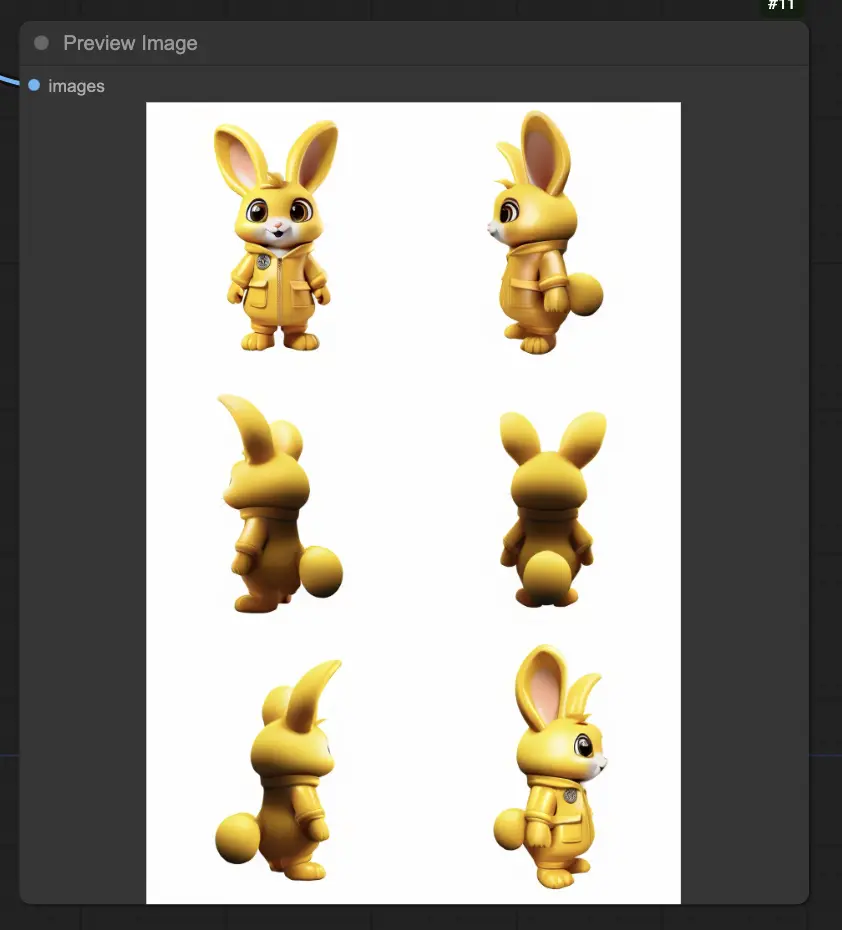

In the first stage, a multi-view diffusion model efficiently generates multi-view RGB images. These images capture rich details of the 3D asset from various viewpoints.

The second stage employs a feed-forward reconstruction model that rapidly reconstructs the 3D asset from the generated multi-view images.
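To make the data flow concrete, here is a minimal Python sketch of the two-stage structure. The models are hypothetical callables standing in for the real networks, and the names `two_stage_generate` and `Mesh` are illustrative, not Hunyuan3D's API. The reconstructor also receives the original condition image, reflecting the hybrid-input design described in this section.

```python
from dataclasses import dataclass
from typing import Callable, List

import numpy as np

Image = np.ndarray  # H x W x 3 RGB array


@dataclass
class Mesh:
    vertices: np.ndarray  # (V, 3) float positions
    faces: np.ndarray     # (F, 3) integer vertex indices


def two_stage_generate(
    cond_image: Image,
    mv_diffusion: Callable[[Image], List[Image]],
    reconstructor: Callable[[List[Image], Image], Mesh],
) -> Mesh:
    # Stage 1: the multi-view diffusion model synthesizes RGB views of the object.
    views = mv_diffusion(cond_image)
    # Stage 2: one feed-forward pass turns those views (plus the original
    # condition image) into a mesh; there is no per-asset optimization loop.
    return reconstructor(views, cond_image)


# Dummy stand-ins so the sketch runs without any real model (hypothetical).
def fake_mv_diffusion(img: Image) -> List[Image]:
    return [img.copy() for _ in range(6)]


def fake_reconstructor(views: List[Image], cond: Image) -> Mesh:
    faces = np.array([[0, 1, 2], [0, 1, 3], [0, 2, 3], [1, 2, 3]])
    return Mesh(np.zeros((4, 3)), faces)  # placeholder tetrahedron topology


mesh = two_stage_generate(np.zeros((64, 64, 3)), fake_mv_diffusion, fake_reconstructor)
print(mesh.vertices.shape, mesh.faces.shape)  # (4, 3) (4, 3)
```

Keeping the two stages behind separate callables mirrors why the pipeline is fast: the expensive diffusion sampling happens once, and reconstruction is a single forward pass rather than an optimization loop.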

b. 0-elevation pose distribution

Hunyuan3D's multi-view generation uses a 0-elevation camera orbit, maximizing the visible area between generated views and improving reconstruction quality.
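A 0-elevation orbit places every camera on a horizontal circle around the object, spaced only in azimuth. The NumPy sketch below computes such camera centers. The view count of 6, the radius and the Y-up convention are assumptions for illustration, not values taken from Hunyuan3D.

```python
import numpy as np


def zero_elevation_orbit(num_views: int = 6, radius: float = 2.0) -> np.ndarray:
    """Camera centers evenly spaced in azimuth on a horizontal circle.

    Assumes a Y-up world with the object at the origin; every camera sits at
    height y = 0 (elevation 0) and looks toward the origin.
    """
    azimuths = np.linspace(0.0, 2.0 * np.pi, num_views, endpoint=False)
    x = radius * np.sin(azimuths)
    z = radius * np.cos(azimuths)
    y = np.zeros_like(azimuths)  # zero elevation: no view from above or below
    return np.stack([x, y, z], axis=1)


cams = zero_elevation_orbit()  # 6 cameras, 60 degrees apart in azimuth
print(cams.shape)  # (6, 3)
```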

c. Adaptive classifier-free guidance

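Instead of using one fixed guidance scale, this technique varies the classifier-free guidance scale across generated views and denoising steps, balancing controllability and diversity in multi-view diffusion to keep results consistent and high quality.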
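Classifier-free guidance mixes an unconditional and a conditional noise prediction using a guidance scale; one natural reading of "adaptive" is choosing that scale per view and per denoising step. The sketch below shows the standard CFG combination plus one possible schedule. The schedule's shape and its constants (`s_max`, `s_min`, and treating view 0 as the view nearest the input image) are assumptions for illustration, not Hunyuan3D's published values.

```python
import numpy as np


def cfg_combine(eps_uncond: np.ndarray, eps_cond: np.ndarray, scale: float) -> np.ndarray:
    """Standard classifier-free guidance: extrapolate from the unconditional
    prediction toward the conditional one by `scale`."""
    return eps_uncond + scale * (eps_cond - eps_uncond)


def adaptive_cfg_scale(view_idx: int, step: int, num_steps: int,
                       s_max: float = 3.0, s_min: float = 1.5,
                       near_views: tuple = (0,)) -> float:
    """Hypothetical schedule: strong guidance early in sampling and for views
    close to the input image; weaker guidance later and for distant views."""
    progress = step / max(num_steps - 1, 1)     # 0.0 at the first step, 1.0 at the last
    scale = s_max - (s_max - s_min) * progress  # linear decay over denoising steps
    if view_idx not in near_views:
        scale = s_min + 0.5 * (scale - s_min)   # halve the extra guidance for distant views
    return scale


# Guidance for the front view (0) vs. a side view (3) at the first step
for v in (0, 3):
    print(v, adaptive_cfg_scale(v, step=0, num_steps=50))  # prints "0 3.0", then "3 2.25"
```

Higher scales follow the condition more strictly (controllability), while lower scales leave the sampler more freedom (diversity); a schedule is one way to trade the two off where each matters most.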

d. Hybrid inputs

The sparse-view reconstruction model incorporates the uncalibrated condition image as an auxiliary view to compensate for unseen parts in the generated images, enhancing reconstruction accuracy.
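The key idea of hybrid inputs is that the generated views come with known camera poses, while the original condition image does not. The sketch below models that distinction with a small container; `ViewInput` and `build_hybrid_inputs` are hypothetical names, and the model's real input tensors are not described in this guide.

```python
from dataclasses import dataclass
from typing import List, Optional

import numpy as np


@dataclass
class ViewInput:
    image: np.ndarray             # H x W x 3 RGB
    azimuth_deg: Optional[float]  # known camera azimuth; None = uncalibrated


def build_hybrid_inputs(generated: List[np.ndarray], azimuths: List[float],
                        cond_image: np.ndarray) -> List[ViewInput]:
    """Pair each generated view with its known pose, then append the original
    condition image as an extra view whose pose is left unspecified."""
    if len(generated) != len(azimuths):
        raise ValueError("every generated view needs a known azimuth")
    views = [ViewInput(img, az) for img, az in zip(generated, azimuths)]
    views.append(ViewInput(cond_image, None))  # auxiliary, uncalibrated view
    return views


generated = [np.zeros((64, 64, 3)) for _ in range(6)]
inputs = build_hybrid_inputs(generated, [60.0 * i for i in range(6)], np.ones((64, 64, 3)))
print(len(inputs), inputs[-1].azimuth_deg)  # 7 None
```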

2.3. Advantages and Potential Limitations of Hunyuan3D

Advantages:

- Fast 3D generation: Hunyuan3D can create high-quality 3D assets in as little as 10 seconds, significantly reducing generation time compared to optimization-based methods.

- Improved generalization: By disentangling single-view generation tasks into multi-view image generation and sparse-view reconstruction, Hunyuan3D achieves better generalization to unseen objects.

- Unified framework: Hunyuan3D supports both text- and image-conditioned 3D generation, making it a versatile tool for various applications.

Potential Limitations:

- Memory requirements: The standard version of Hunyuan3D has 3x more parameters than the lite version, so it needs more GPU memory to run.

- Thin structure generation: Like other feed-forward methods, Hunyuan3D might struggle with generating thin, paper-like structures.
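A rough way to see what "3x more parameters" means for memory is to count the bytes needed just to store the weights. The sketch below does that arithmetic; the lite parameter count is a hypothetical placeholder (this guide does not state it), and fp16 storage at 2 bytes per parameter is an assumption.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """GPU memory needed just to hold the weights (2 bytes/param for fp16).
    Activations, the reconstruction model and framework overhead come on top."""
    return num_params * bytes_per_param / 1024**3


lite_params = 1.0e9                # hypothetical parameter count, for illustration only
standard_params = 3 * lite_params  # "3x more parameters", per the text above
print(f"lite: {weight_memory_gib(lite_params):.2f} GiB, "
      f"standard: {weight_memory_gib(standard_params):.2f} GiB")
# lite: 1.86 GiB, standard: 5.59 GiB
```

Weight memory scales linearly with parameter count, so the standard version's weights alone need about three times the memory of the lite version's.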

2.4. How to Use the Hunyuan3D-1.0 Workflow in ComfyUI

Here's a step-by-step guide to using the Hunyuan3D workflow to generate high-quality 3D meshes from a single image:

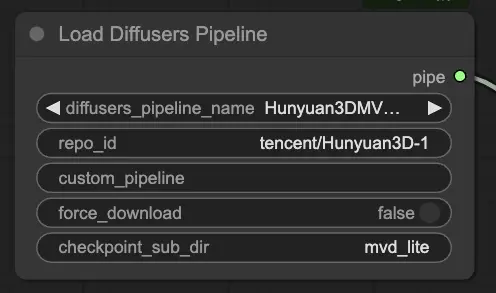

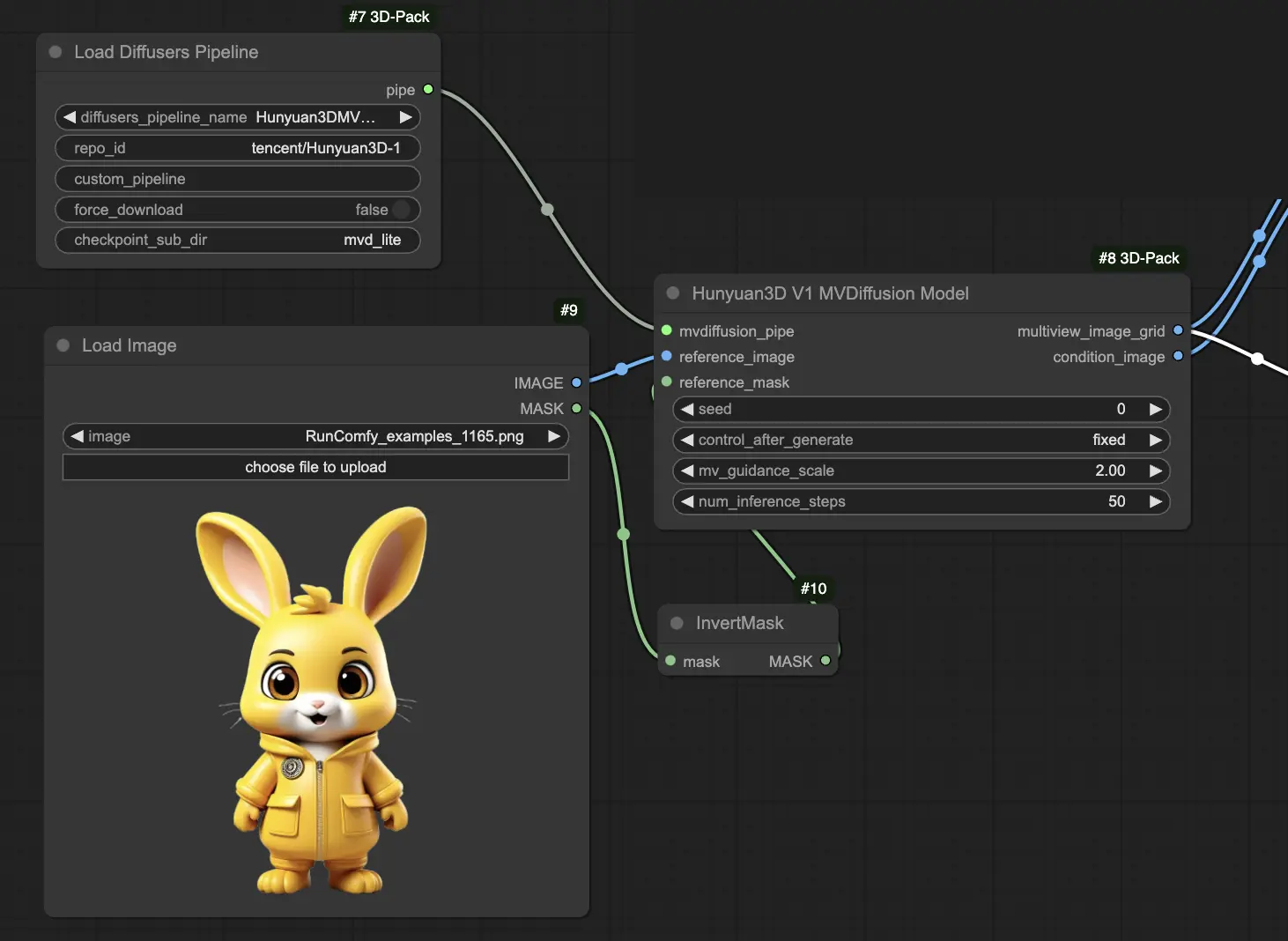

Load the Hunyuan3D multi-view diffusion model using the "[Comfy3D] Load Diffusers Pipeline" node. Choose between the lite and standard versions based on your GPU memory.

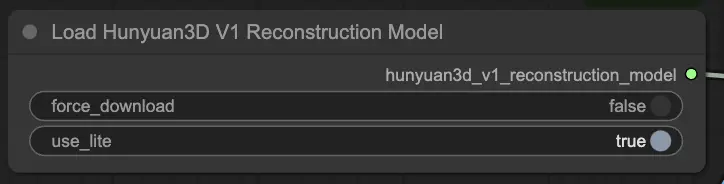

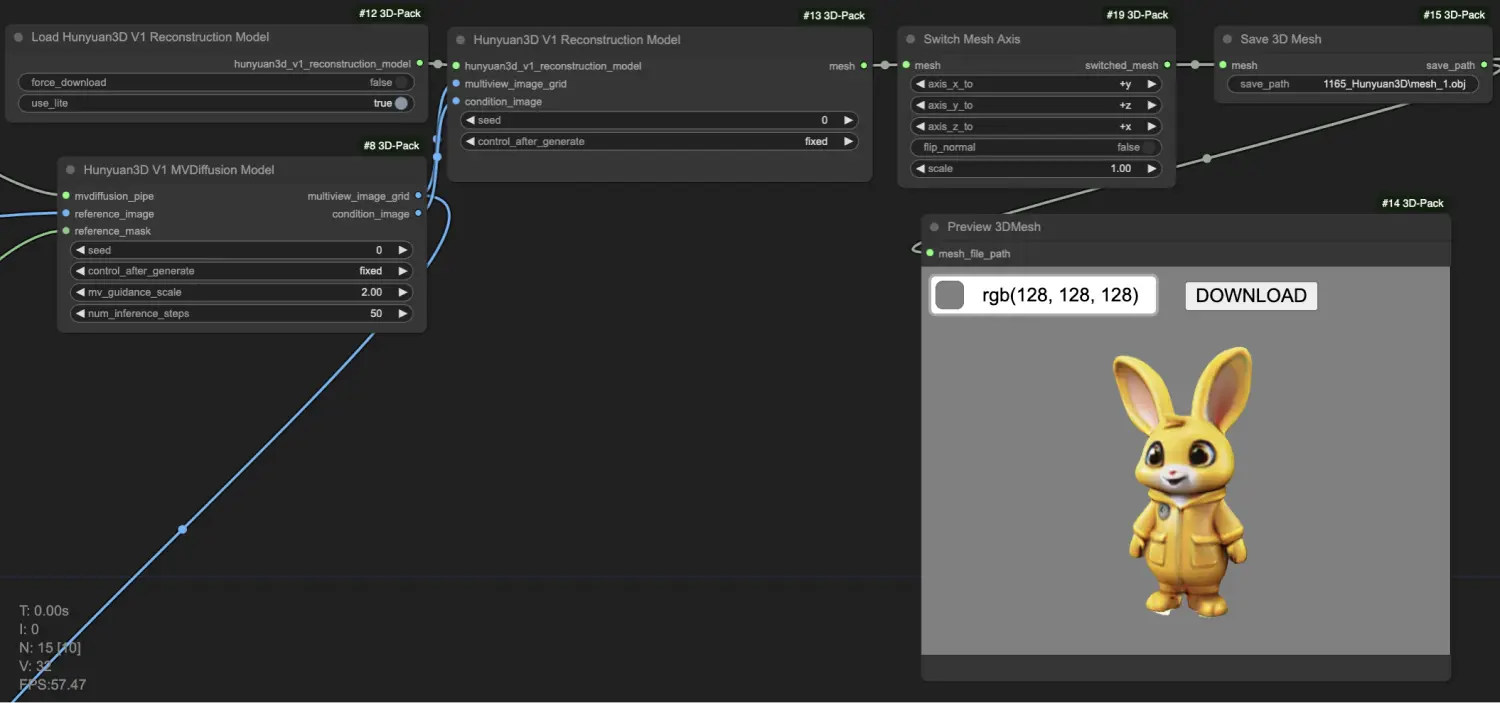

Load the Hunyuan3D reconstruction model using the "[Comfy3D] Load Hunyuan3D V1 Reconstruction Model" node.

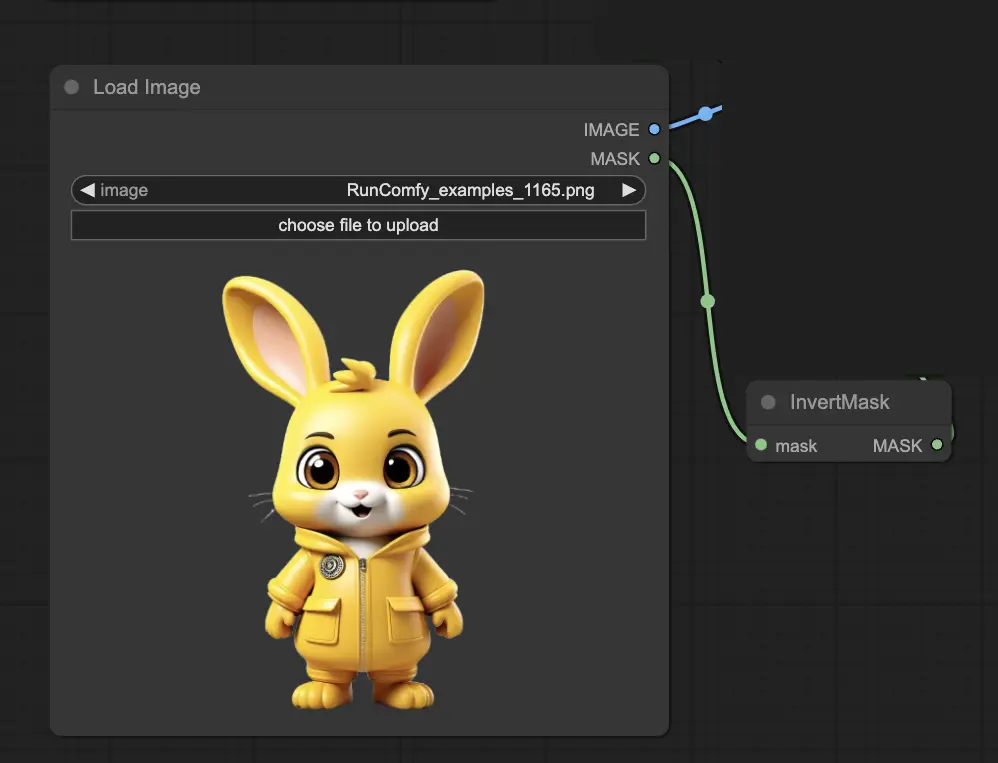

Prepare the input image and mask using the "LoadImage" and "InvertMask" nodes.
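As far as we understand ComfyUI's core nodes, LoadImage derives its MASK output from the image's alpha channel as 1 - alpha, so the opaque object comes out as 0 and the transparent background as 1; InvertMask computes 1 - mask, giving a mask where the object is 1. The NumPy sketch below mirrors that arithmetic on plain arrays (it is not the nodes' source code). The white-background compositing step is an illustrative assumption about how a foreground mask is typically used.

```python
import numpy as np


def load_image_mask(alpha: np.ndarray) -> np.ndarray:
    """Mask as LoadImage is understood to build it: 1 - alpha (alpha in [0, 1])."""
    return 1.0 - alpha


def invert_mask(mask: np.ndarray) -> np.ndarray:
    """Equivalent of the InvertMask node: 1 - mask."""
    return 1.0 - mask


def composite_on_background(rgb: np.ndarray, fg_mask: np.ndarray,
                            background: float = 1.0) -> np.ndarray:
    """Keep foreground pixels and replace the rest with a flat color (white by default)."""
    m = fg_mask[..., None]  # broadcast the H x W mask over the RGB channels
    return rgb * m + background * (1.0 - m)


alpha = np.array([[1.0, 0.0]])  # left pixel opaque (object), right pixel transparent
fg = invert_mask(load_image_mask(alpha))
print(fg)  # [[1. 0.]]
```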

Feed the loaded diffusion pipeline, input image, and mask into the "[Comfy3D] Hunyuan3D V1 MVDiffusion Model" node to generate multi-view images and a condition image.

Preview the generated multi-view images using the "PreviewImage" node.

Input the loaded reconstruction model, generated multi-view images, and condition image into the "[Comfy3D] Hunyuan3D V1 Reconstruction Model" node to create the 3D mesh. If needed, you can also adjust the mesh's axes and scale with the "[Comfy3D] Switch Mesh Axis" node.
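Different 3D tools disagree on which axis points up (for example, Y-up versus Z-up), which is what an axis switch corrects. The sketch below shows the underlying vertex operation with NumPy. The function name and its `axis_order`, `signs` and `scale` parameters are hypothetical and are not the actual inputs of the "[Comfy3D] Switch Mesh Axis" node.

```python
import numpy as np


def switch_mesh_axis(vertices: np.ndarray, axis_order=(0, 2, 1),
                     signs=(1.0, -1.0, 1.0), scale: float = 1.0) -> np.ndarray:
    """Reorder and flip coordinate axes, then apply a uniform scale.

    The defaults map (x, y, z) -> (x, -z, y): a +90 degree rotation about X
    that turns a Y-up mesh into a Z-up one.
    """
    permuted = vertices[:, list(axis_order)]  # pick columns in the new order
    return permuted * np.asarray(signs) * scale


up = np.array([[0.0, 1.0, 0.0]])
print(switch_mesh_axis(up))  # the old +Y direction now points along +Z
print(switch_mesh_axis(np.array([[1.0, 2.0, 3.0]]), scale=2.0))
```

Swapping two axes or flipping one axis each mirrors the mesh on its own. The defaults do one of each, so the result is a proper rotation; an odd number of swaps and flips would also reverse triangle winding, and face indices would then need reordering to keep normals facing outward.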

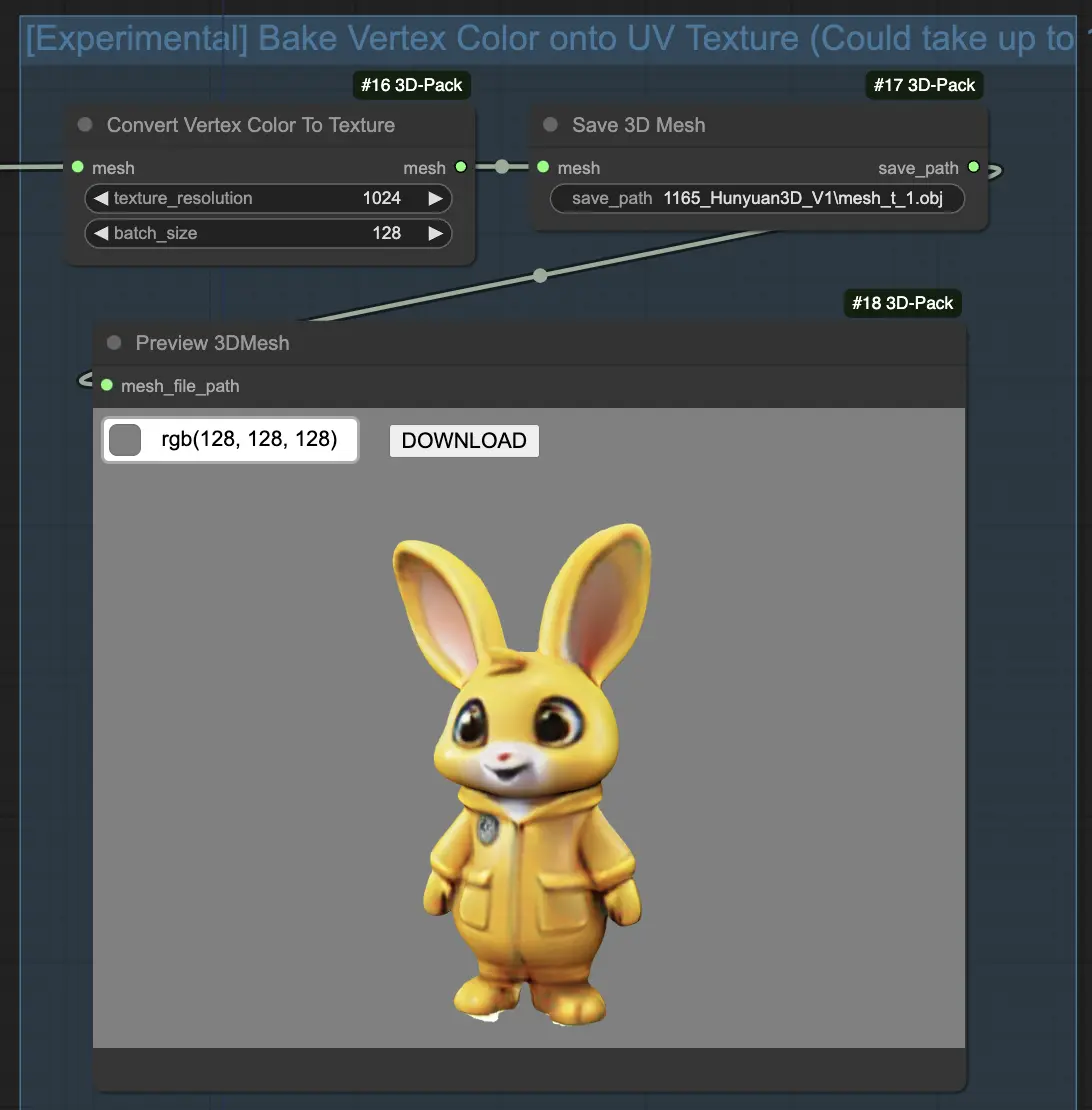

(Optional) Convert vertex colors to textures using the "[Comfy3D] Convert Vertex Color To Texture" node for improved texture quality.
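In general, baking vertex colors into a texture means rasterizing each triangle into UV space and filling its texels with colors interpolated from the triangle's three vertices. The sketch below does this for a single triangle using barycentric coordinates. It is a simplified illustration, not the implementation behind the "[Comfy3D] Convert Vertex Color To Texture" node, and the names `barycentric` and `bake_triangle` are hypothetical.

```python
import numpy as np


def barycentric(p: np.ndarray, a: np.ndarray, b: np.ndarray, c: np.ndarray) -> np.ndarray:
    """Barycentric weights (wa, wb, wc) of 2D point p with respect to triangle abc."""
    v0, v1, v2 = b - a, c - a, p - a
    d00, d01, d11 = v0 @ v0, v0 @ v1, v1 @ v1
    d20, d21 = v2 @ v0, v2 @ v1
    denom = d00 * d11 - d01 * d01
    wb = (d11 * d20 - d01 * d21) / denom
    wc = (d00 * d21 - d01 * d20) / denom
    return np.array([1.0 - wb - wc, wb, wc])


def bake_triangle(texture: np.ndarray, uv_tri: np.ndarray, colors: np.ndarray) -> np.ndarray:
    """Fill texels whose centers lie inside one UV triangle with interpolated vertex colors.

    texture: (H, W, 3) float image, modified in place
    uv_tri:  (3, 2) UV coordinates in [0, 1] (V axis not flipped, for simplicity)
    colors:  (3, 3) RGB color of each triangle vertex
    """
    h, w, _ = texture.shape
    pix = uv_tri * np.array([w, h])  # UV -> pixel coordinates
    x0, y0 = np.floor(pix.min(axis=0)).astype(int)
    x1, y1 = np.ceil(pix.max(axis=0)).astype(int)
    for y in range(max(y0, 0), min(y1, h)):
        for x in range(max(x0, 0), min(x1, w)):
            weights = barycentric(np.array([x + 0.5, y + 0.5]), *pix)
            if np.all(weights >= -1e-9):          # texel center inside (or on) the triangle
                texture[y, x] = weights @ colors  # blend the three vertex colors
    return texture


tex = np.zeros((4, 4, 3))
uv = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
rgb = np.eye(3)  # vertex colors: red, green, blue
bake_triangle(tex, uv, rgb)
print(tex[0, 0].tolist())  # [0.75, 0.125, 0.125]
```

Production bakers typically also handle many triangles at once and dilate colors past UV seams so that texture filtering does not reveal cracks along island borders.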

Now you can unlock the full potential of Hunyuan3D to create stunning 3D assets from a single image. The Hunyuan3D model makes advanced 3D generation more accessible than ever!