This guide introduces 3D content creation with the "Wonder3D" model, using "ComfyUI 3D Pack" nodes to streamline the workflow from a single input image to a textured mesh.

Part 1: ComfyUI 3D Pack

1.1. Introduction to ComfyUI 3D Pack

ComfyUI 3D Pack is an extensive node suite that enables ComfyUI to process 3D inputs such as meshes and UV textures using cutting-edge algorithms and models. It integrates 3D processing algorithms like 3DGS (3D Gaussian Splatting) and NeRF (Neural Radiance Fields), along with state-of-the-art models including Hunyuan3D, StableFast3D, InstantMesh, CRM, TripoSR, and others.

With the ComfyUI 3D Pack, users can import, manipulate, and generate high-quality 3D content within the ComfyUI interface. It supports common 3D file formats such as OBJ, PLY, and GLB, which makes it easy to bring in existing 3D models. The pack also includes mesh processing utilities to edit, clean, and optimize 3D geometry.
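To make the file-format point concrete, here is a minimal sketch of what an OBJ file stores: `v` records for vertex positions and `f` records for faces with 1-based vertex indices. The helper names `write_obj` and `read_obj` are hypothetical and are not part of ComfyUI 3D Pack; the sketch ignores normals, UVs, and materials.

```python
def write_obj(vertices, faces):
    """Serialize a mesh to Wavefront OBJ text (faces are 0-indexed in Python)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    # OBJ face indices are 1-based, so shift each index by one.
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


def read_obj(text):
    """Parse only 'v' and 'f' records; everything else is skipped."""
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(p) for p in parts[1:4]))
        elif parts[0] == "f":
            # A face token may look like "3/1/2"; the vertex index comes first.
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return vertices, faces


# A single triangle round-trips through the text format.
triangle_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
triangle_faces = [(0, 1, 2)]
obj_text = write_obj(triangle_vertices, triangle_faces)
parsed_vertices, parsed_faces = read_obj(obj_text)
```

PLY and GLB carry similar vertex and face data in different containers, so a real workflow would rely on the pack's own load and save nodes rather than hand-written parsers like this one.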

One of the key highlights is the integration of NeRF technology, which allows photorealistic 3D reconstruction from 2D images. The 3DGS nodes enable rendering and stylization of Gaussian splats, a point-cloud-like representation. InstantMesh and TripoSR reconstruct 3D meshes from a single input image. CRM (Convolutional Reconstruction Model) recovers 3D shape from multi-view images and CCMs (Canonical Coordinate Maps).

ComfyUI 3D Pack was developed by MrForExample, who deserves all credit for it. For detailed information, see the ComfyUI 3D Pack repository.

1.2. ComfyUI 3D Pack: Ready to Run on RunComfy

ComfyUI 3D Pack is now fully set up and ready to use on the RunComfy website. Users don't need to install any additional software or dependencies. All the required models, algorithms, and tools are pre-configured and optimized to run efficiently in the web-based ComfyUI environment.

Part 2: Using the Wonder3D Model with ComfyUI 3D Pack Nodes

2.1. What is Wonder3D?

Wonder3D is a cutting-edge method for efficiently generating high-quality textured meshes from single-view images. It leverages the power of cross-domain diffusion models to generate multi-view normal maps and their corresponding color images. Wonder3D aims to address the challenges of fidelity, consistency, generalizability, and efficiency in single-view 3D reconstruction tasks.

Wonder3D was developed by a team of researchers from The University of Hong Kong, Tsinghua University, VAST, University of Pennsylvania, ShanghaiTech University, MPI Informatik, and Texas A&M University, with Xiaoxiao Long and Yuan-Chen Guo as equal-contribution first authors. All credit goes to them; for more information, see their project page.

2.2. Techniques behind Wonder3D

The core of Wonder3D lies in its innovative cross-domain diffusion model. This model is designed to capture the joint distribution of normal maps and color images across multiple views. To achieve this, Wonder3D introduces a domain switcher and a cross-domain attention scheme. The domain switcher allows seamless generation of either normal maps or color images, while the cross-domain attention mechanism facilitates information exchange between the two domains, enhancing consistency and quality.
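The two ideas above can be illustrated with a toy sketch in plain Python. It rests on simplifying assumptions: the domain switcher is modeled as a one-hot label appended to each token, and cross-domain attention is modeled as ordinary scaled dot-product attention in which each domain's queries see keys and values from both domains. All function names are hypothetical, and the real model operates inside a diffusion UNet rather than on tiny lists.

```python
import math


def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, keys, values):
    """Scaled dot-product attention over lists of token vectors."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) * scale for k in keys]
        weights = softmax(scores)
        dim = len(values[0])
        outputs.append([sum(w * v[d] for w, v in zip(weights, values))
                        for d in range(dim)])
    return outputs


def apply_domain_switcher(tokens, domain):
    """Assumption: tag each token with a one-hot label naming its domain."""
    label = {"normal": [1.0, 0.0], "color": [0.0, 1.0]}[domain]
    return [list(t) + label for t in tokens]


def cross_domain_attention(normal_tokens, color_tokens):
    """Each domain queries a shared pool of keys/values from both domains."""
    shared = normal_tokens + color_tokens
    return (attend(normal_tokens, shared, shared),
            attend(color_tokens, shared, shared))


# The same latent features are routed to either domain by the switcher.
latent = [[0.2, -0.1], [0.5, 0.3]]
normal_tokens = apply_domain_switcher(latent, "normal")
color_tokens = apply_domain_switcher(latent, "color")
normal_out, color_out = cross_domain_attention(normal_tokens, color_tokens)
```

Because the keys and values are pooled, every output token for the normal domain is a weighted mix that includes color tokens, and vice versa. That mixing is the information exchange the paragraph describes.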

Another key component of Wonder3D is its geometry-aware normal fusion algorithm. This algorithm robustly extracts high-quality surfaces from the generated multi-view 2D representations, even in the presence of inaccuracies. By leveraging the rich surface details encoded in the normal maps and color images, Wonder3D reconstructs clean and detailed geometries.
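One way to picture "robust in the presence of inaccuracies" is a per-pixel weight that discards generated normals which cannot be right for the viewing direction. The sketch below is an illustration under stated assumptions, not the authors' implementation: the weighting rule, the threshold `epsilon`, and all function names are hypothetical. View rays point from the camera into the scene, so a plausible visible normal should face back toward the camera (negative cosine with the ray).

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cosine(a, b):
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def geometry_aware_weight(view_ray, generated_normal, epsilon=-0.1):
    """Assumed rule: drop normals that face away from (or graze) the camera."""
    c = cosine(view_ray, generated_normal)
    if c > epsilon:
        return 0.0  # implausible for a visible surface, so ignore it
    return math.exp(abs(c))  # trust normals that face the camera more


def normal_loss(view_rays, generated_normals, surface_normals, epsilon=-0.1):
    """Weighted misalignment between the surface normals being optimized
    and the normals produced by the diffusion model."""
    total = 0.0
    for ray, generated, surface in zip(view_rays, generated_normals,
                                       surface_normals):
        weight = geometry_aware_weight(ray, generated, epsilon)
        total += weight * (1.0 - cosine(surface, generated))
    return total / len(view_rays)


# One camera-facing normal and one back-facing (erroneous) normal.
rays = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]
generated = [(0.0, 0.0, -1.0), (0.0, 0.0, 1.0)]
facing_weight = geometry_aware_weight(rays[0], generated[0])
backfacing_weight = geometry_aware_weight(rays[1], generated[1])
```

In an actual reconstruction, a loss of this shape would be minimized over a surface representation such as a signed distance field; here the surface normals are simply passed in.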

2.3. Advantages/Potential Limitations of Wonder3D

Wonder3D offers several advantages over existing single-view reconstruction methods. It achieves a high level of geometric detail while maintaining good efficiency, making it suitable for various applications. The cross-domain diffusion model allows Wonder3D to generalize well to different object categories and styles. The multi-view consistency enforced by the cross-domain attention mechanism results in coherent and plausible 3D reconstructions.

However, like any method, Wonder3D may have some limitations. The quality of the generated meshes depends on the training data and the ability of the diffusion model to capture the underlying 3D structure. Highly complex or ambiguous shapes might pose challenges. Additionally, the current implementation of Wonder3D focuses on single objects, and extending it to handle multiple objects or entire scenes could be an area for future research.

2.4. How to Use the Wonder3D Workflow

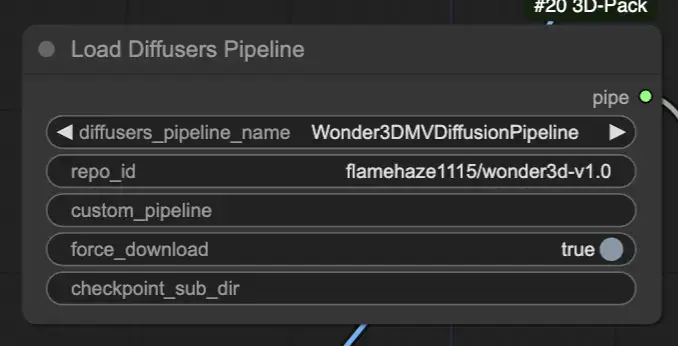

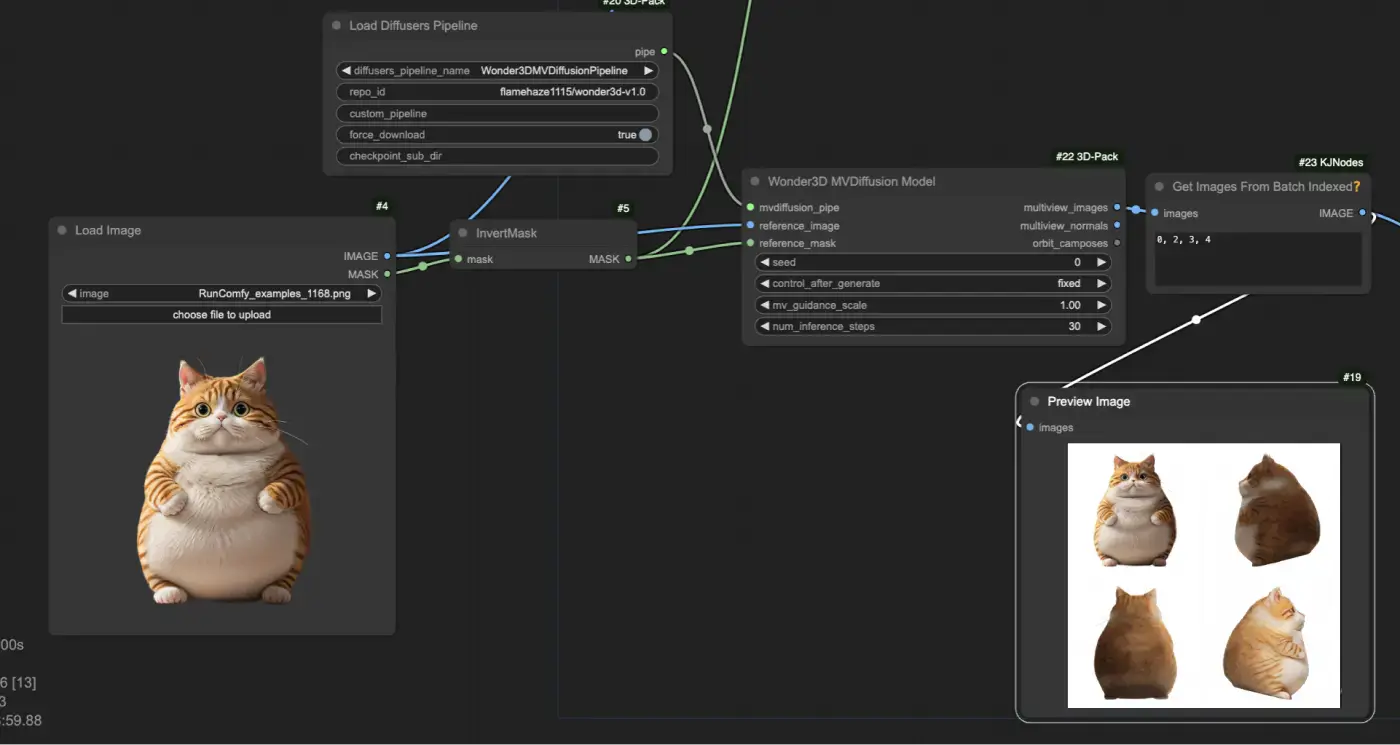

Load the pre-trained Wonder3D diffusion pipeline using the "[Comfy3D] Load Diffusers Pipeline" node, which imports the necessary model checkpoints and configurations.

Provide an input image with the "LoadImage" node and obtain its corresponding mask by passing the node's mask output through "InvertMask". Then feed the input image and mask into the "[Comfy3D] Wonder3D MVDiffusion Model" node, which generates multi-view normal maps and color images.
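The mask inversion in this step is simple arithmetic. ComfyUI masks hold values between 0 and 1, and "InvertMask" computes 1 minus each value. The mask that "LoadImage" derives from an image's alpha channel is 1 where the image is transparent, so inverting it yields 1 on the object itself. A minimal sketch using nested lists instead of tensors (the function name `invert_mask` is illustrative):

```python
def invert_mask(mask):
    """Flip a mask with values in [0, 1]: background becomes foreground."""
    return [[1.0 - value for value in row] for row in mask]


# Mask as LoadImage would produce it for a 2x3 image whose only
# opaque pixel is in the middle of the second row.
loadimage_mask = [
    [1.0, 1.0, 1.0],
    [1.0, 0.0, 1.0],
]
foreground_mask = invert_mask(loadimage_mask)
```

If the input image has no transparency, the mask will not isolate the object, so a background-removed image with an alpha channel is the safer input for this workflow.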

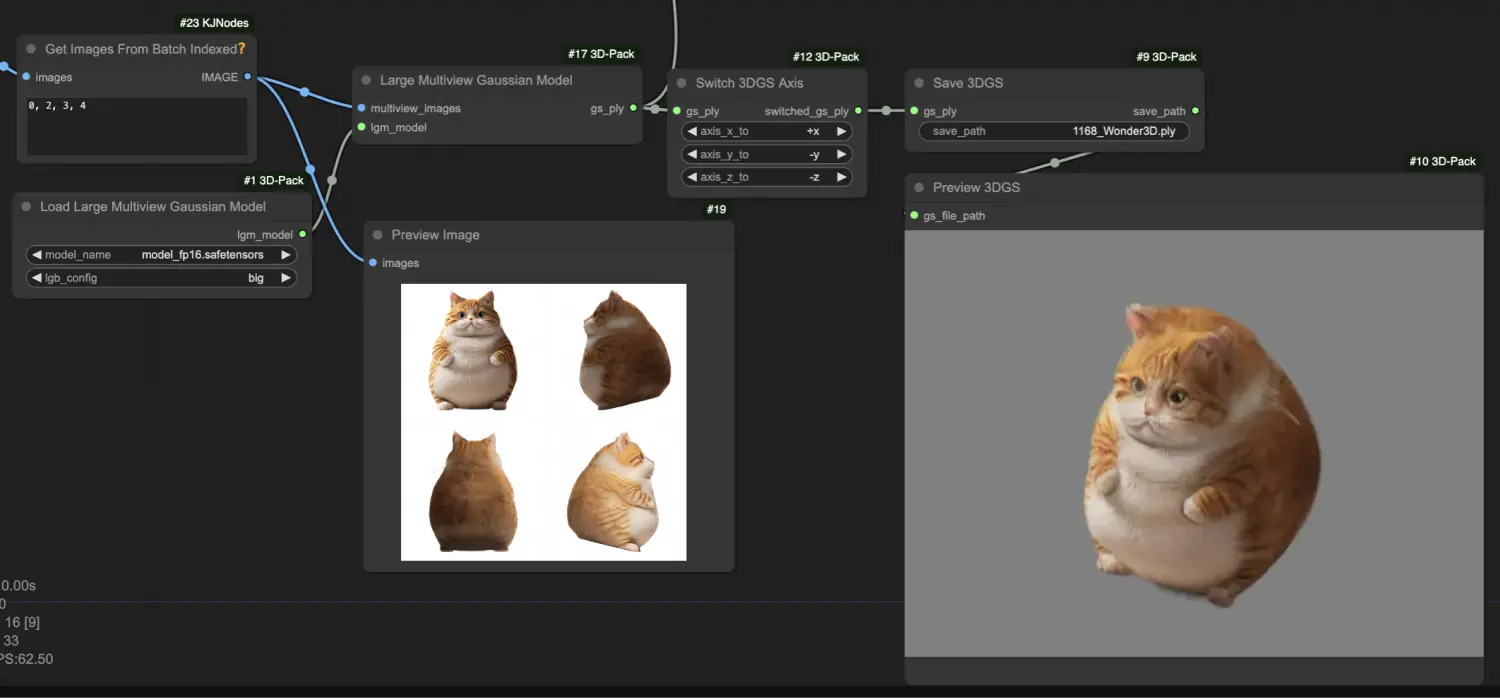

Process the generated multi-view images using the "[Comfy3D] Large Multiview Gaussian Model" node, which converts them into a 3D Gaussian Splatting (3DGS) representation. This captures the object's geometry and appearance as a set of 3D Gaussians, similar to a point cloud.
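To show what a 3DGS representation holds, here is a minimal sketch of a single Gaussian and its covariance, built as R S Sᵀ Rᵀ from a rotation R and a diagonal scale S as in the standard Gaussian Splatting formulation. The class and field names are assumptions for illustration and do not reflect how ComfyUI 3D Pack stores its data.

```python
from dataclasses import dataclass


@dataclass
class Gaussian3D:
    position: tuple   # center (x, y, z)
    scale: tuple      # extent along each local axis
    rotation: tuple   # unit quaternion (w, x, y, z)
    opacity: float    # 0 = invisible, 1 = fully opaque
    color: tuple      # RGB in [0, 1]


def quaternion_to_matrix(q):
    """Convert a unit quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(gaussian):
    """Sigma = R S S^T R^T with S = diag(scale)."""
    r = quaternion_to_matrix(gaussian.rotation)
    s2 = [s * s for s in gaussian.scale]
    return [[sum(r[i][k] * s2[k] * r[j][k] for k in range(3))
             for j in range(3)] for i in range(3)]


# An axis-aligned Gaussian: identity rotation gives a diagonal covariance.
splat = Gaussian3D(position=(0.0, 0.0, 0.0), scale=(0.1, 0.2, 0.3),
                   rotation=(1.0, 0.0, 0.0, 0.0), opacity=0.9,
                   color=(0.8, 0.4, 0.2))
sigma = covariance(splat)
```

A full splat typically contains many thousands of such Gaussians, which is why the representation resembles a point cloud with extra shape and appearance attributes per point.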

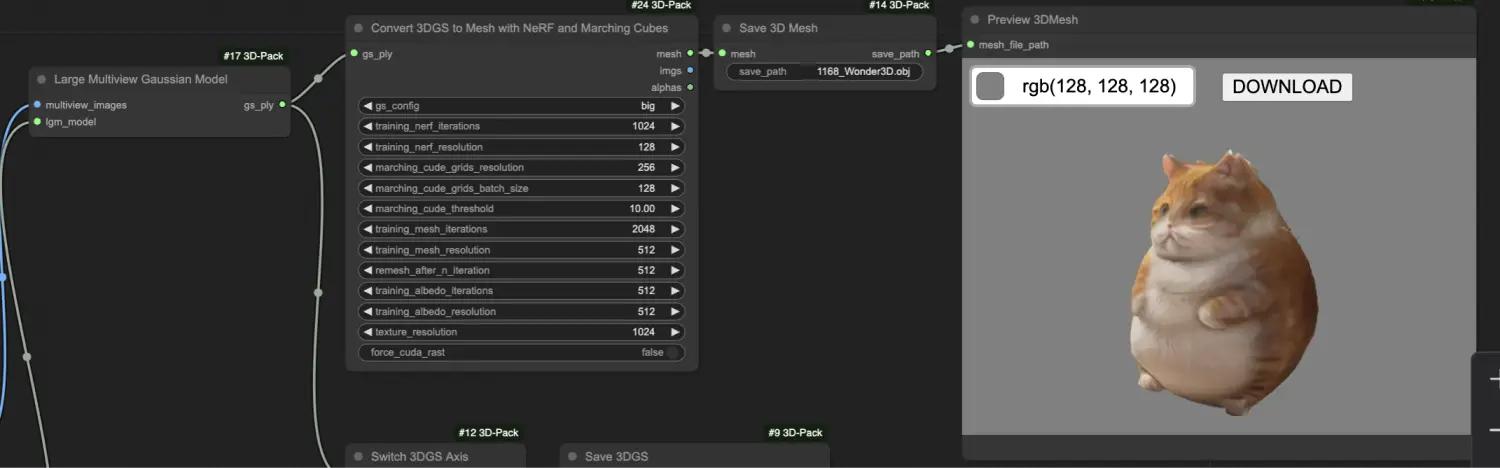

Transform the 3DGS representation into a textured mesh using the "[Comfy3D] Convert 3DGS to Mesh with NeRF and Marching Cubes" node, which uses a neural radiance field (NeRF) and the marching cubes algorithm to extract a high-quality mesh.
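The marching cubes step can be sketched at a high level. The algorithm samples a scalar field on a regular grid, finds the cells whose corners straddle a chosen iso-level, and then places triangles inside those cells. The sketch below covers only the first part (finding surface cells) and substitutes an analytic sphere for the NeRF density the node would use; all names and the grid resolution are assumptions made for illustration.

```python
def sphere_field(x, y, z, radius=0.6):
    """Stand-in for a learned density: positive inside, negative outside."""
    return radius - (x * x + y * y + z * z) ** 0.5


def sample_grid(resolution, field):
    """Sample the field on a regular grid covering the cube [-1, 1]^3."""
    step = 2.0 / (resolution - 1)
    coords = [-1.0 + i * step for i in range(resolution)]
    return [[[field(x, y, z) for z in coords] for y in coords]
            for x in coords]


def surface_cells(grid, iso_level=0.0):
    """Return cells whose 8 corners are not all on one side of the
    iso-level. Marching cubes emits triangles only in these cells."""
    n = len(grid)
    cells = []
    for i in range(n - 1):
        for j in range(n - 1):
            for k in range(n - 1):
                inside = [grid[i + a][j + b][k + c] > iso_level
                          for a in (0, 1) for b in (0, 1) for c in (0, 1)]
                if any(inside) and not all(inside):
                    cells.append((i, j, k))
    return cells


grid = sample_grid(9, sphere_field)
cells = surface_cells(grid)
```

A complete implementation would next look up a triangle configuration for each surface cell and interpolate vertex positions along the cell edges where the field crosses the iso-level. Higher grid resolutions give finer meshes at the cost of more memory and compute.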