PuLID | Accurate Face Embedding for Text to Image

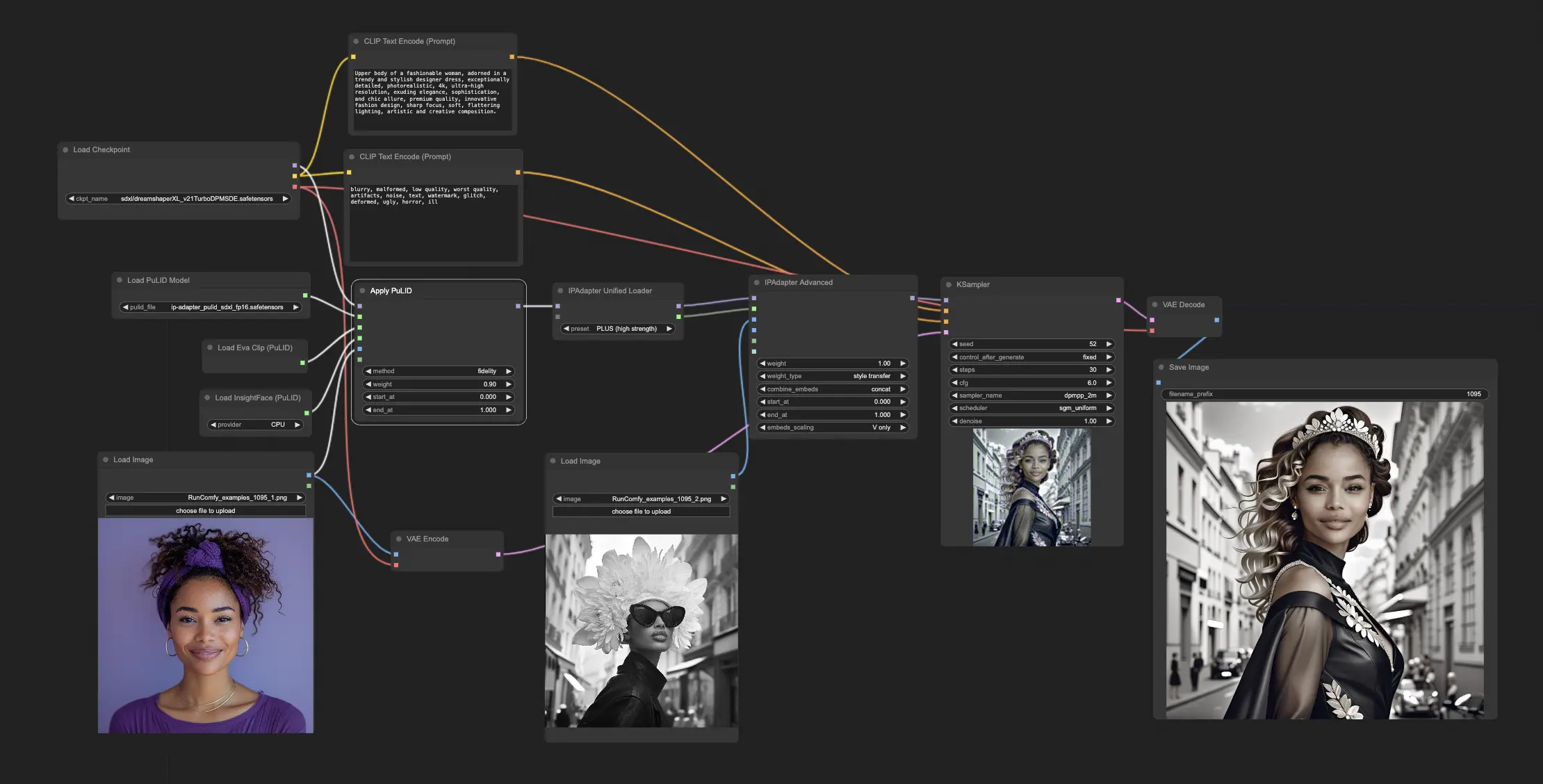

In this ComfyUI PuLID workflow, we use PuLID nodes to add a specific person's face to a pre-trained text-to-image (T2I) model without fine-tuning it. This lets you create high-quality, realistic face images that accurately capture the person's likeness. We also use IPAdapter Plus for style transfer, giving you control over both the facial features and the artistic style of the images. The combination ensures that the generated images not only resemble the individual but also match the desired visual aesthetic.

ComfyUI PuLID Description

What is PuLID?

PuLID (Pure and Lightning ID customization) is a novel method for tuning-free identity (ID) customization in text-to-image generation models. It aims to embed a specific ID (e.g., a person's face) into a pre-trained text-to-image model without disrupting the model's original capabilities. This allows generating images of that person while still modifying attributes, styles, backgrounds, etc. through text prompts.

PuLID incorporates two key components:

- A "Lightning T2I" branch, running alongside the standard diffusion branch, that generates high-quality ID-conditioned images in just a few denoising steps. Because its output is a clean image rather than a noisy intermediate, it allows an accurate ID loss to be computed, which improves the fidelity of the generated face.

- Contrastive alignment losses between the Lightning T2I paths with and without ID conditioning. These teach the model to embed the ID information without contaminating its original prompt-following and image-generation capabilities.

How PuLID Works

PuLID's architecture consists of a conventional diffusion training branch and the novel Lightning T2I branch:

- In the diffusion branch, PuLID follows the standard diffusion training process of iterative denoising. The ID condition is cropped from the target training image.

- The Lightning T2I branch leverages recent fast sampling methods to generate a high-quality image conditioned on the ID prompt in just 4 denoising steps, starting from pure noise.

- Within the Lightning T2I branch, two paths are constructed: one conditioned only on the text prompt, the other conditioned on both the ID and the text prompt. The UNet features of these paths are aligned using contrastive losses:

  - A semantic alignment loss ensures the model's response to the text prompt is similar with and without ID conditioning. This preserves the model's original prompt-following ability.

  - A layout alignment loss keeps the layout of the generated image consistent before and after ID insertion.

- Because the Lightning T2I branch produces a clean, denoised face, it allows an accurate ID loss to be computed between the embedding of the generated face and the embedding of the real ID. This improves the fidelity of the generated ID.
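
To make the three training signals concrete, here is a minimal pure-Python sketch of the ID loss and the two alignment losses. It is not the official training code: it assumes plain lists of floats stand in for UNet features, prompt features and face-recognition embeddings, that prompt and UNet features share the same dimension, and that the loss forms follow the PuLID paper's formulation as we understand it (ID loss as 1 minus cosine similarity, semantic alignment on the attention-weighted response Softmax(K Q^T / sqrt(d)) Q, layout alignment directly on the features). All function names are hypothetical.

```python
import math

# Hypothetical sketch of PuLID's three training signals, written with plain
# Python lists so it runs anywhere. Real training operates on UNet tensors.
Vector = list[float]
Matrix = list[Vector]  # one feature vector per token or spatial position


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def id_loss(real_id: Vector, generated_id: Vector) -> float:
    # 1 - cosine similarity between face-recognition embeddings of the
    # reference face and of the face in the clean Lightning T2I output.
    return 1.0 - cosine_similarity(real_id, generated_id)


def l2_distance(a: Matrix, b: Matrix) -> float:
    # Euclidean distance between two feature maps, flattened.
    return math.sqrt(sum((x - y) ** 2
                         for row_a, row_b in zip(a, b)
                         for x, y in zip(row_a, row_b)))


def prompt_response(text_feats: Matrix, unet_feats: Matrix) -> Matrix:
    # Softmax(K Q^T / sqrt(d)) Q: for each prompt token (row of K), an
    # attention-weighted average of the UNet features (rows of Q).
    d = len(unet_feats[0])
    response = []
    for k in text_feats:
        scores = [sum(ki * qi for ki, qi in zip(k, q)) / math.sqrt(d) for q in unet_feats]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        response.append([sum(e / total * q[j] for e, q in zip(exps, unet_feats))
                         for j in range(d)])
    return response


def semantic_alignment_loss(text_feats: Matrix, q_text_only: Matrix, q_with_id: Matrix) -> float:
    # The UNet's response to the prompt should not change when the ID is added.
    return l2_distance(prompt_response(text_feats, q_with_id),
                       prompt_response(text_feats, q_text_only))


def layout_alignment_loss(q_text_only: Matrix, q_with_id: Matrix) -> float:
    # The UNet features themselves (and hence the layout) should stay close.
    return l2_distance(q_with_id, q_text_only)
```

In training, these terms are combined with the standard diffusion loss; the relative weighting is a training hyperparameter and is not shown here.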

How to Use ComfyUI PuLID

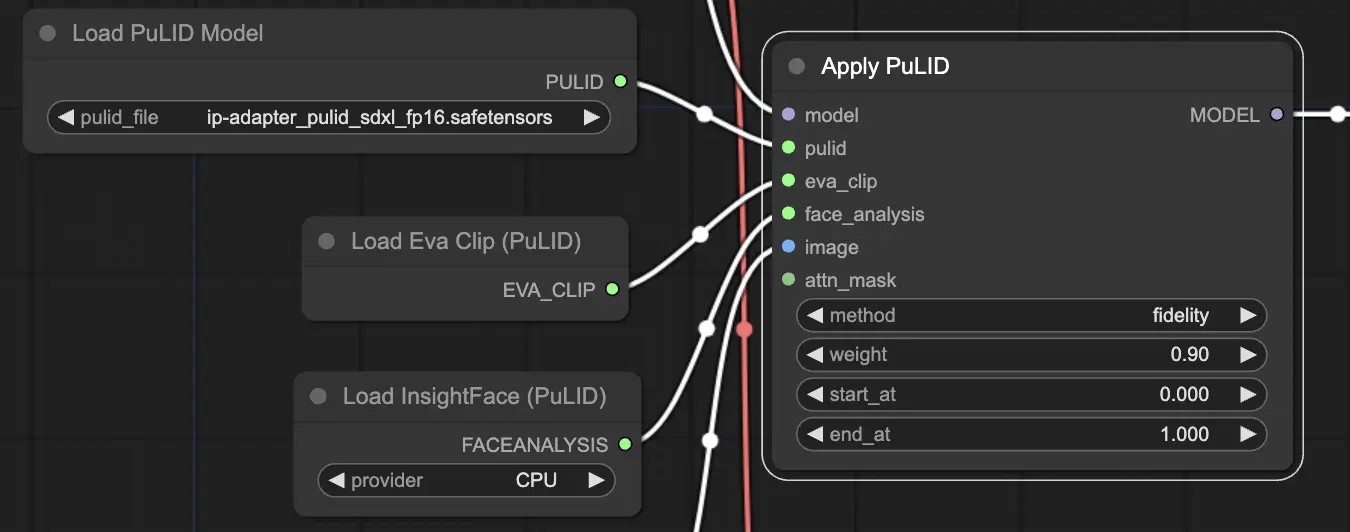

Using the ComfyUI PuLID workflow to apply ID customization to a model involves several key parameters in the "Apply PuLID" node:

"Apply PuLID" Required Inputs:

- model: The base text-to-image diffusion model to customize with the specific ID. This is typically a pre-trained model like Stable Diffusion.

- pulid: The loaded PuLID model weights, which define how the ID information is inserted into the base model. Different PuLID weight files can be trained to prioritize either ID fidelity or preserving the model's original generation style.

- eva_clip: The loaded Eva-CLIP model, which encodes facial features from the ID reference image(s) into an embedding that PuLID uses to condition generation.

- face_analysis: The loaded InsightFace model for recognizing and cropping the face in the ID reference image(s). This ensures only relevant facial features are encoded.

- image: The reference image or images depicting the specific ID to insert into the model. Multiple images of the same identity can be provided to improve the ID embedding.

- method: Selects the ID insertion method, with options "fidelity", "style" and "neutral". "fidelity" prioritizes maximum likeness to the ID reference even if generation quality degrades. "style" focuses on preserving the model's original generation capabilities with a lower-fidelity ID. "neutral" balances the two.

- weight: Controls the strength of the ID insertion, from 0 (no effect) to 5 (extremely strong). Default is 1. Higher weight improves ID fidelity but risks overriding the model's original generation.

- start_at: The point in the denoising process, as a fraction from 0 to 1, at which to start applying the PuLID ID customization. The default of 0 starts ID insertion at the first denoising step; increase it to begin ID insertion later in the denoising process.

- end_at: The point in the denoising process, as a fraction from 0 to 1, at which to stop applying the PuLID ID customization. The default of 1 applies ID insertion until the end of denoising; reduce it to stop ID insertion before the final denoising steps.
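
The start_at and end_at fractions can be easier to reason about as concrete sampling steps. The helper below is a hypothetical sketch, not the node's code: it assumes a step counts as active when its progress through sampling falls in the half-open range [start_at, end_at). The actual node may measure progress by noise level (sigma) rather than by step index, so boundary steps can differ.

```python
def pulid_active_steps(total_steps: int, start_at: float = 0.0, end_at: float = 1.0) -> list[int]:
    """Return the 0-based sampling steps during which the ID would be injected.

    Hypothetical helper: step i is active when i / total_steps lies in
    [start_at, end_at). Real implementations may compare sigmas instead.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0.0 <= start_at <= end_at <= 1.0:
        raise ValueError("expected 0 <= start_at <= end_at <= 1")
    return [i for i in range(total_steps) if start_at <= i / total_steps < end_at]


# Defaults (0 and 1) cover every step; a narrower window skips both ends.
all_steps = pulid_active_steps(20)
middle_only = pulid_active_steps(20, start_at=0.25, end_at=0.75)
```

With 20 steps, start_at=0.25 and end_at=0.75 would inject the ID only during steps 5 through 14, leaving the earliest steps (which typically set overall composition) and the last steps (which typically refine detail) to the base model.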

"Apply PuLID" Optional Inputs:

- attn_mask: An optional grayscale mask image to spatially control where the ID customization is applied. White areas of the mask receive the full ID insertion effect, black areas are unaffected, gray areas receive partial effect. Useful for localizing the ID to just the face region.
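
The mask behavior described above (white gets the full effect, black none, gray a partial effect) amounts to scaling the ID contribution per location. The sketch below assumes, for illustration only, that the ID branch adds a delta on top of the base model's output and that this delta is multiplied by weight and by the mask value (0.0 for black, 1.0 for white). `apply_masked_id` is a hypothetical name, and real implementations work on attention tensors rather than flat lists.

```python
def apply_masked_id(base: list[float], id_delta: list[float],
                    mask: list[float], weight: float = 1.0) -> list[float]:
    """Blend a hypothetical ID contribution into base values, gated per location by mask."""
    if not (len(base) == len(id_delta) == len(mask)):
        raise ValueError("base, id_delta and mask must have the same length")
    # mask 1.0 (white): full ID effect; 0.0 (black): unchanged; in between: partial.
    return [b + weight * m * d for b, d, m in zip(base, id_delta, mask)]


blended = apply_masked_id(base=[1.0, 1.0, 1.0], id_delta=[2.0, 2.0, 2.0],
                          mask=[1.0, 0.5, 0.0])
```

Here the white location receives the full delta, the gray one half of it, and the black one none.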

"Apply PuLID" Outputs:

- MODEL: The input model with the PuLID ID customization applied. This customized model can be used in other ComfyUI nodes for image generation. The generated images will depict the ID while still being controllable via prompt.

Adjusting these parameters allows fine-tuning the PuLID ID insertion to achieve the desired balance of ID fidelity and generation quality. Generally, a weight of 1 with method "neutral" provides a reliable starting point, which can then be adjusted based on the results. The start_at and end_at parameters provide further control over when the ID takes effect in the denoising, with the option to localize the effect via an attn_mask.
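
In practice this tuning is a small grid search: keep the seed and prompt fixed, render each combination, and compare the results. The helper below is a hypothetical sketch that only builds the settings; it assumes you transfer each dictionary's values into the "Apply PuLID" node's method, weight, start_at and end_at inputs yourself, and the candidate weights are illustrative, not recommended values.

```python
from itertools import product


def pulid_sweep(weights=(0.8, 1.0, 1.2),
                methods=("neutral", "fidelity", "style"),
                start_at=0.0, end_at=1.0) -> list[dict]:
    """Build hypothetical "Apply PuLID" settings to render and compare side by side."""
    return [
        {"method": method, "weight": weight, "start_at": start_at, "end_at": end_at}
        for method, weight in product(methods, weights)
    ]


configs = pulid_sweep()
baseline = {"method": "neutral", "weight": 1.0, "start_at": 0.0, "end_at": 1.0}
```

Starting from the neutral, weight-1 baseline and varying one input at a time makes it easier to attribute a change in likeness or image quality to a specific setting.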

For more information, please visit