FLUX ControlNet Depth-V3 & Canny-V3

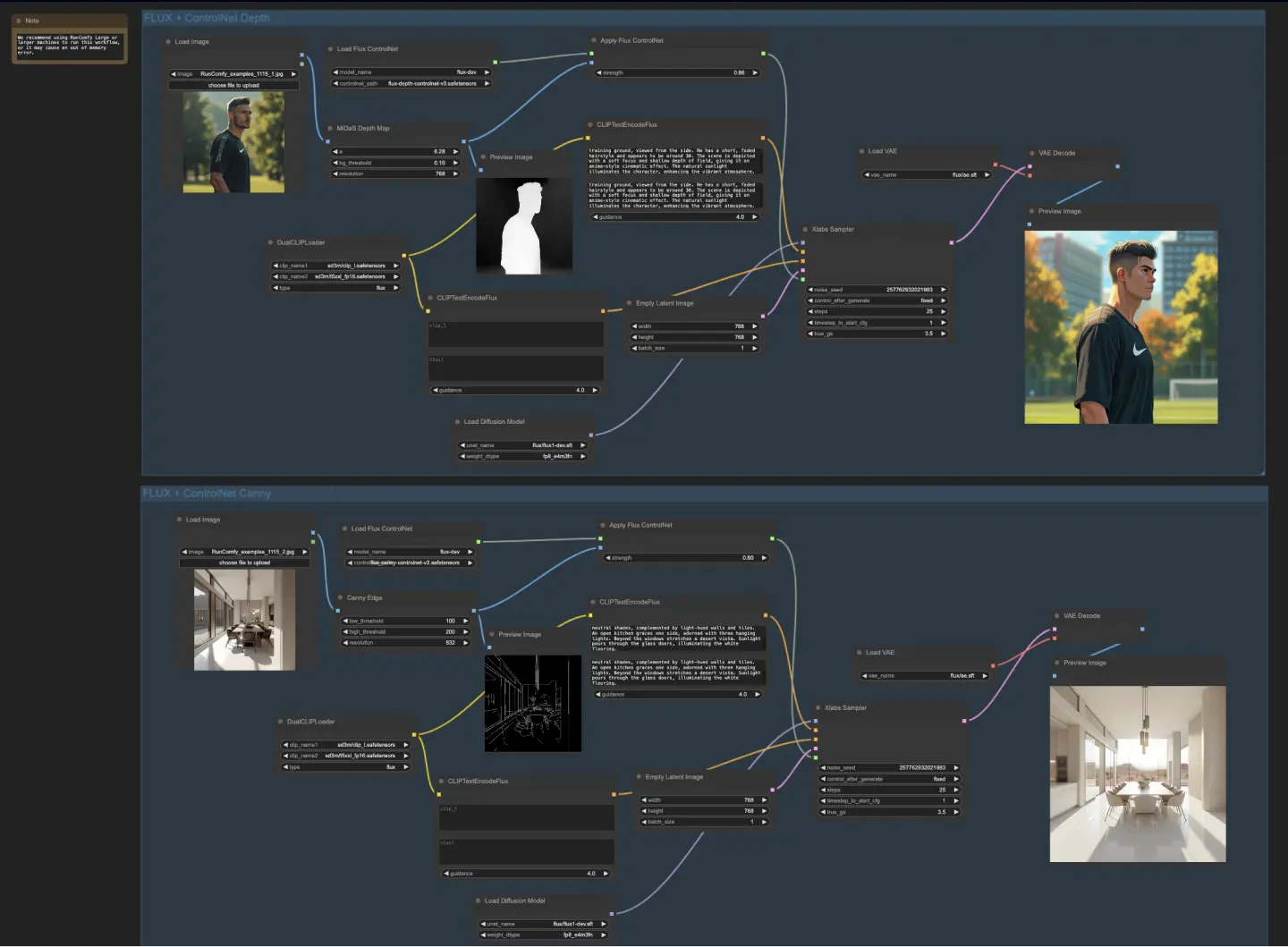

Transform your creative process with the FLUX-ControlNet Depth and Canny models, designed for FLUX.1 [dev] by XLabs AI. This ComfyUI workflow guides you through loading models, setting parameters, and combining FLUX-ControlNets for precise control over image content and structure. Whether you're using depth maps or edge detection, FLUX-ControlNet empowers you to create stunning AI art.

ComfyUI FLUX-ControlNet Workflow

- Fully operational workflows

- No missing nodes or models

- No manual setups required

- Features stunning visuals


ComfyUI FLUX-ControlNet Description

FLUX is a new image generation model developed by Black Forest Labs. The FLUX-ControlNet-Depth and FLUX-ControlNet-Canny models were created by the XLabs AI team, who also created this ComfyUI FLUX ControlNet workflow. All credit goes to them for their contribution.

About FLUX

The FLUX models are preloaded on RunComfy, named flux/flux-schnell and flux/flux-dev.

- When launching a RunComfy Medium-Sized Machine: Select the checkpoint flux-schnell, fp8 and the clip t5_xxl_fp8 to avoid out-of-memory issues.

- When launching a RunComfy Large-Sized or Above Machine: Opt for the larger checkpoint flux-dev, default and the higher-precision clip t5_xxl_fp16.
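The two recommendations above can be written down as a small lookup. This is only an illustrative sketch: the function name `recommend_settings`, the tier labels `"medium"` and `"large_or_above"`, and the key name `weight_dtype` are assumptions made for the example, not part of RunComfy or ComfyUI.

```python
# Hypothetical lookup table mirroring the two recommendations in the text.
# Key names ("checkpoint", "weight_dtype", "clip") are illustrative only.
RECOMMENDED = {
    "medium": {
        "checkpoint": "flux-schnell",
        "weight_dtype": "fp8",
        "clip": "t5_xxl_fp8",
    },
    "large_or_above": {
        "checkpoint": "flux-dev",
        "weight_dtype": "default",
        "clip": "t5_xxl_fp16",
    },
}


def recommend_settings(machine_size: str) -> dict:
    """Return the suggested settings for a machine tier (assumed labels)."""
    key = machine_size.strip().lower()
    if key not in RECOMMENDED:
        raise ValueError(f"unknown machine size: {machine_size!r}")
    # Return a copy so callers cannot mutate the shared table.
    return dict(RECOMMENDED[key])
```

For example, `recommend_settings("medium")` returns the fp8 combination intended to avoid out-of-memory issues.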


🌟The following FLUX-ControlNet Workflow is specifically designed for the FLUX.1 [dev] model.🌟

About FLUX-ControlNet Workflow (FLUX-ControlNet-Depth-V3 and FLUX-ControlNet-Canny-V3)

We present two exceptional FLUX-ControlNet Workflows: FLUX-ControlNet-Depth and FLUX-ControlNet-Canny, each offering unique capabilities to enhance your creative process.

1. How to Use ComfyUI FLUX-ControlNet-Depth-V3 Workflow

The FLUX-ControlNet Depth model is first loaded using the "LoadFluxControlNet" node. Select the "flux-depth-controlnet.safetensors" model for optimal depth control.

- flux-depth-controlnet

- flux-depth-controlnet-v2

- flux-depth-controlnet-v3: this ControlNet is trained on, and works at, 1024x1024 resolution; it is the improved, more realistic version

Connect the output of this node to the "ApplyFluxControlNet" node. Also, connect your depth map image to the image input of this node. The depth map should be a grayscale image where closer objects are brighter and distant objects are darker, allowing FLUX-ControlNet to interpret depth information accurately.
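To make the "closer is brighter" convention concrete, here is a minimal sketch that converts a grid of per-pixel distances into 8-bit grayscale values. It assumes the input holds distances (larger means farther away); the function name `depth_to_grayscale` is hypothetical, and a real depth estimator may output values in a different convention that would need its own conversion.

```python
def depth_to_grayscale(distances):
    """Map distances (larger = farther) to 0-255 gray, closer = brighter."""
    flat = [d for row in distances for d in row]
    near, far = min(flat), max(flat)
    span = far - near
    if span == 0:
        # A perfectly flat scene carries no depth cue; use mid-gray.
        return [[128 for _ in row] for row in distances]
    # The nearest pixel maps to 255 (white), the farthest to 0 (black).
    return [[round(255 * (far - d) / span) for d in row] for row in distances]


gray = depth_to_grayscale([[1.0, 2.0], [5.0, 6.0]])
# gray == [[255, 204], [51, 0]]
```

The nearest point becomes white and the farthest black, which matches the grayscale layout described above.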

You can generate the depth map from an input image using a depth estimation model. Here, the "MiDaS-DepthMapPreprocessor" node is used to convert the loaded image into a depth map suitable for FLUX-ControlNet. Key params:

- Threshold = 6.28 (affects sensitivity to edges)

- Depth scale = 0.1 (amount depth map values are scaled by)

- Output Size = 768 (resolution of depth map)

In the "ApplyFluxControlNet" node, the Strength parameter determines how much the generated image is influenced by the FLUX-ControlNet depth conditioning. Higher strength will make the output adhere more closely to the depth structure.
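The wiring described in this section can be sketched as a graph in the style of a ComfyUI API-format workflow, where each link is written as `[source_node_id, output_index]`. The node class names come from the text above; the input names (`model_name`, `controlnet_path`, `controlnet`, `image`, `strength`), the `.safetensors` file name for the v3 model, the `LoadImage` file name, and the strength value 0.7 are assumptions for illustration and should be checked against the installed nodes. Preprocessor parameters are omitted.

```python
# Illustrative workflow fragment; input names and values are assumptions.
workflow = {
    "1": {"class_type": "LoadImage", "inputs": {"image": "input.png"}},
    "2": {
        "class_type": "MiDaS-DepthMapPreprocessor",
        "inputs": {"image": ["1", 0]},  # preprocessor parameters omitted
    },
    "3": {
        "class_type": "LoadFluxControlNet",
        "inputs": {
            "model_name": "flux-dev",
            "controlnet_path": "flux-depth-controlnet-v3.safetensors",
        },
    },
    "4": {
        "class_type": "ApplyFluxControlNet",
        "inputs": {
            "controlnet": ["3", 0],  # loaded ControlNet model
            "image": ["2", 0],       # depth map from the preprocessor
            "strength": 0.7,         # illustrative value only
        },
    },
}


def dangling_links(graph):
    """Return (node_id, input_name) pairs whose link targets a missing node."""
    missing = []
    for node_id, node in graph.items():
        for name, value in node["inputs"].items():
            if isinstance(value, list) and value[0] not in graph:
                missing.append((node_id, name))
    return missing
```

Running `dangling_links(workflow)` on this fragment returns an empty list, which is a quick way to confirm that every connection points at a node that exists before queuing the workflow.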

2. How to Use ComfyUI FLUX-ControlNet-Canny-V3 Workflow

The process is very similar to the FLUX-ControlNet-Depth workflow. First, the FLUX-ControlNet Canny model is loaded using "LoadFluxControlNet". Then, it is connected to the "ApplyFluxControlNet" node.

- flux-canny-controlnet

- flux-canny-controlnet-v2

- flux-canny-controlnet-v3: this ControlNet is trained on, and works at, 1024x1024 resolution; it is the improved, more realistic version

The input image is converted to a Canny edge map using the "CannyEdgePreprocessor" node, optimizing it for FLUX-ControlNet. Key params:

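- Low Threshold = 100 (gradients weaker than this are discarded)

- High Threshold = 200 (gradients stronger than this are always kept as edges; values in between are kept only if connected to a strong edge)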

- Size = 832 (edge map resolution)
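To illustrate how the two thresholds interact, the sketch below applies hysteresis to a single row of gradient magnitudes. It is a simplified, one-dimensional illustration, not the "CannyEdgePreprocessor" implementation: real Canny detection works in 2D and includes smoothing and non-maximum suppression, and implementations differ on whether boundary values count as inside or outside a threshold. The function name `hysteresis_1d` is hypothetical.

```python
def hysteresis_1d(magnitudes, low=100, high=200):
    """Return a keep/discard flag per sample using two-threshold hysteresis."""
    strong = [m >= high for m in magnitudes]
    weak = [low <= m < high for m in magnitudes]
    keep = list(strong)  # strong samples are always edges
    changed = True
    while changed:
        changed = False
        for i in range(len(magnitudes)):
            if weak[i] and not keep[i]:
                left = i > 0 and keep[i - 1]
                right = i + 1 < len(magnitudes) and keep[i + 1]
                if left or right:
                    # A weak sample survives only next to a kept sample.
                    keep[i] = True
                    changed = True
    return keep


flags = hysteresis_1d([50, 150, 250, 120, 90, 150])
# flags == [False, True, True, True, False, False]
```

The last sample (150) falls between the thresholds but is not connected to any strong edge, so it is dropped. Lowering the thresholds keeps more, fainter edges; raising them keeps only the most pronounced outlines.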

The resulting Canny edge map is connected to the "ApplyFluxControlNet" node. Again, use the Strength parameter to control how much the edge map influences the FLUX-ControlNet generation.

3. For Both ComfyUI FLUX-ControlNet-Depth-V3 and ComfyUI FLUX-ControlNet-Canny-V3

In both FLUX-ControlNet workflows, the CLIP-encoded text prompt drives the image contents, while the FLUX-ControlNet conditioning controls the structure and geometry based on the depth or edge map.

By combining different FLUX-ControlNets, input modalities like depth and edges, and tuning their strength, you can achieve fine-grained control over both the semantic content and structure of the images generated by FLUX-ControlNet.
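As a conceptual sketch of what tuning strength means when more than one ControlNet is active, the example below adds each control signal to a base feature vector, scaled by its own strength. This reflects the general ControlNet idea of adding weighted residuals; it is an assumption for illustration, not the XLabs implementation, and `combine_controls` and the toy vectors are hypothetical.

```python
def combine_controls(base, controls):
    """Add strength-weighted control residuals to base features.

    base:     list of floats (toy stand-in for model features)
    controls: list of (residual, strength) pairs, one per ControlNet
    """
    out = list(base)
    for residual, strength in controls:
        if len(residual) != len(base):
            raise ValueError("residual length must match base length")
        for i, r in enumerate(residual):
            out[i] += strength * r
    return out


depth_residual = [1.0, 0.0]   # toy values
canny_residual = [0.0, 2.0]   # toy values
mixed = combine_controls([1.0, 2.0],
                         [(depth_residual, 0.5), (canny_residual, 0.25)])
# mixed == [1.5, 2.5]
```

A strength of 0 removes that ControlNet's influence entirely, while larger values pull the result further toward its depth or edge structure.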

License: controlnet.safetensors falls under the Non-Commercial License

License


The FLUX.1 [dev] Model is licensed by Black Forest Labs, Inc. under the FLUX.1 [dev] Non-Commercial License. Copyright Black Forest Labs, Inc.

IN NO EVENT SHALL BLACK FOREST LABS, INC. BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH USE OF THIS MODEL.