Hunyuan LoRA

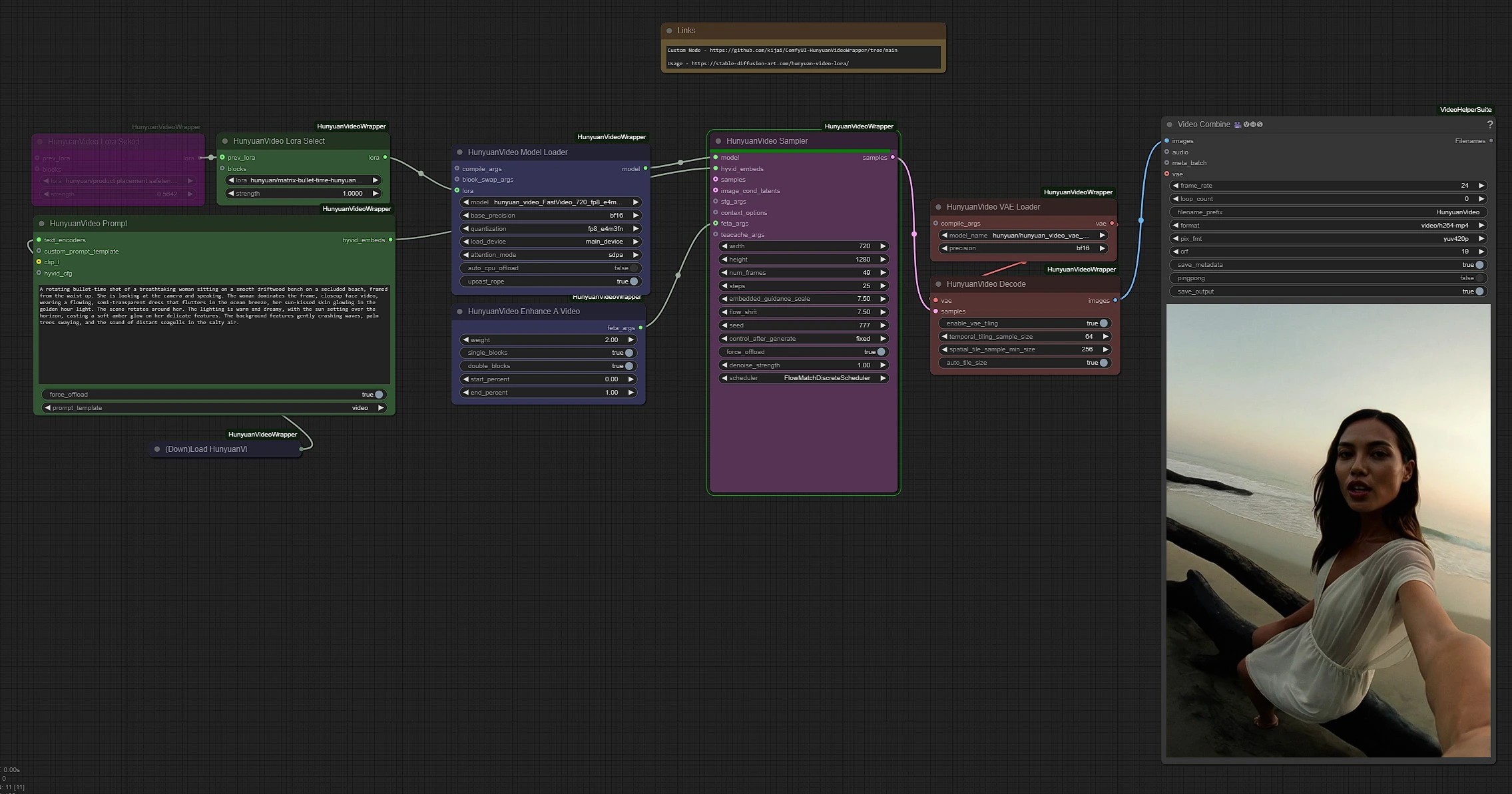

The Hunyuan LoRA workflow lets you download pre-trained LoRAs and apply them to Hunyuan Video for better style control and character consistency. Instead of training a new LoRA, you can reuse existing ones to steer specific looks, facial identities, or artistic styles in video generation. The workflow makes it easy to experiment with different LoRAs and combine them into cohesive, visually refined results. Whether you're working on AI animations, cinematic sequences, or stylized video effects, Hunyuan LoRAs give you more control over the look and consistency of your generated content.

ComfyUI Hunyuan LoRA Workflow

- Fully operational workflows

- No missing nodes or models

- No manual setups required

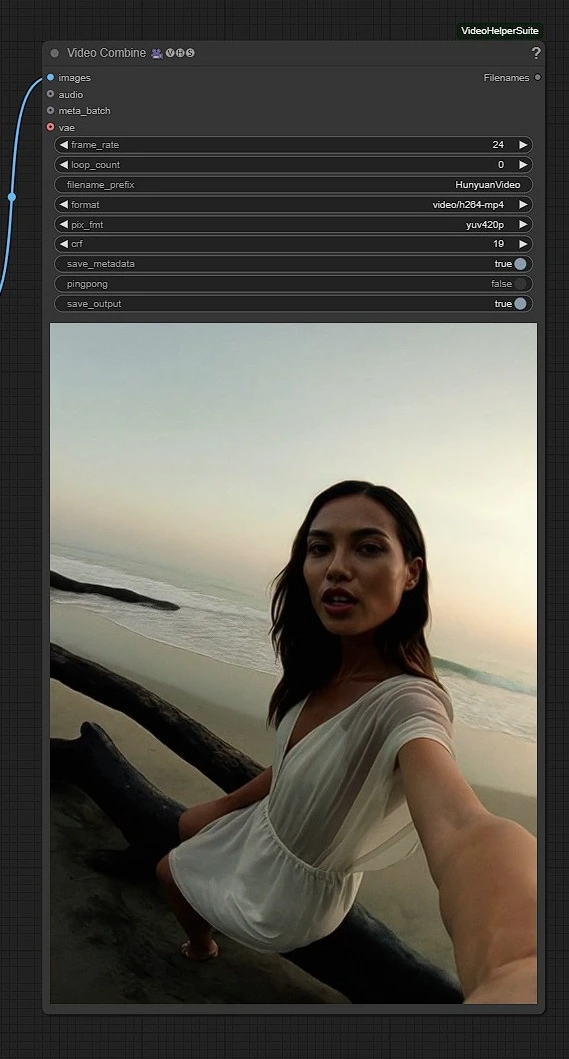

- Features stunning visuals


ComfyUI Hunyuan LoRA Description

Hunyuan LoRA: Advanced Video Generation with Custom Styles

Hunyuan Video is an open-source video foundation model for text-to-video generation. Built on large-scale data processing, an adaptive model architecture, and efficient generation, it produces high-quality visuals with smooth, fluid motion.

The Hunyuan LoRA workflow builds on this by letting you apply pre-trained LoRAs to your video generations. Instead of relying on text prompts alone, you can load existing Hunyuan LoRA models to refine the style, keep characters consistent, or add specific artistic effects. This simplifies customizing video output and makes a cohesive, controlled look easier to achieve.

With Hunyuan LoRA, creators can apply unique aesthetics, preserve facial identities, or replicate artistic styles across multiple videos, which makes it a powerful tool for AI animation, cinematic sequences, and personalized video content. Whether you're working on character consistency, scene stylization, or detailed video refinement, the Hunyuan LoRA workflow offers a seamless way to elevate your AI-generated videos. 🚀

How to Use the Hunyuan LoRA Workflow

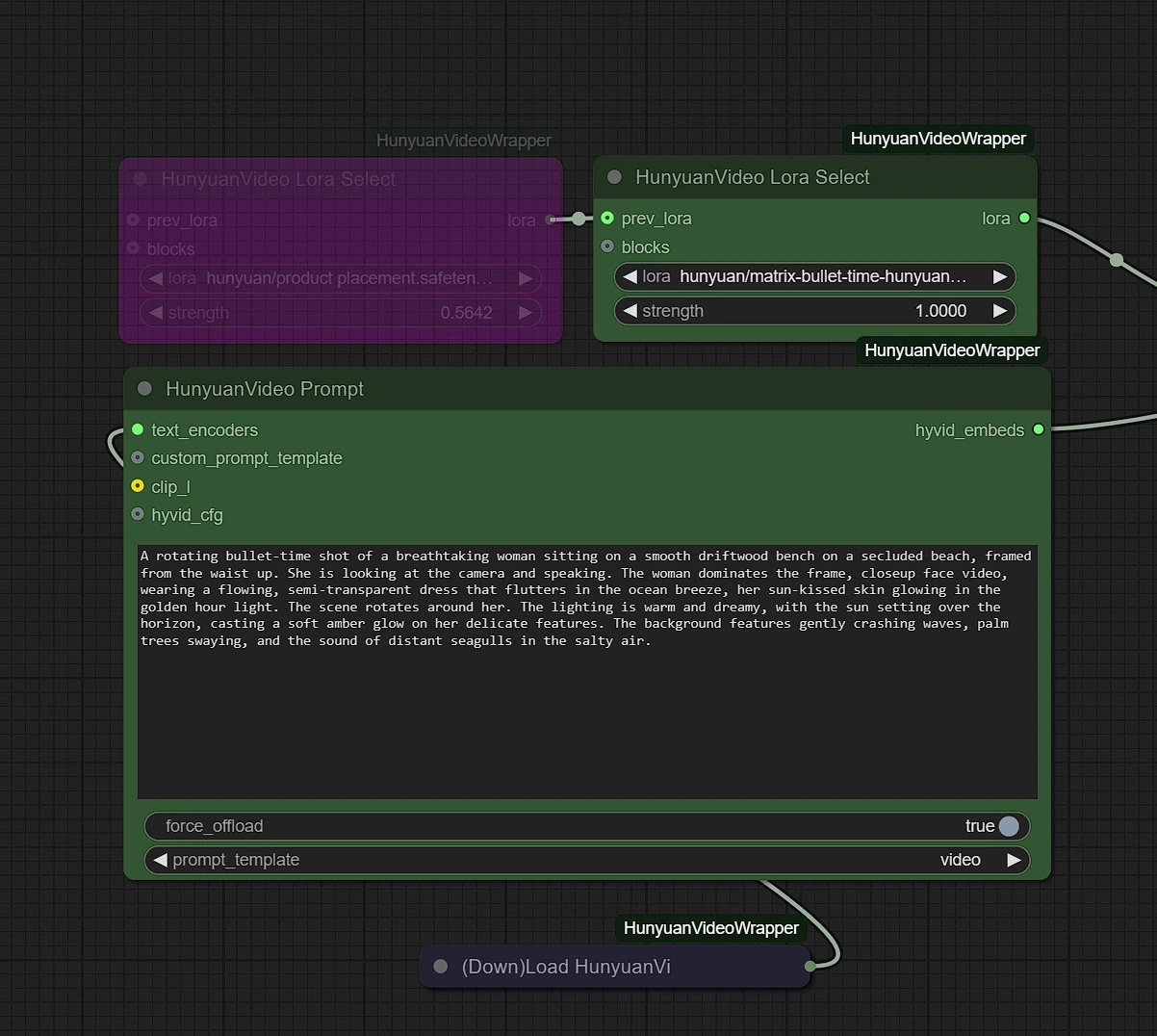

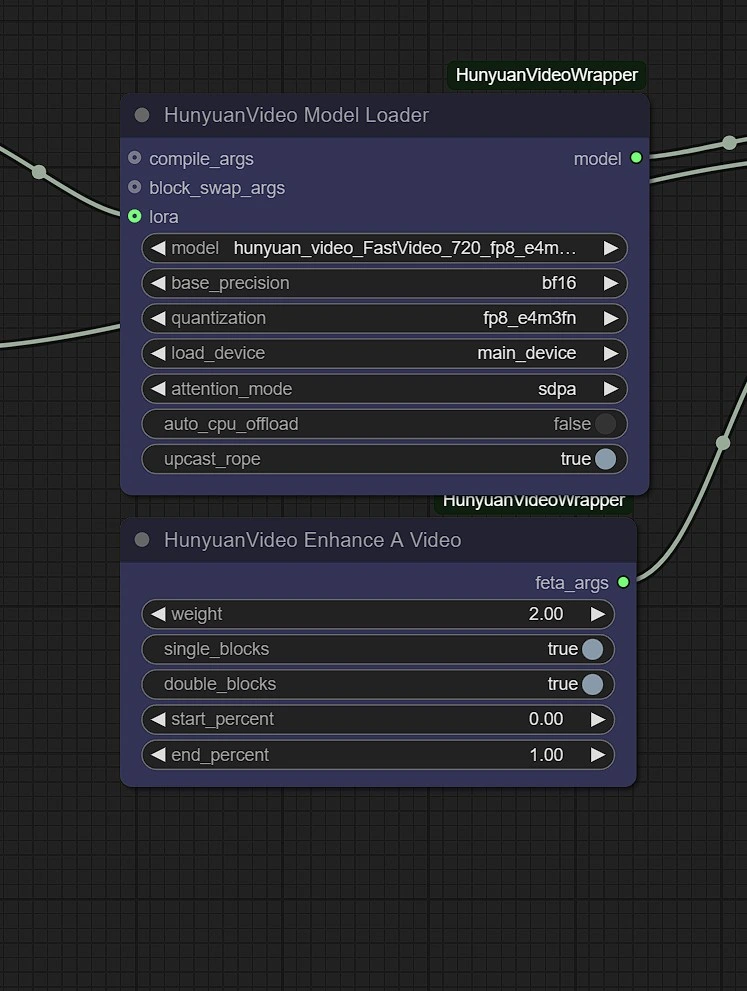

Groups are color-coded for clarity:

- Green - Inputs for Hunyuan Lora implementation

- Purple - Models

- Pink - Hunyuan Sampler

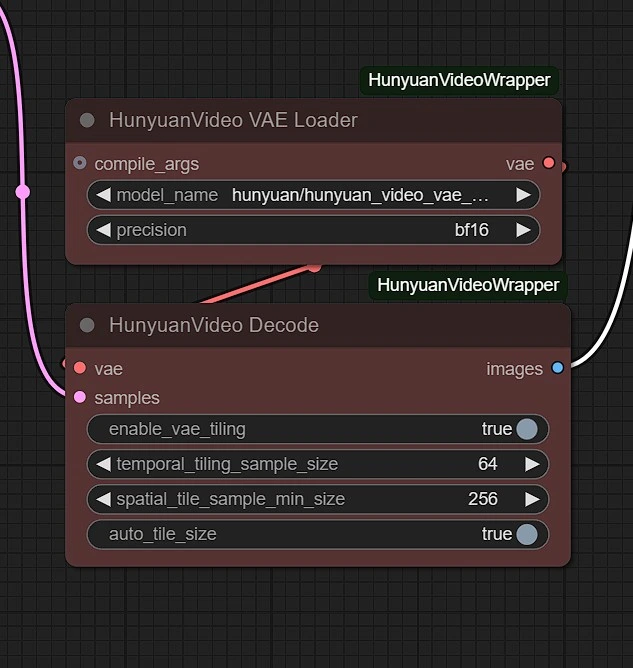

- Red - VAE + Decoding

- Grey - Output Video

Enter your positive prompt and load the Hunyuan LoRA(s) you want in the green nodes, then adjust video settings such as duration and resolution in the pink sampler node.

Input (Hunyuan LoRA and Text)

- Download Hunyuan LoRA models from the Civitai website and place them in ComfyUI > models > loras > Hunyuan to keep them organized. Refresh the browser to see them in the HunyuanVideo Lora Select list.

- Enter your prompt in the text box, including any trigger words the LoRA needs to activate.
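The folder step above can be scripted. This is a minimal sketch, assuming a local ComfyUI install whose root path you supply and LoRAs downloaded as `.safetensors` files; `install_lora` and the default `Hunyuan` subfolder are illustrative names, not part of ComfyUI, and restricting to `.safetensors` is a deliberate simplification.

```python
import shutil
from pathlib import Path


def install_lora(downloaded_file: Path, comfyui_root: Path, subfolder: str = "Hunyuan") -> Path:
    """Move a downloaded LoRA into ComfyUI/models/loras/<subfolder> and return its new path.

    Hypothetical helper. Assumption: the LoRA list is read from models/loras,
    including subfolders, so the "Hunyuan" subfolder is only for organization.
    """
    if downloaded_file.suffix.lower() != ".safetensors":
        raise ValueError(f"expected a .safetensors file, got {downloaded_file.name}")
    target_dir = comfyui_root / "models" / "loras" / subfolder
    target_dir.mkdir(parents=True, exist_ok=True)  # create the organizing subfolder if needed
    target = target_dir / downloaded_file.name
    shutil.move(str(downloaded_file), str(target))  # move, so no stray copy is left in Downloads
    return target
```

For example, `install_lora(Path.home() / "Downloads" / "my_style.safetensors", Path("ComfyUI"))` would place the (hypothetical) file at `ComfyUI/models/loras/Hunyuan/my_style.safetensors`; refresh the browser afterwards so the list picks it up.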

You can chain multiple video LoRAs by duplicating the HunyuanVideo Lora Select node and connecting the copies in sequence, just as you would chain LoRA loaders for standard Stable Diffusion models.
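Conceptually, each node in a LoRA chain passes along the LoRAs selected before it plus its own, each with its own strength. The sketch below models that idea in plain Python; `LoraSelection`, `stack`, the filenames, and the 1.0 default strength are hypothetical illustrations, not the actual node implementation.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class LoraSelection:
    """One selected LoRA plus everything chained before it (hypothetical model)."""
    name: str
    strength: float = 1.0
    previous: Optional["LoraSelection"] = None

    def stack(self) -> Tuple[Tuple[str, float], ...]:
        """Return every chained LoRA as (name, strength), in connection order."""
        earlier = self.previous.stack() if self.previous else ()
        return earlier + ((self.name, self.strength),)


# Duplicating the node and wiring one copy into the next builds the chain.
style = LoraSelection("Hunyuan/anime_style.safetensors", 0.8)
character = LoraSelection("Hunyuan/my_character.safetensors", 1.0, previous=style)
print(character.stack())
```

Modeling the chain as a linked list mirrors the wiring: the last node in the chain carries the full set of LoRAs to the model.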

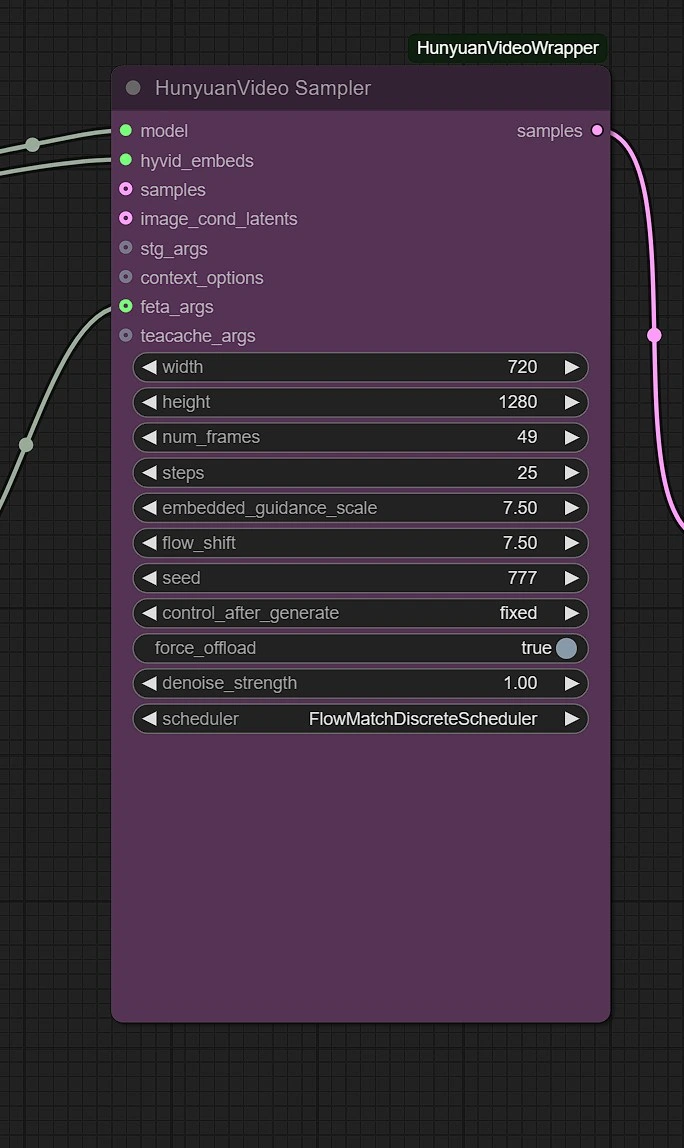

Sampler

You can adjust the following parameters for your Hunyuan LoRA generation:

- Image Resolution - The maximum is 1280 × 720 px; larger frames require more VRAM.

- Frames - The number of frames to generate (24 frames = 1 second; maximum 125 frames, about 5 seconds).
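The frame and resolution limits above translate into simple arithmetic. This sketch uses the workflow's stated values (24 fps, 125 frames, 1280 × 720) and two labeled assumptions: that frame counts should have the form 4n + 1, which matches HunyuanVideo's causal 3D VAE compressing time by a factor of 4 while keeping the first frame, and that a portrait 720 × 1280 frame is equally allowed. `frames_for_duration` and `fits_max_resolution` are hypothetical helpers.

```python
FPS = 24                  # workflow notes: 24 frames = 1 second
MAX_FRAMES = 125          # workflow notes: maximum frame count
MAX_W, MAX_H = 1280, 720  # workflow notes: maximum resolution


def frames_for_duration(seconds: float) -> int:
    """Convert a duration to the nearest 4n + 1 frame count, capped at MAX_FRAMES.

    The 4n + 1 rule is an assumption based on the VAE's 4x temporal compression.
    """
    if seconds < 0:
        raise ValueError("duration must be non-negative")
    raw = round(seconds * FPS)
    frames = 4 * ((raw + 1) // 4) + 1  # nearest 4n + 1; ties round up
    return min(frames, MAX_FRAMES)     # 125 is itself 4 * 31 + 1


def fits_max_resolution(width: int, height: int) -> bool:
    """True if the frame fits within 1280 x 720 in landscape or (assumed) portrait."""
    long_side, short_side = max(width, height), min(width, height)
    return long_side <= MAX_W and short_side <= MAX_H


frames = frames_for_duration(5)
print(frames, round(frames / FPS, 2))
```

Asking for 5 seconds yields 121 frames (just over 5 s at 24 fps), and any request beyond roughly 5.2 seconds is capped at 125 frames.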

Models

The models in this group download automatically on the first run. Allow 3 to 5 minutes for the download to finish in your temporary storage.

Model folders for the Hunyuan LoRA implementation:

- ComfyUI > models > diffusion_models

- ComfyUI > models > vae
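If a run fails on a missing model, it can help to check what is actually present in these folders. A minimal sketch, assuming a local ComfyUI root you supply; the folder names come from this guide (including `loras` from the Input section), while the file-extension set and the helper names `model_inventory` and `empty_folders` are assumptions for illustration.

```python
from pathlib import Path
from typing import Dict, List

# Folders named in this guide; specific model filenames are not assumed.
MODEL_FOLDERS = ["diffusion_models", "vae", "loras"]
MODEL_EXTENSIONS = {".safetensors", ".pt", ".pth", ".ckpt"}  # assumed weight formats


def model_inventory(comfyui_root: Path) -> Dict[str, List[str]]:
    """Map each model folder to the weight files found inside it, searching subfolders."""
    inventory: Dict[str, List[str]] = {}
    for folder in MODEL_FOLDERS:
        base = comfyui_root / "models" / folder
        found: List[str] = []
        if base.is_dir():
            for p in base.rglob("*"):
                if p.is_file() and p.suffix.lower() in MODEL_EXTENSIONS:
                    found.append(p.relative_to(base).as_posix())
        inventory[folder] = sorted(found)
    return inventory


def empty_folders(comfyui_root: Path) -> List[str]:
    """Return the model folders that are missing or contain no weight files."""
    return [name for name, files in model_inventory(comfyui_root).items() if not files]
```

Calling `empty_folders(Path("ComfyUI"))` before a run points to the folder that still needs its model.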

Outputs

The rendered Hunyuan LoRA video is saved in ComfyUI's output folder.
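To grab the newest render without browsing the folder, a small script can pick the most recently modified video. A minimal sketch, assuming you pass the path of ComfyUI's output folder; the extension list and the name `latest_video` are assumptions, not something the workflow defines.

```python
from pathlib import Path
from typing import Optional

VIDEO_EXTENSIONS = {".mp4", ".webm", ".gif", ".mov"}  # assumed output formats


def latest_video(output_dir: Path) -> Optional[Path]:
    """Return the most recently modified video under output_dir, or None if there is none."""
    if not output_dir.is_dir():
        return None
    videos = [
        p for p in output_dir.rglob("*")
        if p.is_file() and p.suffix.lower() in VIDEO_EXTENSIONS
    ]
    # Newest by modification time; default=None handles an empty folder.
    return max(videos, key=lambda p: p.stat().st_mtime, default=None)
```

Searching recursively also covers setups that write renders into dated or per-workflow subfolders.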

With Hunyuan LoRA, you can go beyond basic text-to-video generation and give your videos custom styles and consistent characters. Whether you're creating AI-driven animations, cinematic sequences, or artistic projects, applying pre-trained Hunyuan LoRA models gives you greater control over your visual output. Try Hunyuan LoRA today and take your Hunyuan Video creations to the next level! 🚀