This "Flux Consistent Characters Workflow Series 1 (Input Test)" was created by Mickmumpitz on his YouTube channel. We highly recommend checking out his detailed tutorial to learn how to use this powerful Consistent Characters workflow effectively. While we've reproduced the Consistent Characters workflow and set up the environment for your convenience, all credit goes to Mickmumpitz for his excellent work on developing this Flux-based Consistent Characters solution.

If you want to create consistent characters using an existing image, please use the "Flux Consistent Characters Workflow Series 2 (Input Image)" by Mickmumpitz.

The Flux Consistent Characters (Input Test)

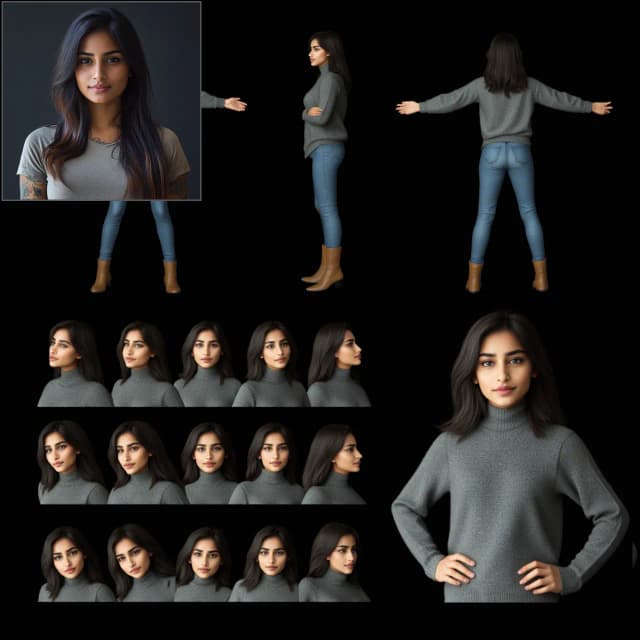

The Flux Consistent Characters (Text Input) Workflow generates AI characters from your text descriptions and keeps their appearance consistent across different viewpoints. It is built on the Flux.1 dev model, which makes the generated characters more reliable. The workflow suits AI movies, children's books, or any other project where characters need a consistent look and feel based on your text input.

How to Use the Flux Consistent Characters (Input Test) Workflow?

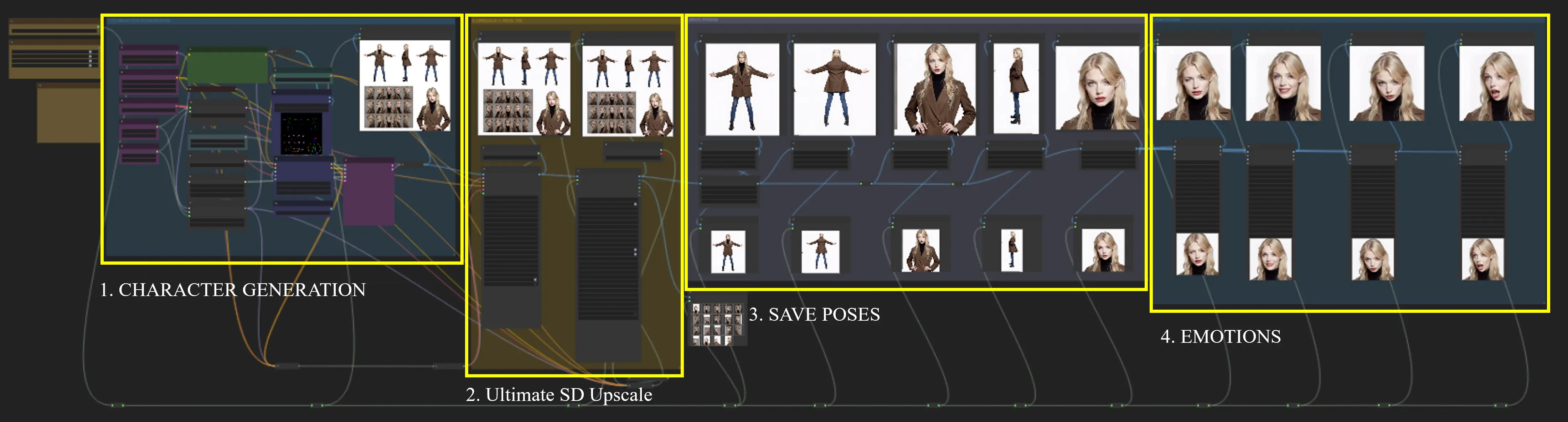

This Flux Consistent Characters Workflow is divided into four modules (Characters Generation, Upscale + Face Fix, Poses, Emotions), each designed to streamline the process of generating characters with a uniform appearance across multiple outputs.

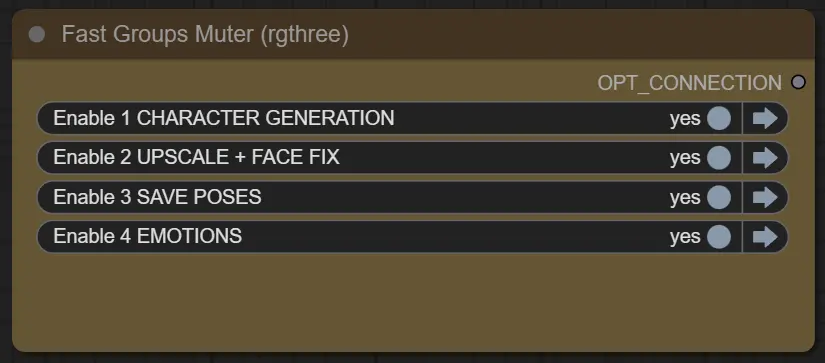

Fast Groups Muter (rgthree) Node

After loading the Fast Groups Muter (rgthree) node, modules 2, 3, and 4 are executed automatically, and no additional setup is required. This node controls the switches for all four modules, making the Consistent Characters process smoother and more efficient.

The Fast Groups Muter (rgthree) node provides a yes/no switch for each module.

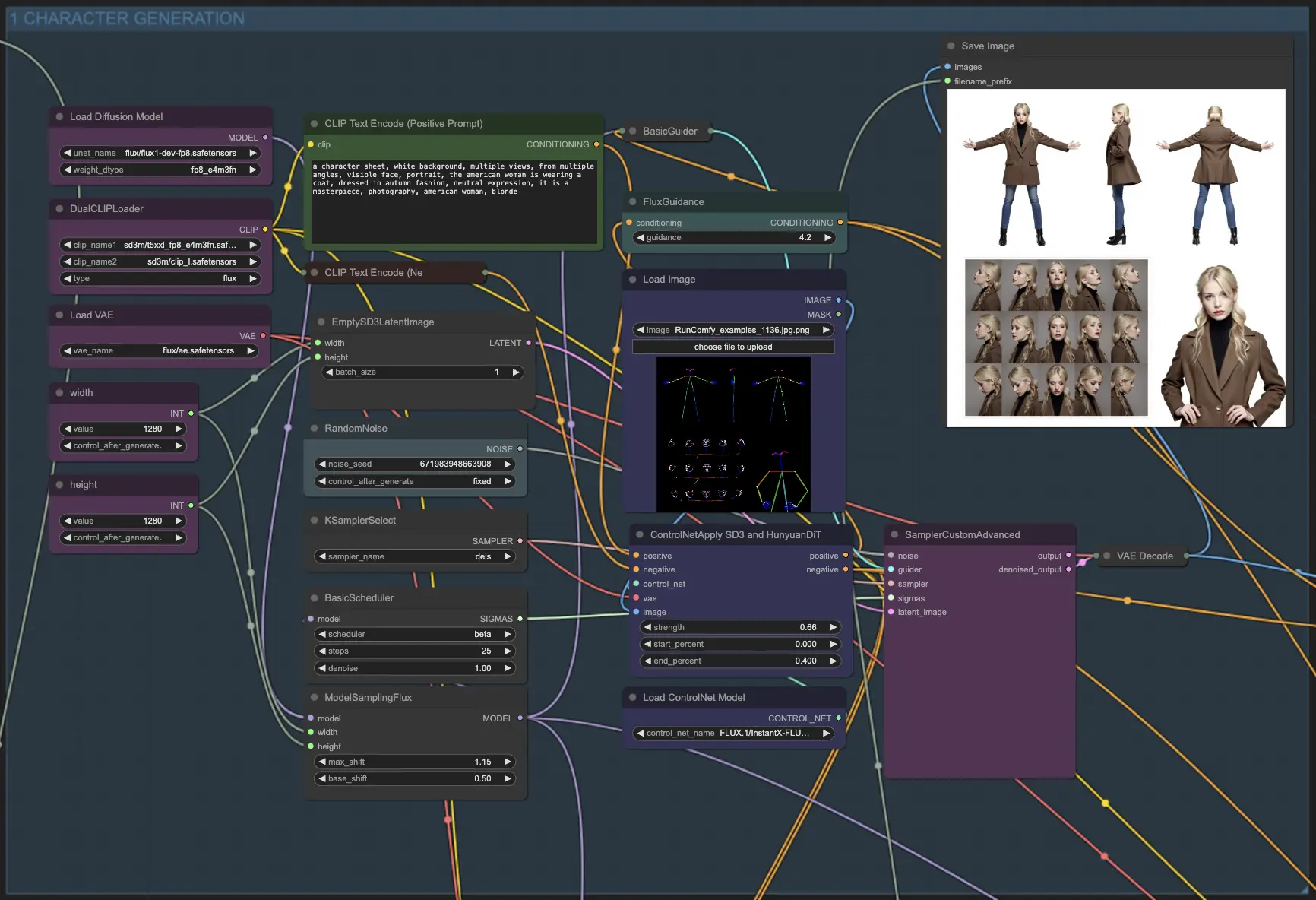

1. Characters Generation

This module uses the Flux models and a Flux ControlNet model to generate a character sheet, with prompts guiding the generation. The input, a pose sheet, serves as a reference that directs the layout of the generated characters. By crafting appropriate prompts, you can steer the model toward the character sheet you want.

Here are some prompt examples:

A character sheet featuring an American woman wearing a coat, dressed in autumn fashion, with a neutral expression. The sheet should have a white background, multiple views from various angles, and a visible face portrait. The overall style should resemble a masterpiece photography.

A character sheet depicting an elven ranger wearing a cloak made of autumn leaves, dressed in forest colors, with a determined expression. The sheet should have a parchment background, multiple views from different angles, and a visible face portrait. The ranger should be accompanied by a majestic stag, carrying a longbow and quiver on her back. The overall style should resemble a masterpiece digital painting of a female elf with long golden hair.

Tip: If the generated Consistent Characters sheet does not meet your expectations, try adjusting the seed value to regenerate the output with variations.
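The prompt examples above share a common structure: subject, outfit, expression, background, and style. A minimal sketch of a helper that assembles such a prompt is shown here. The function name `build_character_sheet_prompt` and its arguments are hypothetical and are not part of the workflow; the workflow itself only needs the final text.

```python
def build_character_sheet_prompt(
    subject,
    outfit,
    expression,
    background="white",
    style="masterpiece photography",
):
    """Assemble a character-sheet prompt from a few descriptive parts.

    Hypothetical helper: it only formats text in the same pattern as
    the example prompts in this guide.
    """
    return (
        f"A character sheet featuring {subject} wearing {outfit}, "
        f"with a {expression} expression. "
        f"The sheet should have a {background} background, "
        "multiple views from various angles, and a visible face portrait. "
        f"The overall style should resemble {style}."
    )


prompt = build_character_sheet_prompt(
    subject="an American woman",
    outfit="a coat in autumn fashion",
    expression="neutral",
)
print(prompt)
```

Keeping the "multiple views" and "visible face portrait" phrases fixed while varying the other parts makes it easier to compare results across seeds.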

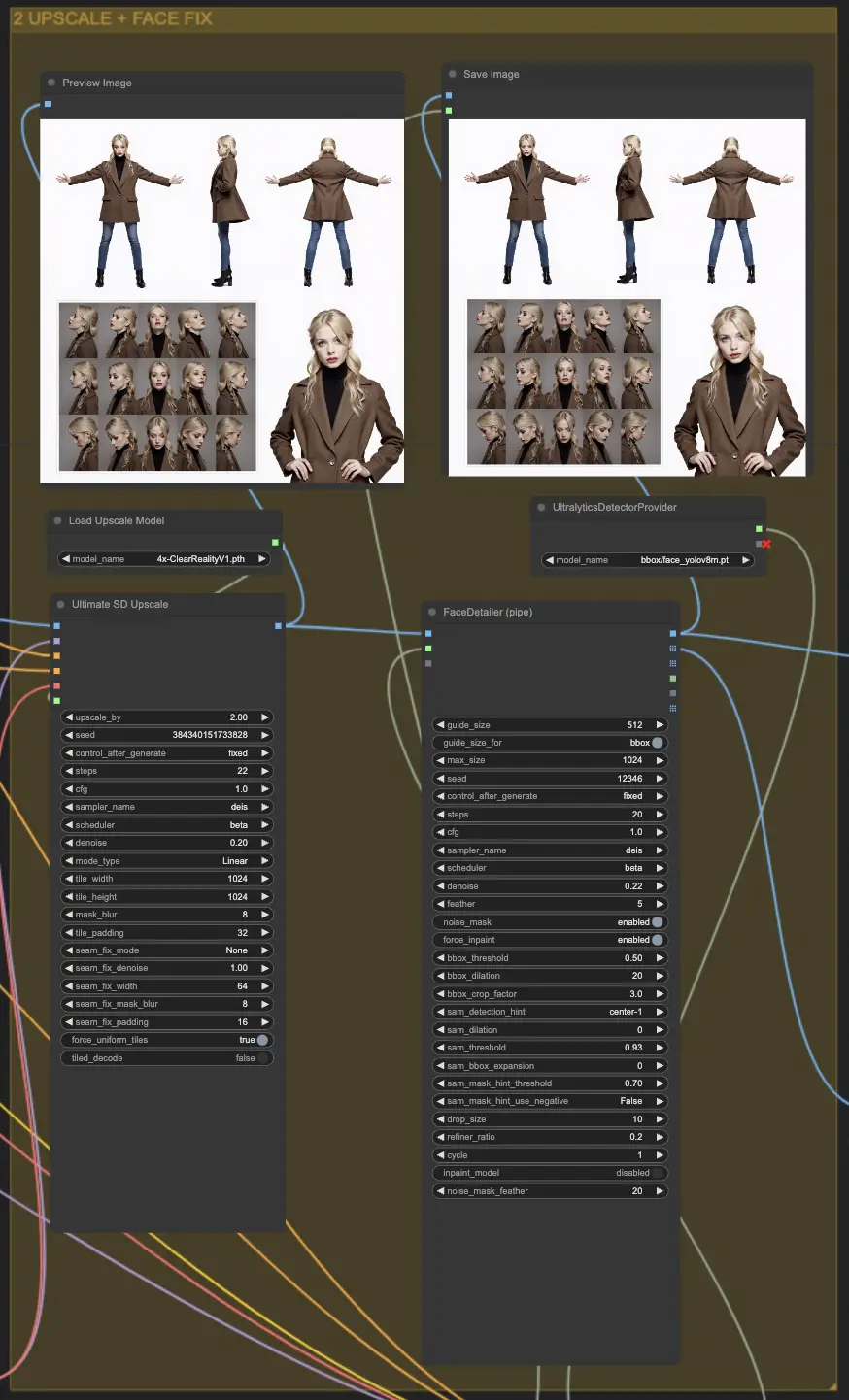

2. Upscale + Face Fix

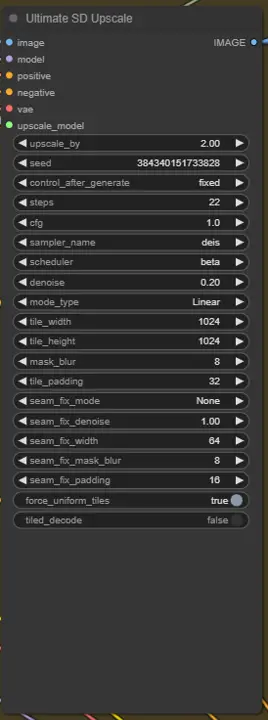

2.1 Ultimate SD Upscale

Ultimate SD Upscale is a node used in image generation pipelines to enhance image resolution by dividing the image into smaller tiles, processing each tile individually, and then stitching them back together. This process allows for generating high-resolution images while managing memory usage and reducing artifacts that can occur when upscaling.
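To make the tiling idea concrete, here is a minimal sketch that computes the tile regions for an image, each grown by a few pixels of padding so that it overlaps its neighbors. It is an illustration of the general technique only; the function name `tile_boxes` is hypothetical, and the node's actual implementation may split and pad tiles differently.

```python
import math


def tile_boxes(width, height, tile_width, tile_height, padding=0):
    """Return (left, top, right, bottom) boxes that cover the image.

    Each box is one tile, extended by `padding` pixels on every side
    and clamped to the image bounds.
    """
    cols = math.ceil(width / tile_width)
    rows = math.ceil(height / tile_height)
    boxes = []
    for row in range(rows):
        for col in range(cols):
            left = col * tile_width
            top = row * tile_height
            right = min(left + tile_width, width)
            bottom = min(top + tile_height, height)
            # Grow the tile so it sees some pixels from its neighbors.
            boxes.append((
                max(left - padding, 0),
                max(top - padding, 0),
                min(right + padding, width),
                min(bottom + padding, height),
            ))
    return boxes


boxes = tile_boxes(2048, 2048, 1024, 1024, padding=32)
print(len(boxes))  # 4 tiles for a 2048x2048 image with 1024x1024 tiles
print(boxes[0])    # (0, 0, 1056, 1056)
```

Only one tile needs to be held in memory for processing at a time, which is why tiling keeps memory usage manageable at high resolutions.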

Parameters:

- upscale_by: The factor by which the image's width and height are multiplied. For precise dimensions, use the "No Upscale" version.
- seed: Controls randomness in the generation process. Using the same seed yields the same results.
- control_after_generate: Controls how the seed changes after each run (for example fixed, increment, decrement, or randomize).
- steps: The number of iterations during image generation. More steps result in finer details but longer processing time.
- cfg: Classifier-Free Guidance scale that adjusts how strictly the model follows the input prompts.
- sampler_name: Specifies the sampling method used for image generation.
- scheduler: Defines the noise schedule, that is, how noise levels are spaced across the sampling steps.
- denoise: Controls how much of the image is re-generated; lower values retain more detail from the original image. Recommended: 0.35 for enhancement, 0.15-0.20 for minimal changes.
- mode_type: Determines the mode of processing, such as how tiles are processed.
- tile_width and tile_height: Dimensions of the tiles used in processing. Larger sizes reduce seams but require more memory.
- mask_blur: Blurs the edges of the masks used for tile blending, smoothing transitions between tiles.
- tile_padding: Number of pixels from neighboring tiles considered during processing to reduce seams.
- seam_fix_mode: Method for correcting visible seams between tiles:
  - Bands pass: Fixes seams along rows and columns.
  - Half tile offset pass: Applies an offset to better blend seams.
  - Half tile offset + intersections pass: Includes additional passes at intersections.
- seam_fix_denoise: Denoise strength used during seam fixing.
- seam_fix_width: Width of the areas processed during seam fixing.
- seam_fix_mask_blur: Blurs the mask for smoother seam corrections.
- seam_fix_padding: Padding around seams during correction to ensure smoother results.
- force_uniform_tiles: Ensures tiles maintain a uniform size by extending edge tiles when needed, minimizing artifacts.
- tiled_decode: Decodes the image in tiles to reduce memory usage during high-resolution generation.
- Target size type: Determines how the final image size is set:
  - From img2img settings: Uses default width and height.
  - Custom size: Allows manual width and height setting (max 8192px).
  - Scale from image size: Scales based on the initial image size.
- Upscaler: The method for upscaling images before further processing (e.g., ESRGAN).
- Redraw: Controls how the image is redrawn:
  - Linear: Processes tiles sequentially.
  - Chess: Uses a checkerboard pattern for processing to reduce artifacts.
  - None: Disables redraw, focusing only on seam fixing.
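The difference between the Linear and Chess redraw options can be sketched as two ways of ordering the same grid of tiles. The snippet below is an illustration of the checkerboard idea under that assumption; the function name `tile_order` is hypothetical and the node's real traversal order may differ.

```python
def tile_order(rows, cols, mode="linear"):
    """List (row, col) tile coordinates in processing order."""
    coords = [(r, c) for r in range(rows) for c in range(cols)]
    if mode == "linear":
        # Row by row, left to right.
        return coords
    if mode == "chess":
        # Process the "white squares" first, then the "black squares",
        # so no two tiles handled in the same pass share an edge.
        first_pass = [(r, c) for r, c in coords if (r + c) % 2 == 0]
        second_pass = [(r, c) for r, c in coords if (r + c) % 2 == 1]
        return first_pass + second_pass
    raise ValueError(f"unknown mode: {mode}")


print(tile_order(2, 3, "linear"))
print(tile_order(2, 3, "chess"))
```

In the chess ordering, every tile in the second pass is redrawn next to neighbors that are already finished, which is one way a checkerboard pattern can help hide tile boundaries.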

2.2 FaceDetailer (pipe)

FaceDetailerPipe is a node designed to enhance facial details in images, using advanced image processing techniques to improve the sharpness and clarity of facial features. It is part of the ComfyUI Impact Pack, aiming to provide high-quality facial detail enhancements for various applications.

Parameters:

- image: The input image to be enhanced, serving as the main subject for facial detailing.
- guide_size: Controls the size of the guidance area used for enhancing facial features, influencing how much context is considered.
- guide_size_for: Determines whether the guide size is measured against the detected bounding box or the crop region.
- max_size: Sets the maximum size limit for processed images, helping to manage memory.
- seed: Controls randomness in the image enhancement process, allowing reproducible results when using the same seed.
- steps: The number of iterations for enhancing details. More steps result in finer details but require more processing time.
- cfg: The Classifier-Free Guidance scale, which adjusts how closely the model follows the input guidance.
- sampler_name: Defines the sampling method used for detail refinement.
- scheduler: Defines the noise schedule, that is, how noise levels are spaced across the sampling steps.
- denoise: Controls how strongly the face region is re-generated. Lower values retain more of the original details, while higher values change the face more.
- feather: Controls the smoothness of the transition between enhanced and original areas, helping to blend the changes seamlessly.
- noise_mask: Enables or disables the use of a noise mask, which restricts the added noise to the masked area.
- force_inpaint: Forces inpainting in regions that need additional enhancement or corrections.
- bbox_threshold: Sets the threshold for detecting bounding boxes around facial features, influencing sensitivity.
- bbox_dilation: Expands the detected bounding box areas to ensure that all relevant features are included during enhancement.
- bbox_crop_factor: Adjusts the cropping factor for detected bounding boxes, controlling the area of focus for enhancement.
- sam_detection_hint: Specifies additional hints or guides for the detection process.
- sam_dilation: Adjusts the dilation applied to the detected regions, allowing for broader coverage.
- sam_threshold: Defines the threshold for detection sensitivity within the SAM (Segment Anything Model) process.
- sam_bbox_expansion: Expands the bounding boxes detected by the SAM, helping to include more surrounding context.
- sam_mask_hint_threshold: Adjusts the threshold for the mask hints provided by SAM, controlling how regions are defined for masking.
- sam_mask_hint_use_negative: Determines if negative hints should be used, influencing the masking of certain regions.
- drop_size: Sets the minimum size of a detected region; smaller detections are dropped and not enhanced.
- refiner_ratio: Controls the ratio for refining facial details, balancing between preserving original features and adding clarity.
- cycle: Specifies the number of refinement cycles to apply, affecting the depth of enhancement.
- inpaint_model (Optional): Enables the use of an inpainting model for filling in missing or unclear areas during the detailing process.
- noise_mask_feather: Adjusts the feathering of the noise mask, providing a smoother transition between noisy and denoised areas.
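As a rough illustration of how bbox_dilation and bbox_crop_factor can interact, the sketch below first grows a detected face box by a fixed number of pixels and then scales it around its center to get the region that is actually cropped and enhanced. This is a simplified model under stated assumptions, not the Impact Pack's implementation; the function name `expand_bbox` and the exact arithmetic are hypothetical.

```python
def expand_bbox(bbox, dilation, crop_factor, image_size):
    """Grow a (left, top, right, bottom) box, then scale it around its center.

    `dilation` adds a fixed pixel margin; `crop_factor` multiplies the
    box width and height. The result is clamped to the image bounds.
    """
    left, top, right, bottom = bbox
    img_w, img_h = image_size

    # Step 1: fixed pixel margin on every side.
    left, top = left - dilation, top - dilation
    right, bottom = right + dilation, bottom + dilation

    # Step 2: scale around the center by crop_factor.
    cx, cy = (left + right) / 2, (top + bottom) / 2
    half_w = (right - left) * crop_factor / 2
    half_h = (bottom - top) * crop_factor / 2

    # Step 3: clamp to the image.
    return (
        max(0, round(cx - half_w)),
        max(0, round(cy - half_h)),
        min(img_w, round(cx + half_w)),
        min(img_h, round(cy + half_h)),
    )


region = expand_bbox((100, 100, 200, 200), dilation=10, crop_factor=3.0,
                     image_size=(1024, 1024))
print(region)  # (0, 0, 330, 330)
```

A larger crop factor gives the model more surrounding context (hair, neck, background) when it redraws the face, at the cost of processing a larger region.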

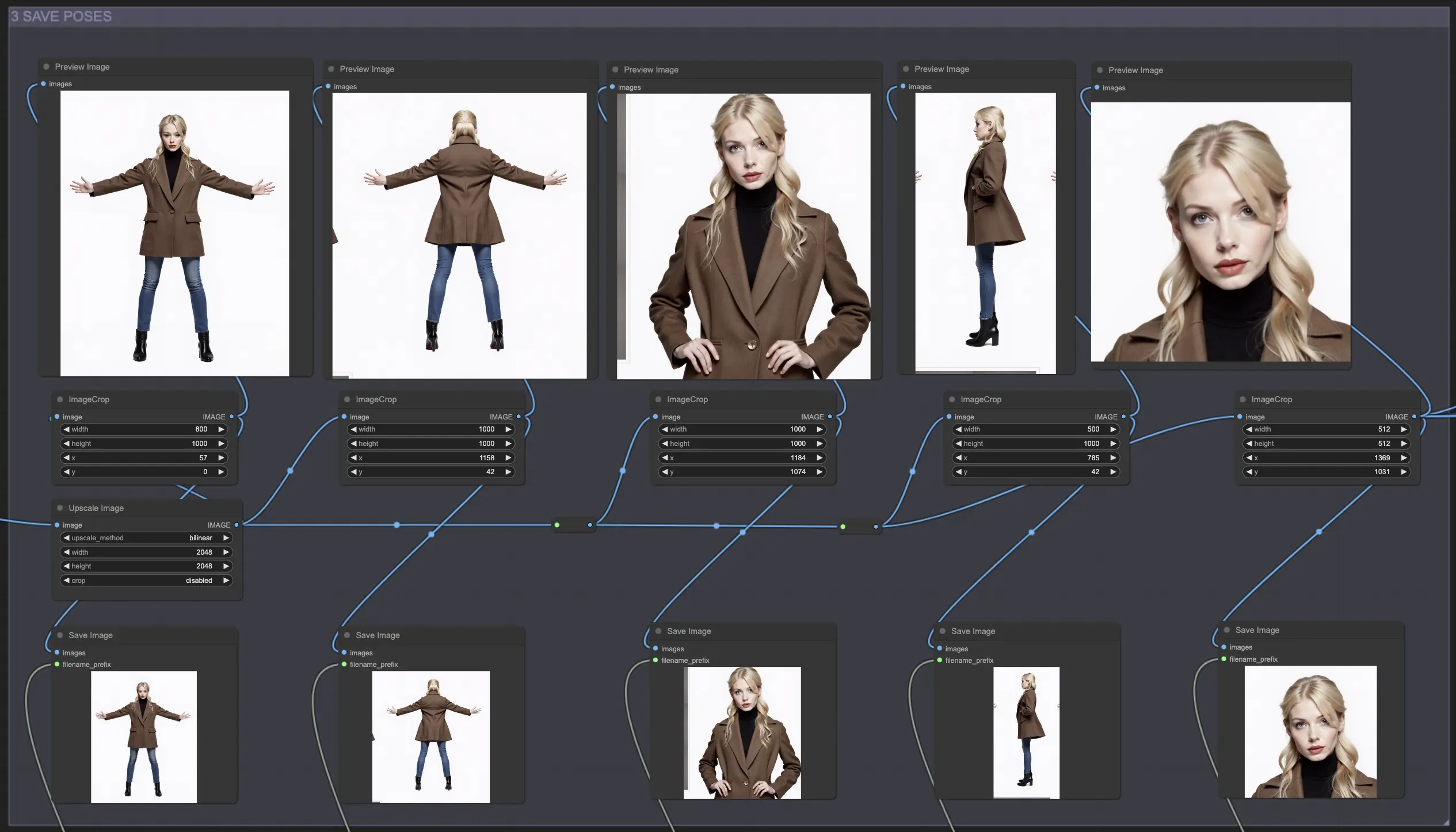

3. Poses

This module allows you to use the image crop node to separate each pose from the generated character sheet and save individual poses of the character for further use or adjustments.
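The cropping step can be sketched as computing one crop rectangle per pose. The example below assumes the poses sit in an evenly spaced grid, which is a simplification: in the actual workflow the crop regions are set on each image crop node and need not be uniform. The function name `pose_crop_boxes` is hypothetical.

```python
def pose_crop_boxes(sheet_width, sheet_height, rows, cols):
    """Return one (left, top, right, bottom) crop box per grid cell.

    Assumes the character sheet is an evenly spaced rows x cols grid.
    """
    cell_w = sheet_width // cols
    cell_h = sheet_height // rows
    return [
        (c * cell_w, r * cell_h, (c + 1) * cell_w, (r + 1) * cell_h)
        for r in range(rows)
        for c in range(cols)
    ]


pose_boxes = pose_crop_boxes(2048, 1024, rows=1, cols=4)
for index, box in enumerate(pose_boxes):
    print(f"pose {index}: {box}")
```

Each box could then be passed to any image-cropping routine to save the pose as its own file.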

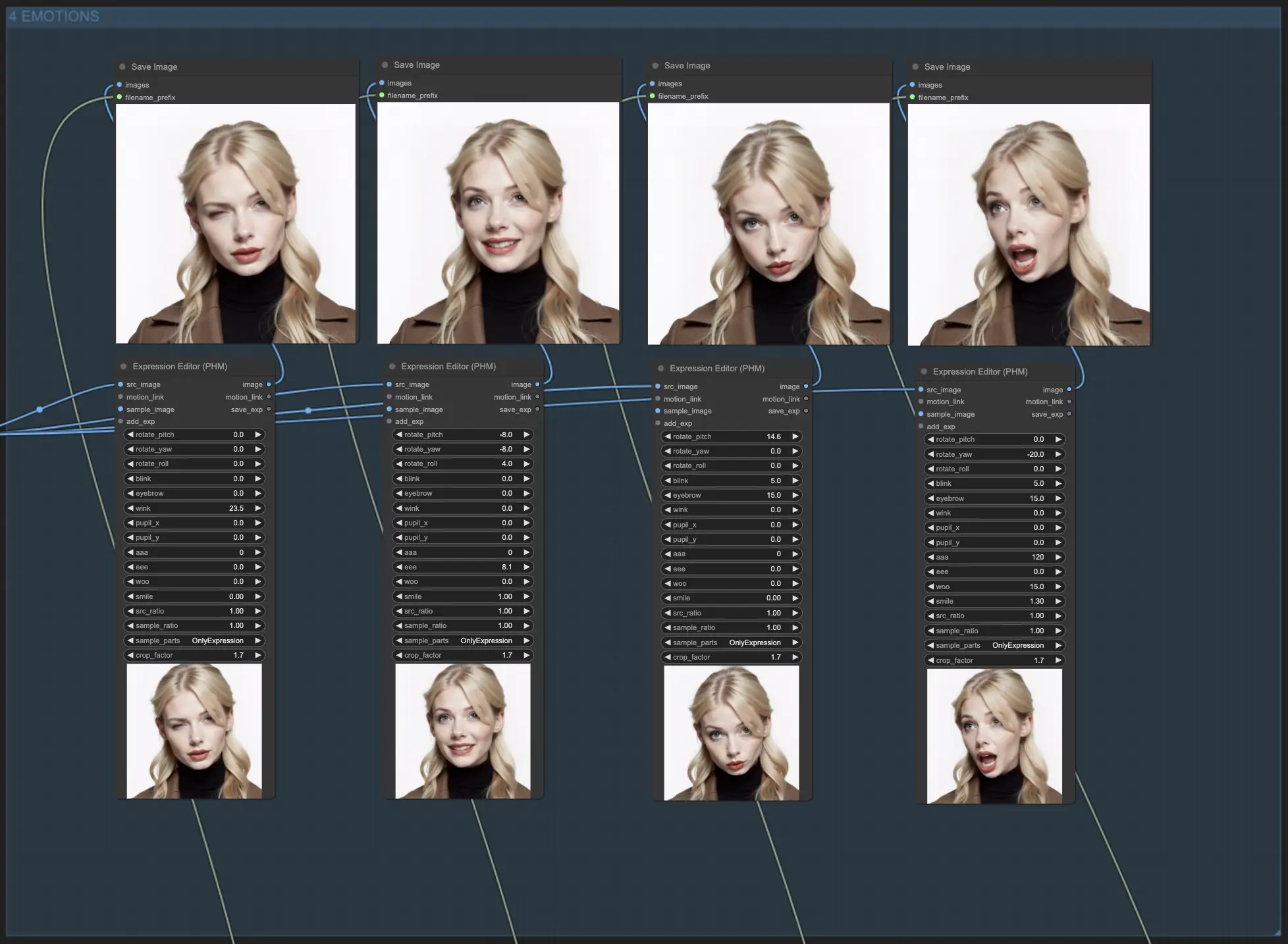

4. Emotions

This module uses the Photo Expression Editor (PHM) node to adjust facial expressions in photos. Its parameters allow fine-tuning of various facial aspects, such as head movement, blinking, and smiling.

Expression Editor Parameters:

- rotate_pitch: Controls the up-and-down movement of the head.
- rotate_yaw: Adjusts the side-to-side movement of the head.
- rotate_roll: Determines the tilt angle of the head.
- blink: Controls the intensity of eye blinks.
- eyebrow: Adjusts eyebrow movements.
- wink: Controls winking.
- pupil_x: Moves the pupils horizontally.
- pupil_y: Moves the pupils vertically.
- aaa: Controls the mouth shape for the "aaa" vowel sound.
- eee: Controls the mouth shape for the "eee" vowel sound.
- woo: Controls the mouth shape for the "woo" vowel sound.
- smile: Adjusts the degree of a smile.
- src_ratio: Determines the ratio of the source expression to be applied.
- sample_ratio: Determines the ratio of the sample expression to be applied.
- sample_parts: Specifies which parts of the sample expression to apply ("OnlyExpression", "OnlyRotation", "OnlyMouth", "OnlyEyes", "All").
- crop_factor: Controls the cropping factor of the face region.
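One way to keep track of expression settings is to store them as named presets that start from a neutral face and override only a few parameters. The sketch below uses the parameter names listed above, but the helper `make_expression` and all numeric values are hypothetical; they are not ranges or defaults documented by the node, so tune them in the node itself.

```python
# Neutral starting point: every expression parameter at zero (assumed).
EXPRESSION_DEFAULTS = {
    "rotate_pitch": 0.0, "rotate_yaw": 0.0, "rotate_roll": 0.0,
    "blink": 0.0, "eyebrow": 0.0, "wink": 0.0,
    "pupil_x": 0.0, "pupil_y": 0.0,
    "aaa": 0.0, "eee": 0.0, "woo": 0.0, "smile": 0.0,
}


def make_expression(**overrides):
    """Return a full parameter set, overriding selected values."""
    unknown = set(overrides) - set(EXPRESSION_DEFAULTS)
    if unknown:
        raise KeyError(f"unknown expression parameters: {sorted(unknown)}")
    return {**EXPRESSION_DEFAULTS, **overrides}


# Illustrative values only.
happy = make_expression(smile=1.0, eyebrow=2.0)
surprised = make_expression(aaa=20.0, eyebrow=5.0)
print(happy["smile"], happy["blink"])
```

Rejecting unknown names catches typos such as `smlie` before a preset is copied into the node's inputs.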

Together, the Flux models and the workflow's streamlined modules make it easy to keep a character's appearance consistent across outputs, so you can bring your characters to life and create a more immersive experience for your audience.